Foundations of AI in Video

AI sits at the center of modern approaches to video understanding. In this context, AI refers to systems that interpret visual data, reason over sequences, and provide actionable outputs. AI systems often combine classical computer vision with statistical learning and natural language components. Machine learning algorithms power many of these systems. They train models that can detect objects, classify actions, and summarise content across a video sequence. The shift from frame-level detection to semantic comprehension is not only technical. It also changes how operators and systems make decisions.

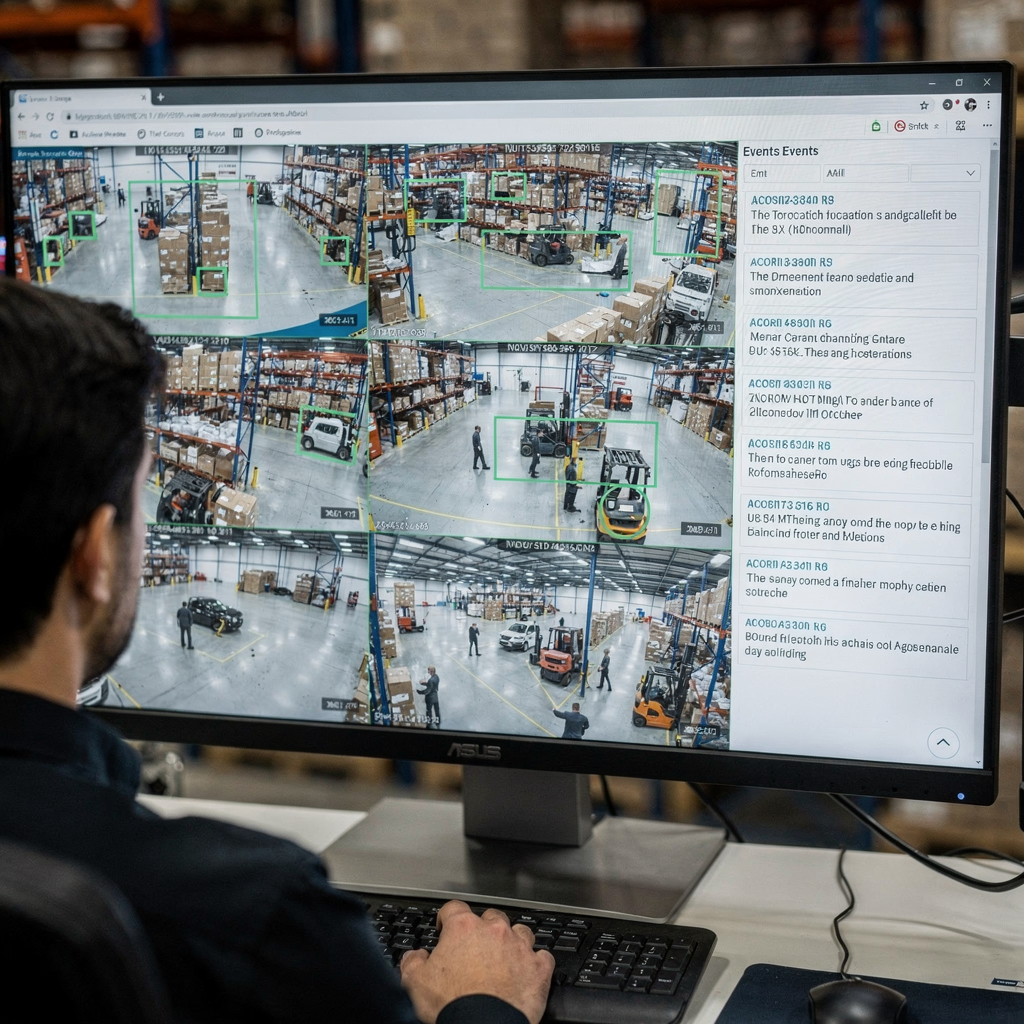

Early pipelines focused on one frame at a time. A single frame could show a person or a vehicle. Systems would produce a bounding box and a label. Those outputs answered simple detection tasks. Today, AI builds on those per-frame outputs and learns context across many frames. It reasons about causality, intent, and behaviour over time. That high-level understanding reduces false positives and supports decision support in control rooms. For example, visionplatform.ai adds a reasoning layer that converts detections into explained situations and recommended actions. This approach helps operators move from raw alerts to verified incidents.

Key performance metrics guide evaluation. Accuracy measures how often decisions match ground truth. Throughput measures how many frames per second a system can process. Latency measures time from input to decision. Resource use matters, too. Edge deployments may need models tuned for lower memory and compute. Benchmarks therefore report accuracy, frames processed per second, and end-to-end latency. Practitioners also track the cost of false alarms because volume matters in operational settings.
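The three headline metrics above can be computed from a simple log of processed frames. The following is a minimal sketch, assuming a hypothetical `FrameResult` record with per-frame timestamps; the names and fields are illustrative and do not come from any particular benchmark suite.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameResult:
    """Hypothetical log entry for one processed frame."""
    predicted: str     # label the system produced
    expected: str      # ground-truth label
    arrived_at: float  # seconds, when the frame entered the pipeline
    decided_at: float  # seconds, when the decision was emitted


def accuracy(results: List[FrameResult]) -> float:
    """Fraction of decisions that match ground truth."""
    if not results:
        return 0.0
    correct = sum(1 for r in results if r.predicted == r.expected)
    return correct / len(results)


def throughput_fps(results: List[FrameResult]) -> float:
    """Frames processed per second over the whole run."""
    if not results:
        return 0.0
    start = min(r.arrived_at for r in results)
    end = max(r.decided_at for r in results)
    return len(results) / (end - start) if end > start else 0.0


def mean_latency_ms(results: List[FrameResult]) -> float:
    """Average time from input to decision, in milliseconds."""
    if not results:
        return 0.0
    total = sum(r.decided_at - r.arrived_at for r in results)
    return 1000.0 * total / len(results)
```

Reporting all three together matters because a model can score well on accuracy while missing the throughput or latency a live feed requires.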

Design choices about framing matter. A single frame can be sharp yet ambiguous. Individual frames often omit motion cues, while a video sequence gives context such as direction, speed, and interaction. Robust systems therefore fuse features from frames, audio, and metadata. That fusion powers content understanding and unlocks applications of video across security, retail, and sports. For academic context, see methods for qualitative video analysis that show why context matters in interpretation.

Detection and Object Recognition in Videos

Detection forms the foundation of many pipelines. Object detection identifies and localises objects within a frame using a bounding box or segmentation map. Classic detection models include Faster R-CNN and YOLO, and they remain reference points when building systems for real-time operations. Those models run on individual frames: two-stage detectors such as Faster R-CNN propose candidate regions and then score classes, while single-stage detectors such as YOLO predict boxes and classes in one pass. Optimised variants and pruning help meet real-time targets on edge devices.
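Detectors usually emit several overlapping boxes for the same object, so a post-processing step is needed before the boxes are useful. The text does not name that step; a common choice is greedy non-maximum suppression based on intersection over union (IoU). The sketch below assumes boxes in `(x1, y1, x2, y2)` form and an illustrative threshold of 0.5.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: List[Box], scores: List[float],
                        iou_threshold: float = 0.5) -> List[int]:
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap a better-scoring kept box.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept
```

For example, two near-identical boxes around one person collapse to the higher-scoring one, while a distant box around a vehicle is kept.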

Video-specific challenges complicate standard methods. Motion blur, camera movement, lighting changes, and occlusion cause instability across frames. Tracking helps by associating the same object across time, so object tracking improves persistence and identity. For some deployments, combining object detection and tracking gives stable tracks and fewer re-identifications. A detection model must therefore handle both spatial precision and temporal consistency.
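Associating the same object across frames can be illustrated with a very small tracker. This is a sketch under simplifying assumptions, not a production method: it matches each detection greedily to the previous-frame box with the highest IoU, and it drops a track as soon as it goes unmatched, so it does not handle occlusion. The class name `IouTracker` and the threshold are hypothetical.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Greedy frame-to-frame association by overlap."""

    def __init__(self, iou_threshold: float = 0.3) -> None:
        self.iou_threshold = iou_threshold
        self.tracks: Dict[int, Box] = {}  # track ID -> last seen box
        self._next_id = 0

    def update(self, detections: List[Box]) -> List[int]:
        """Return one track ID per detection, in input order."""
        available = dict(self.tracks)  # tracks not yet claimed this frame
        current: Dict[int, Box] = {}
        assigned: List[int] = []
        for det in detections:
            best_id, best_iou = None, self.iou_threshold
            for track_id, box in available.items():
                overlap = iou(det, box)
                if overlap >= best_iou:
                    best_id, best_iou = track_id, overlap
            if best_id is None:
                # No sufficiently overlapping track: start a new identity.
                best_id = self._next_id
                self._next_id += 1
            else:
                del available[best_id]
            current[best_id] = det
            assigned.append(best_id)
        self.tracks = current  # unmatched tracks are dropped immediately
        return assigned
```

Real deployments typically add motion prediction and a grace period for missed detections, which is what reduces identity switches under occlusion.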

Performance in the field matters. CCTV systems with modern analytics can reduce incidents in practice. For instance, a study reported a 51% decrease in crime rates in car parking lots with camera coverage, highlighting tangible benefits when detection is paired with operational responses (source). That statistic emphasises why accurate object detection and stable tracking are crucial for public safety and asset protection.

Practitioners also face constraints on annotation and dataset quality. Annotating frames by drawing bounding boxes remains time-consuming, so semi-automated annotation tools reduce effort. visionplatform.ai supports workflows that let teams improve pre-trained models with site-specific classes and keeps data on-prem for compliance and privacy. Those choices affect model generalisation and the cost of deployment.

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

Action Detection and Video Classification

Action detection extends detection into time. It labels when an action occurs and where. That task requires models that reason over a video segment rather than a single frame. Video classification assigns a label to a whole clip or to segments within it. The difference matters: single-frame classifiers test a still image, while sequence models learn temporal dynamics. Tasks such as action recognition and action detection depend on temporal models like 3D CNNs or LSTM-based architectures that process a video sequence.

3D CNNs extract spatiotemporal features by applying convolutions in both space and time. LSTM or transformer-based sequence models handle long-range dependencies and can aggregate per-frame features into a meaningful summary. Those models solve detection tasks such as "person running" or "suspicious interaction" across frames in a video. For practical testing, sports and surveillance datasets provide diverse action labels and complex interactions to challenge models.
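To make "convolutions in both space and time" concrete, here is a naive single-channel 3D convolution over a clip stored as nested lists indexed `[time][row][column]`. It is a teaching sketch only: real 3D CNNs use learned multi-channel kernels and optimised tensor libraries. Like most deep learning frameworks, it computes a cross-correlation, meaning the kernel is not flipped.

```python
from typing import List

Volume = List[List[List[float]]]  # indexed [time][row][column]


def conv3d_valid(clip: Volume, kernel: Volume) -> Volume:
    """Slide a 3D kernel over a clip without padding ('valid' mode)."""
    t_len, height, width = len(clip), len(clip[0]), len(clip[0][0])
    k_t, k_h, k_w = len(kernel), len(kernel[0]), len(kernel[0][0])
    output: Volume = []
    for t in range(t_len - k_t + 1):
        plane = []
        for y in range(height - k_h + 1):
            row = []
            for x in range(width - k_w + 1):
                acc = 0.0
                # Sum over the temporal and both spatial kernel axes.
                for dt in range(k_t):
                    for dy in range(k_h):
                        for dx in range(k_w):
                            acc += (clip[t + dt][y + dy][x + dx]
                                    * kernel[dt][dy][dx])
                row.append(acc)
            plane.append(row)
        output.append(plane)
    return output


# A hand-written temporal-difference kernel: next frame minus current frame.
TEMPORAL_DIFF: Volume = [[[-1.0]], [[1.0]]]
```

Applied with `TEMPORAL_DIFF`, the output is non-zero only where a pixel changes between consecutive frames, which is the kind of motion cue a single-frame filter cannot see.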

Comparing approaches clarifies trade-offs. A per-frame approach can detect objects and simple poses quickly, yet it misses context such as who initiated contact or whether a video action is ongoing. Sequence models capture dynamics, yet they can increase latency and require more training data. Many systems combine both: a fast per-frame detector provides candidates, and a temporal model refines the decision across a sliding window.
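The combined design can be sketched with a stand-in for the temporal model. Here the per-frame detector is assumed to output a confidence score per frame, and the "temporal model" is simply a moving average over a sliding window; a learned sequence model would replace that average in practice. The function name, window size, and threshold are illustrative.

```python
from collections import deque
from typing import Deque, Iterable, List


def refine_over_window(frame_scores: Iterable[float], window: int = 5,
                       threshold: float = 0.6) -> List[bool]:
    """Flag a frame only when the average score of the last `window`
    frames reaches `threshold`. Frames before the window fills are
    never flagged."""
    recent: Deque[float] = deque(maxlen=window)
    decisions: List[bool] = []
    for score in frame_scores:
        recent.append(score)
        window_full = len(recent) == window
        decisions.append(window_full and sum(recent) / window >= threshold)
    return decisions
```

With a window of three frames, an isolated high score is suppressed while a sustained run triggers a decision. The cost is the latency trade-off described above: the decision arrives a few frames after the action starts.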

Evaluation uses metrics like mean Average Precision for localization, F1 for event detection, and top-1 accuracy for classification. When the goal is operational, additional metrics such as false alarm rate, time-to-detect, and the ability to link detections across cameras matter. These considerations shape real-world deployments, from sports coaching that needs fine-grained feedback to video surveillance where timely alerts reduce response time. Human validation and research on detecting manipulated political speech underline the difficulty of verification and the need for tools that support operators with contextual explanations (study).
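For event detection, F1 and the false alarm rate follow directly from counts of true positives, false positives, and missed events. The sketch below assumes those counts have already been produced by matching alerts against ground truth; the function name and the per-hour normalisation are illustrative choices.

```python
from typing import Dict


def event_metrics(true_pos: int, false_pos: int, false_neg: int,
                  hours_monitored: float) -> Dict[str, float]:
    """Precision, recall, F1, and false alarms per monitored hour."""
    detected = true_pos + false_pos
    actual = true_pos + false_neg
    precision = true_pos / detected if detected else 0.0
    recall = true_pos / actual if actual else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    alarms_per_hour = (false_pos / hours_monitored
                       if hours_monitored > 0 else 0.0)
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_alarms_per_hour": alarms_per_hour,
    }
```

Normalising false alarms by time rather than by frame count keeps the number meaningful to operators, who experience alarms per shift rather than per frame.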

Video Annotation and Processing Techniques

Good data underpins model performance. Video annotation involves labeling objects, actions, and scenes so models can learn. Manual annotation gives high quality, but it scales poorly. Automated tools speed the process by propagating labels across adjacent frames and suggesting candidate bounding box positions. Active learning closes the loop by asking humans to review only uncertain examples.
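Two of the ideas above can be sketched briefly. Label propagation is shown here as linear interpolation of a bounding box between two hand-labelled keyframes, which is one simple way annotation tools fill in the frames between them. Active learning is shown as picking the predictions whose confidence is closest to 0.5. Both function names are hypothetical, and real tools often use tracking or richer uncertainty measures instead.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, ...]  # (x1, y1, x2, y2)


def interpolate_boxes(start_frame: int, start_box: Sequence[float],
                      end_frame: int,
                      end_box: Sequence[float]) -> Dict[int, Box]:
    """Propagate a label between two keyframes by linear interpolation."""
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    boxes: Dict[int, Box] = {}
    for frame in range(start_frame, end_frame + 1):
        t = (frame - start_frame) / span  # 0.0 at start, 1.0 at end
        boxes[frame] = tuple(s + t * (e - s)
                             for s, e in zip(start_box, end_box))
    return boxes


def select_for_review(confidences: List[float], budget: int) -> List[int]:
    """Return indices of the `budget` least certain predictions,
    where certainty is distance of the confidence from 0.5."""
    order = sorted(range(len(confidences)),
                   key=lambda i: abs(confidences[i] - 0.5))
    return order[:budget]
```

An annotator then labels two keyframes instead of every frame, and reviews only the handful of predictions the model is least sure about.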

Pre-processing affects the training pipeline. Typical steps include frame extraction, resizing, normalisation, and augmentation. Frame extraction converts a video file or stream into an ordered sequence of frames. Resizing balances model input size with compute budgets. Normalisation aligns pixel distributions so deep learning networks converge faster. Augmentation simulates variations like brightness shifts or small rotations to increase robustness.
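The resizing, normalisation, and augmentation steps can be sketched on a grayscale frame stored as a nested list of pixel values in the range 0 to 255. This is a dependency-free illustration; a real pipeline would decode frames with a video library and use array operations. The nearest-neighbour resize and the mean and standard deviation of 127.5 are assumptions chosen for simplicity.

```python
from typing import List

Frame = List[List[float]]  # grayscale pixels in [0, 255], indexed [row][col]


def resize_nearest(frame: Frame, out_h: int, out_w: int) -> Frame:
    """Nearest-neighbour resize to out_h x out_w."""
    in_h, in_w = len(frame), len(frame[0])
    return [[frame[r * in_h // out_h][c * in_w // out_w]
             for c in range(out_w)]
            for r in range(out_h)]


def normalise(frame: Frame, mean: float = 127.5,
              std: float = 127.5) -> Frame:
    """Shift and scale pixels; the defaults map [0, 255] to [-1, 1]."""
    return [[(p - mean) / std for p in row] for row in frame]


def shift_brightness(frame: Frame, delta: float) -> Frame:
    """Augmentation: add a constant and clamp to the valid pixel range."""
    return [[min(255.0, max(0.0, p + delta)) for p in row] for row in frame]
```

Augmentation is applied before normalisation so that clamping happens in pixel units, for example `normalise(shift_brightness(frame, 10.0))`.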

Annotation can be task-specific. Dense video captioning combines detection, temporal segmentation, and natural language generation to create summaries. For classic computer vision tasks, annotation and model training focus on bounding box accuracy and class labels. High-quality labels of objects in a video help models learn precise object localization, which improves downstream tasks such as forensic search. For more on practical search in archives, see how forensic search supports investigations (forensic search in airports).

Maintaining labelled corpora is essential for supervised learning. Video dataset curation must reflect the target environment. That is why on-prem solutions, where data never leaves the site, are attractive to organisations with compliance needs. visionplatform.ai offers workflows to improve pre-trained models with site-specific data and to build models from scratch when necessary. This hybrid approach reduces retraining time and improves precision for specific use cases such as people counting and crowd-density analysis (people counting).

Real-Time Video Analysis with AI Models

Real-time requirements shape model design and deployment. In many operational settings, systems must process 30 frames per second to keep up with live feeds. Throughput and latency then become first-order constraints. Lightweight architectures and quantised models help meet performance goals on edge hardware such as NVIDIA Jetson. The trade-off is often between accuracy and speed: optimizing for one can reduce the other.
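The 30 frames per second target translates into a per-frame time budget of roughly 33 ms. The arithmetic below is a back-of-the-envelope sketch; it assumes frames are processed one at a time with no batching, and the function names are illustrative.

```python
import math


def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a target frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def max_streams(per_frame_ms: float, fps: float) -> int:
    """How many feeds one sequential worker could serve at `fps`,
    given the measured processing time for a single frame."""
    if per_frame_ms <= 0:
        raise ValueError("per_frame_ms must be positive")
    return math.floor(frame_budget_ms(fps) / per_frame_ms)
```

For instance, a model that takes 8 ms per frame could keep up with four 30 fps feeds under these assumptions, while a model that takes 40 ms per frame could not keep up with even one.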

Edge inference reduces network usage and supports EU AI Act–aligned architectures that prefer on-prem processing. visionplatform.ai offers on-prem Vision Language Models and agents that reason over local recordings, which keeps raw video in the environment and reduces compliance risk. An AI model designed for edge will often use fewer parameters, aggressive pruning, and mixed-precision arithmetic to reach required frames per second while preserving acceptable accuracy.
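Pruning can be illustrated with its simplest form, magnitude pruning, which zeroes the weights with the smallest absolute values. This is a sketch on a flat list of weights and is not a description of how any specific product prunes its models; practical pipelines usually prune per layer and fine-tune afterwards to recover accuracy.

```python
from typing import List


def prune_by_magnitude(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest
    absolute value, leaving the rest unchanged."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]
```

Zeroed weights only speed up inference when the runtime or hardware can exploit the sparsity, which is one reason pruning is usually combined with the other techniques mentioned above.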

Real-time video analysis also requires robust system design. Processing a raw video feed involves decoding, frame pre-processing, passing frames into an AI pipeline, and producing alarms or textual descriptions. Systems use buffering and asynchronous pipelines to avoid stalls. They also prioritise which feeds to analyse at high fidelity and which to sample at lower rates. For some surveillance scenarios, a detection model flags candidate events, and a second-stage AI verifies and explains them, reducing false alarms and operator overload.
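One way to keep a live pipeline from stalling is a small bounded buffer between the decoder and the model that discards the oldest frame when full, so the model always works on recent footage. The sketch below shows that idea; the drop-oldest policy and the class name `DropOldestBuffer` are assumptions for illustration, and other systems may block or sample instead.

```python
import threading
from collections import deque
from typing import Any, Deque, Optional


class DropOldestBuffer:
    """Thread-safe bounded buffer that discards the oldest frame when full."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._frames: Deque[Any] = deque()
        self._lock = threading.Lock()
        self.dropped = 0  # count of frames discarded to stay current

    def put(self, frame: Any) -> None:
        """Called by the decoder thread; never blocks on a slow consumer."""
        with self._lock:
            if len(self._frames) == self._capacity:
                self._frames.popleft()
                self.dropped += 1
            self._frames.append(frame)

    def get(self) -> Optional[Any]:
        """Called by the inference thread; returns None when empty."""
        with self._lock:
            return self._frames.popleft() if self._frames else None
```

The `dropped` counter is worth exporting as a health metric: a steadily rising value signals that the model cannot keep up with the feed at its current frame rate.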

Operators gain from AI that explains its outputs. For example, VP Agent Reasoning correlates detections with VMS logs and access control, and it provides context for each alert. This reduces time per alarm and supports faster decisions. Real-time does not only mean speed; it also means actionable context when seconds count. For more on real-time detections tied to specific tasks, see intrusion detection solutions that integrate real-time analytics (intrusion detection).

Applications, Insights and Challenges in Video Understanding

Applications of video understanding span security, operations, retail, and sports. In security, systems detect unauthorized access and trigger workflows that guide operator responses. In retail, analytics measure dwell time and customer paths. In sports, coaches get automated highlights and technique feedback. This wide range of applications benefits when models extract features from video data and summarise events for human decision makers.

Insights from video include behaviour patterns, trend detection, and anomaly alerts. Automated systems detect anomalies by learning normal patterns and flagging deviations. Anomaly detection in a production site might look for process deviations or people in restricted zones. The combination of object detection and tracking with reasoning agents helps ensure that alerts carry context rather than raw flags.
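Learning normal patterns and flagging deviations can be shown with a deliberately simple statistical model. The sketch below fits a mean and standard deviation to a single scalar signal, for example people counted in a zone per minute, and flags values more than three standard deviations away. The class name, the choice of signal, and the threshold are all illustrative; deployed systems typically model richer features.

```python
import statistics
from typing import Sequence


class ZScoreAnomalyDetector:
    """Flags values far from the mean of a 'normal' training sample."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.mean = 0.0
        self.std = 0.0

    def fit(self, normal_values: Sequence[float]) -> None:
        """Learn what 'normal' looks like from anomaly-free observations."""
        if not normal_values:
            raise ValueError("need at least one observation")
        self.mean = statistics.mean(normal_values)
        self.std = statistics.pstdev(normal_values)

    def is_anomaly(self, value: float) -> bool:
        """True when the value deviates by more than `threshold` sigmas."""
        if self.std == 0:
            return value != self.mean
        return abs(value - self.mean) / self.std > self.threshold
```

A detector fitted on counts that hover around ten people would pass a reading of 11 and flag a reading of 25.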

Despite progress, challenges in video remain. Deep-fake and manipulation detection is urgent because political speech and news can be targeted by realistic forgeries. Research shows humans struggle to detect hyper-realistic manipulations, which increases the need for algorithmic assistance and robust verification pipelines (see study). Multi-camera fusion, privacy, and dataset bias complicate deployments. Systems must avoid leaking video content and must respect regulations. On-prem AI and transparent configurations help address some of these concerns.

Real-world applications also demand integration. visionplatform.ai bridges detections and operations by exposing events as structured inputs for AI agents, enabling VP Agent Actions to pre-fill incident reports and recommend workflows. That agent-driven approach reduces the operator’s cognitive load and scales monitoring with optional autonomy. For additional operational examples such as PPE detection and vehicle classification, see the PPE detection and vehicle detection and classification pages.

FAQ

What is video understanding and how does it differ from simple detection?

Video understanding refers to extracting semantic meaning, context, and intent from visual sequences. Simple detection focuses on localising and labelling objects within a frame, while understanding connects events across time and explains their significance.

How does AI improve real-time monitoring in control rooms?

AI speeds up detection and reduces manual search by converting visual events into searchable descriptions. AI agents can verify alarms, suggest actions, and even pre-fill reports to support operators.

What are common models used for object detection?

Popular models include Faster R-CNN for accuracy and YOLO for speed. Both provide bounding boxes and class labels, and variants are optimised for edge or cloud deployment.

Why is annotation important for video models?

Annotation provides labelled examples that supervised models use to learn. High-quality annotation of objects and actions improves object localization and reduces false positives during inference.

Can systems detect actions like fighting or loitering?

Yes. Action detection and video classification models identify when an action is being performed. Specialized workflows combine detection with temporal models to flag behaviors such as loitering or aggression.

How do systems handle motion blur and low-light conditions?

Robust pre-processing and data augmentation during training help models generalise. Multi-frame aggregation and sensor fusion also improve performance when individual frames are noisy.

Are there privacy concerns with AI for video?

Yes. Storing and processing video data raises privacy and compliance issues. On-prem AI and transparent logging reduce exposure and help meet regulatory requirements.

What is the role of object tracking in surveillance?

Object tracking links detections across frames to maintain identity and trajectory. This persistence improves forensic search and reduces repeated alerts for the same object.

How does dense video captioning help users?

Dense video captioning generates textual summaries for segments, making archives searchable and shortening investigation time. It bridges raw detections and human-readable descriptions.

What is a practical first step to add AI to existing cameras?

Start by running a lightweight detection model on representative footage to measure accuracy and throughput. Then iteratively improve models with site-specific annotations and integrate reasoning agents for context-aware alerts.