latest news

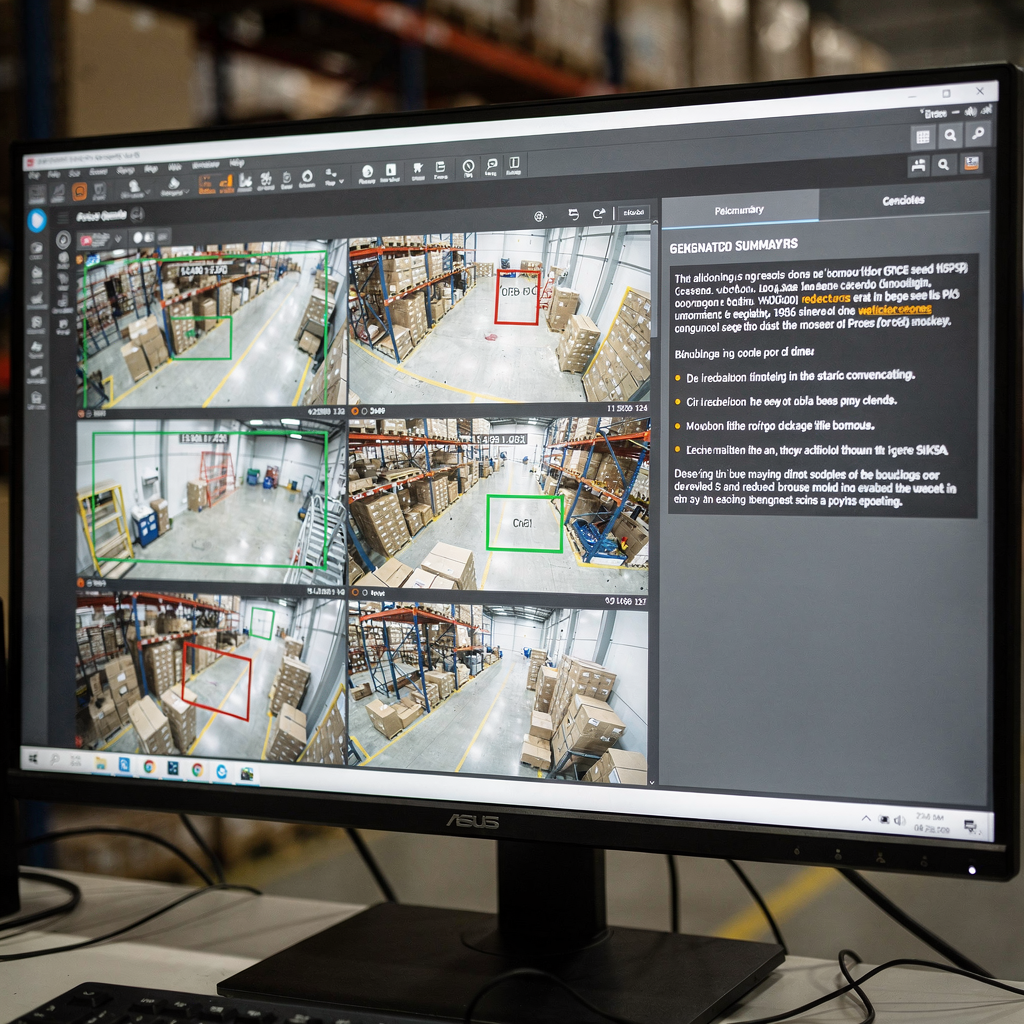

Advanced vision language models for alarm context

VLMs and AI systems: architecture of vision language model for alarms. Vision and AI meet in practical systems that turn raw video into meaning. In this chapter I explain how VLMs fit into AI systems for alarm handling. First, a basic definition helps. A vision language model combines a vision encoder with a language model […]

Vision Language Models for Video Summarization

Understanding the Role of Video in Multimodal AI. Video is the richest sensor for many real-world problems, and it carries both spatial and temporal signals. Visual pixels, motion, and audio combine to form long sequences of frames that require careful handling. Models must therefore capture spatial detail and temporal dynamics. Furthermore, they must […]

Vision language models for event description

How vision language models work: a multimodal AI overview. Vision language models work by bridging visual data and textual reasoning. First, a visual encoder extracts features from images and video frames. Then, a language encoder or decoder maps those features into tokens that a language model can process. Also, this joint process lets a single […]

Vision-language models for incident understanding

VLMs: Role and Capabilities in Incident Understanding. VLMs have grown fast at the intersection of computer vision and natural language. They combine visual and textual signals to support multimodal reasoning. A vision-language model links image features to language tokens so machines can describe incidents. Then, VLMs represent scenes, objects, and actions in […]

Vision-language models for anomaly detection

Understanding anomaly detection. Anomaly detection sits at the heart of many monitoring systems in security, industry, and earth observation. In video surveillance it flags unusual behaviours, in industrial monitoring it highlights failing equipment, and in remote sensing it reveals environmental changes. Traditional methods often focus on single inputs, so they miss context that humans use […]

Vision-language models for access control

Vision-language models: Principles and Capabilities. Vision-language models bring together a vision encoder and language understanding to form a single, multimodal system. First, a vision encoder processes images or video frames and converts them to embeddings. Then, a language model maps text inputs into the same embedding space so that the system can relate images and […]