Category: Industry applications

Vision-language Models for VMS Integration with VLMS

Language model and vision language model: introduction. A language model predicts text. In VMS contexts a language model maps words, phrases, and commands to probabilities and actions. A vision language model adds vision to that capability. It combines visual input with textual reasoning so VMS operators can ask questions and receive human-readable descriptions. This contrast […]

AI vision-language models for surveillance analytics

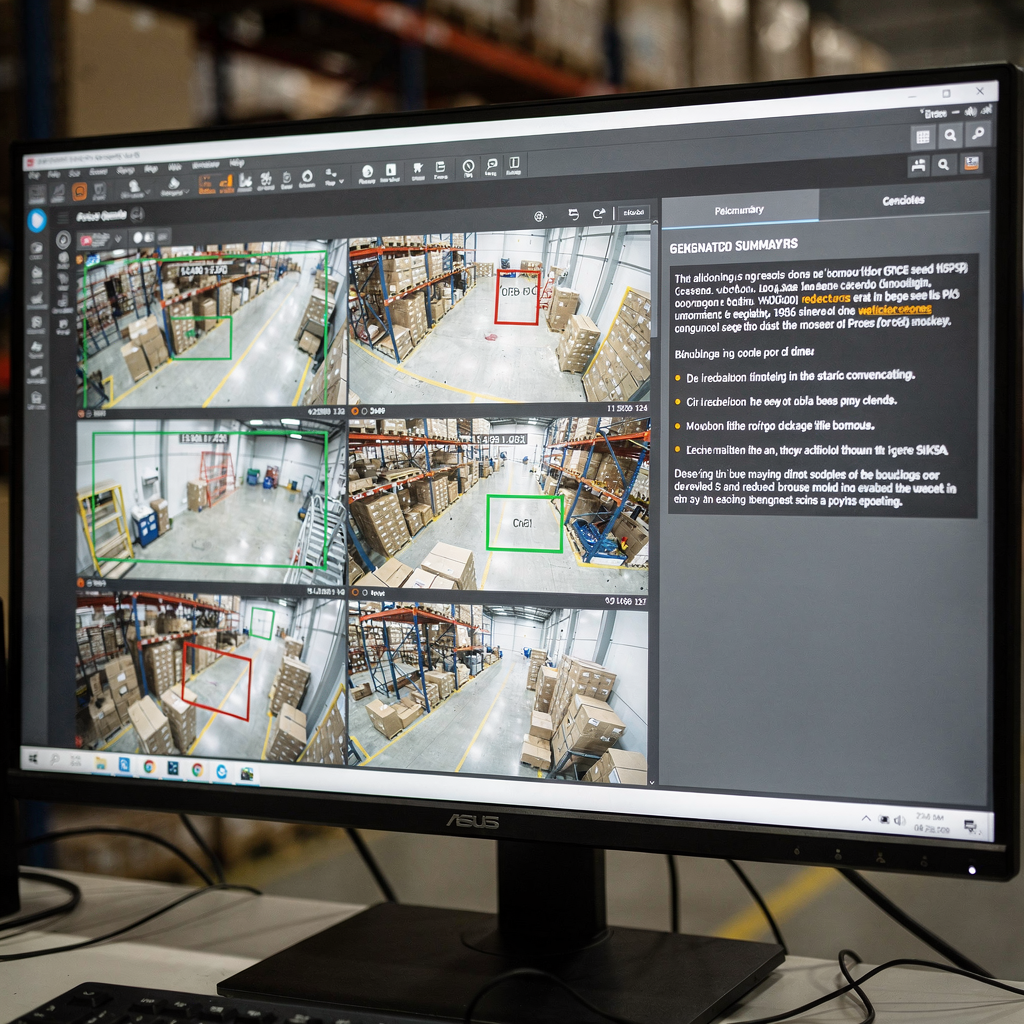

AI systems and agentic AI in video management. AI systems now shape modern video management. First, they ingest video feeds and enrich them with metadata. Next, they help operators decide what matters. In security settings, agentic AI takes those decisions further. Agentic AI can orchestrate workflows, act within predefined permissions, and follow escalation rules. For […]

Vision language models for operator decision support

Language models and VLMs for operator decision support. Language models and VLMs sit at the center of modern decision support for operators in complex environments. First, language models are a class of systems that predict text and follow instructions. Next, VLMs combine visual inputs with text reasoning so a system can interpret images and answer questions. For […]

Advanced vision language models for alarm context

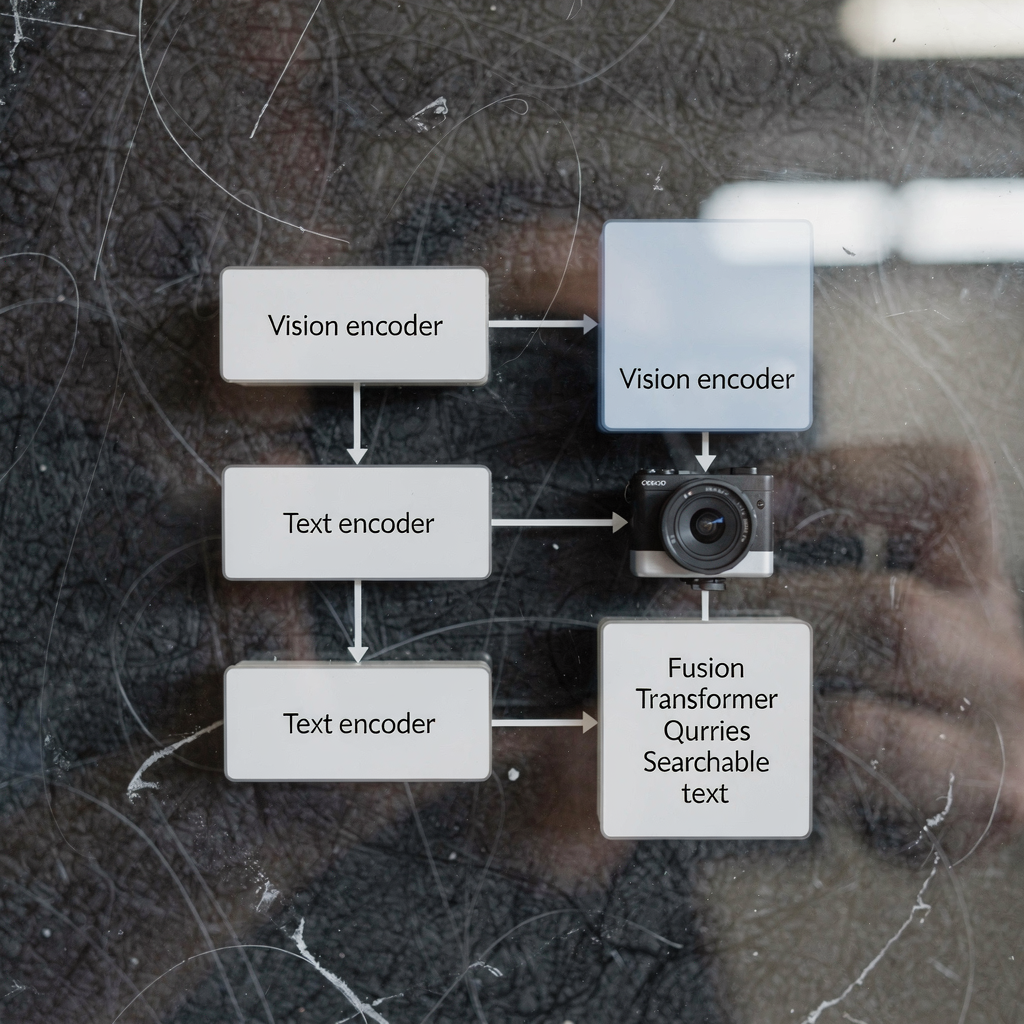

VLMs and AI systems: architecture of a vision language model for alarms. Vision and AI meet in practical systems that turn raw video into meaning. In this chapter I explain how VLMs fit into AI systems for alarm handling. First, a basic definition helps. A vision language model combines a vision encoder with a language model […]

Vision Language Models for Video Summarization

Understanding the Role of video in Multimodal AI. First, video is the richest sensor for many real-world problems. Also, video carries both spatial and temporal signals. Next, visual pixels, motion, and audio combine to form long sequences of frames that require careful handling. Therefore, models must capture spatial detail and temporal dynamics. Furthermore, they must […]

Vision language models for event description

How vision language models work: a multimodal AI overview. Vision language models work by bridging visual data and textual reasoning. First, a visual encoder extracts features from images and video frames. Then, a language encoder or decoder maps those features into tokens that a language model can process. Also, this joint process lets a single […]

Vision-language models for incident understanding

VLMs: Role and Capabilities in Incident Understanding. First, VLMs have grown fast at the intersection of computer vision and natural language. Also, VLMs combine visual and textual signals to create multimodal reasoning. Next, a vision-language model links image features to language tokens so machines can describe incidents. Then, VLMs represent scenes, objects, and actions in […]

Vision-language models for anomaly detection

Understanding anomaly detection. Anomaly detection sits at the heart of many monitoring systems in security, industry, and earth observation. In video surveillance it flags unusual behaviours, in industrial monitoring it highlights failing equipment, and in remote sensing it reveals environmental changes. Traditional methods often focus on single inputs, so they miss context that humans use […]

Vision-language models for access control

Vision-language models: Principles and Capabilities. Vision-language models bring together a vision encoder and language understanding to form a single, multimodal system. First, a vision encoder processes images or video frames and converts them to embeddings. Then, a language model maps text inputs into the same embedding space so that the system can relate images and […]

AI-driven vision language models for perimeter security

AI architecture: combining computer vision and language models for perimeter security. AI architectures that combine computer vision and language models change how teams protect perimeters. In this chapter I describe a core architecture that turns raw video into context and action. First, camera streams feed CV modules that interpret each frame at the pixel level. […]