Understanding multimodal embeddings for semantic search in video analysis

First, consider how semantic video search differs from legacy keyword-based video search. Semantic video search interprets meaning from scenes, objects, and actions. Keyword methods, in contrast, rely on tags, filenames, or sparse metadata, so they miss context. Next, consider how multimodal embeddings change that dynamic. Multimodal embeddings map visual frames, audio tracks, and textual transcripts into a shared vector space. A single query can then match frames, clips, and captions by semantic similarity. As a result, retrieval becomes about meaning, not just words.

This approach also improves precision. Academic surveys report precision gains of 30–40% for semantic video search over keyword baselines (Semantic Feature – an overview | ScienceDirect Topics). In large-scale tests, mean average precision (mAP) rose from 0.55 to 0.78 on ten thousand hours of footage, a clear benefit for video analysis at scale (New AI Tool Shows the Power of Successive Search). Teams that implement multimodal embeddings can therefore expect faster, more accurate search results and less time spent finding relevant segments.

Importantly, multimodal embeddings also support natural language querying. Users type a query in plain language, and the system returns the video segments where that semantic content appears. This enables content discovery across entire video libraries, using queries phrased the way people naturally describe events. For surveillance and operations, visionplatform.ai uses an on-prem Vision Language Model to convert video into textual descriptions for exactly this purpose. For example, forensic search in airports benefits from this approach because investigators can search descriptions rather than camera IDs or raw metadata (forensic search in airports). Finally, multimodal embeddings can reduce false positives in detection pipelines, because similarity is judged in an embedding space that captures semantic meaning and contextual relationships.

Generating embeddings and vector representations with an embedding model and AI

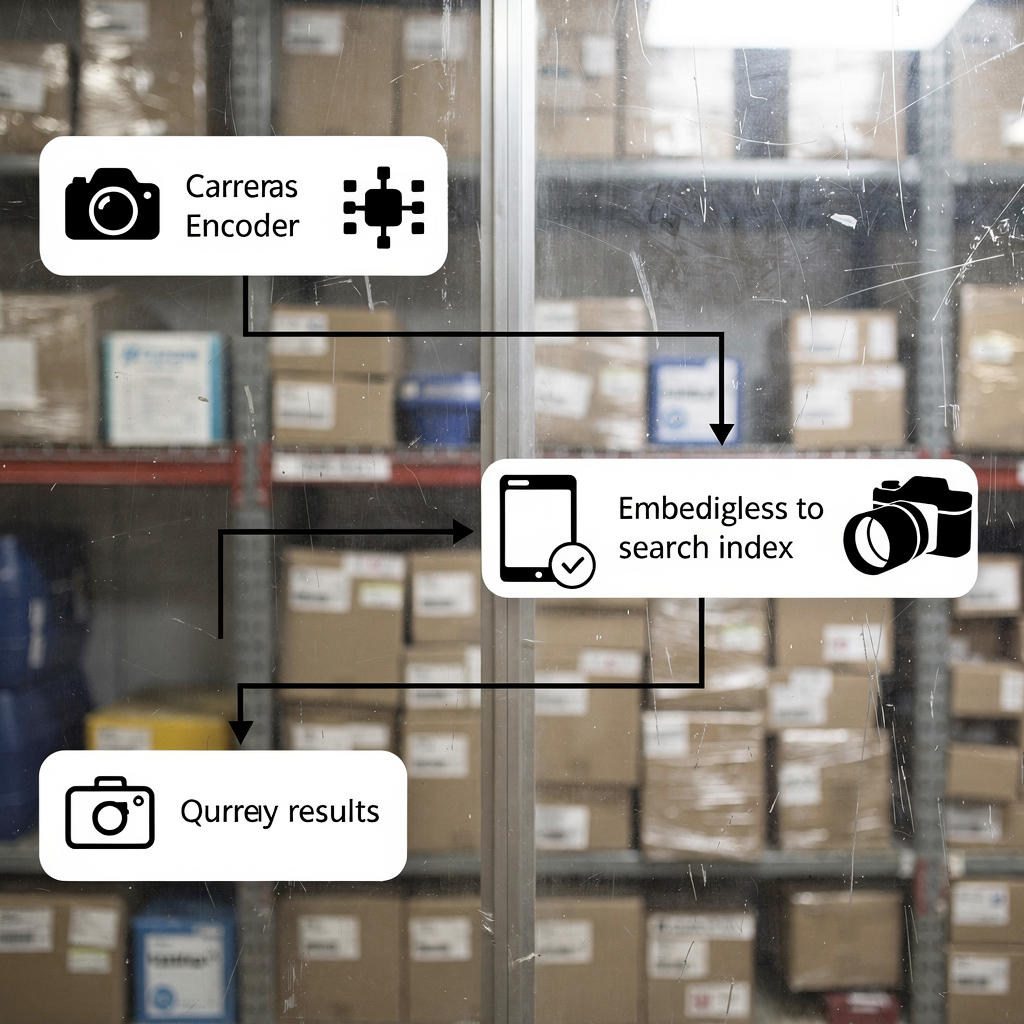

First, consider how embeddings are generated from video. Video files are first split into frames and audio segments. Then, frames are passed through a convolutional or transformer visual encoder. Next, the audio is transcribed, and its spectrograms are encoded by an audio encoder. The transcripts are also converted into text embedding vectors. Finally, the embeddings from each modality are fused into a single multimodal vector that represents the video segment. This process allows a text query to be matched against visual and audio content by similarity.
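
The segmentation and fusion steps can be sketched in plain Python. This is a minimal sketch under stated assumptions: clips are fixed-length overlapping windows (the 4 s window and 2 s stride are hypothetical defaults), each modality encoder has already projected its output to the same dimension, and fusion is a weighted average of unit vectors (late fusion). Concatenation or a learned fusion layer are equally valid choices, and none of the names below belong to a specific library.

```python
import math
from typing import Dict, List, Sequence, Tuple


def segment_windows(duration_s: float, window_s: float = 4.0, stride_s: float = 2.0) -> List[Tuple[float, float]]:
    """Split a video timeline into overlapping (start, end) windows, in seconds."""
    windows: List[Tuple[float, float]] = []
    start = 0.0
    while start < duration_s:
        end = min(start + window_s, duration_s)
        windows.append((start, end))
        if end >= duration_s:  # the last window already reaches the end of the video
            break
        start += stride_s
    return windows


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def fuse_modalities(vectors: Dict[str, Sequence[float]], weights: Dict[str, float]) -> List[float]:
    """Late fusion: weighted sum of per-modality unit vectors, re-normalized to unit length.

    Assumes every modality was already projected into the same shared dimension.
    """
    dims = {len(v) for v in vectors.values()}
    if len(dims) != 1:
        raise ValueError("all modality embeddings must share one projected dimension")
    fused = [0.0] * dims.pop()
    for name, vec in vectors.items():
        weight = weights.get(name, 0.0)  # modalities without a weight are ignored
        for i, x in enumerate(l2_normalize(vec)):
            fused[i] += weight * x
    return l2_normalize(fused)
```

Weighting lets a deployment favor, for example, visual evidence over transcripts for silent CCTV footage; the weights would need tuning against real queries.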

Second, the vectors are indexed for fast similarity search. They are stored in an index such as Faiss or an OpenSearch vector index for k-NN lookup. Indexing creates compact, searchable representations, so queries can return matching video segments within milliseconds. In practice, an index runs either exact (brute-force) nearest-neighbor search or approximate nearest-neighbor (ANN) search; ANN gives up a little recall for much lower latency, so systems must balance recall against latency. Successive search algorithms go further and refine results iteratively, using prior hits to improve retrieval, as shown in benchmark research (New AI Tool Shows the Power of Successive Search).
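
A minimal sketch of the exact (brute-force) case, plus an iterative refinement step, in pure Python. The refinement is classic Rocchio-style pseudo-relevance feedback, swapped in to illustrate the idea of reusing prior hits; it is not the algorithm from the cited successive-search research. `FlatIndex` and `refine_query` are hypothetical names, and `alpha` (how much of the original query to keep each round) is an assumed parameter. Faiss or OpenSearch would replace the linear scan at production scale.

```python
import heapq
import math
from typing import List, Sequence, Tuple


def _unit(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so that a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class FlatIndex:
    """Exact k-NN index: every query is scored against every stored vector."""

    def __init__(self, dim: int) -> None:
        self.dim = dim
        self.ids: List[str] = []
        self.vecs: List[List[float]] = []

    def add(self, item_id: str, vec: Sequence[float]) -> None:
        if len(vec) != self.dim:
            raise ValueError(f"expected dimension {self.dim}, got {len(vec)}")
        self.ids.append(item_id)
        self.vecs.append(_unit(vec))

    def search(self, query: Sequence[float], k: int) -> List[Tuple[str, float]]:
        q = _unit(query)
        scored = ((sum(a * b for a, b in zip(q, v)), item_id) for item_id, v in zip(self.ids, self.vecs))
        # heapq.nlargest keeps only the k best (score, id) pairs, highest score first.
        return [(item_id, score) for score, item_id in heapq.nlargest(k, scored)]


def refine_query(index: FlatIndex, query: Sequence[float], k: int,
                 rounds: int = 2, alpha: float = 0.7) -> List[Tuple[str, float]]:
    """Pseudo-relevance feedback: move the query toward the centroid of its own top-k hits."""
    q = _unit(query)
    for _ in range(rounds):
        hits = index.search(q, k)
        if not hits:
            return []
        hit_ids = {item_id for item_id, _ in hits}
        hit_vecs = [v for item_id, v in zip(index.ids, index.vecs) if item_id in hit_ids]
        centroid = [sum(col) / len(hit_vecs) for col in zip(*hit_vecs)]
        q = _unit([alpha * a + (1.0 - alpha) * c for a, c in zip(q, centroid)])
    return index.search(q, k)
```

Because every stored vector is scored, this index has perfect recall but linear query cost, which is exactly the cost that ANN indexes avoid.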

Third, transformer models learn context that improves retrieval. Transformers capture long-range dependencies across frames and words, and they learn to encode actions, scene changes, and actor intent in the embedding space. Consequently, a natural language query returns relevant video segments even when exact keywords are missing. This is crucial for intelligent video and automated investigations. For surveillance teams using visionplatform.ai, the Vision Language Model turns raw detections into searchable descriptions, and AI agents reason over those embeddings to assist operators. In short, generate embeddings, build a vector index, and then run similarity matching to implement robust semantic search and retrieval.
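
Transformers weigh context through attention. The sketch below is not a transformer: it is a single, parameter-free attention pooling step, included only to show why relevance-weighted aggregation matters for video. With plain mean pooling, a short event inside a long clip is diluted; weighting frames by their similarity to the query keeps it visible. The function names and the temperature of 0.1 are assumptions for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax; a lower temperature gives sharper weights."""
    top = max(scores)
    exps = [math.exp((s - top) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(query: Sequence[float], frames: Sequence[Sequence[float]],
                   temperature: float = 0.1) -> Tuple[List[float], List[float]]:
    """Pool frame embeddings, weighting each frame by its dot-product similarity to the query."""
    scores = [sum(q * f for q, f in zip(query, frame)) for frame in frames]
    weights = softmax(scores, temperature)
    dim = len(frames[0])
    pooled = [sum(w * frame[i] for w, frame in zip(weights, frames)) for i in range(dim)]
    return pooled, weights


def mean_pool(frames: Sequence[Sequence[float]]) -> List[float]:
    """Baseline: every frame contributes equally, so short events get diluted."""
    return [sum(col) / len(frames) for col in zip(*frames)]
```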

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

Building an embeddings model with open source tools on AWS and Amazon SageMaker

First, compare libraries and tools. Use Faiss for high-performance ANN indexing and Hugging Face for model access. Faiss offers GPU-accelerated k-NN search and flexible vector quantization. Hugging Face provides pretrained transformers that can be fine-tuned for video tasks. Open source tooling also supports reproducible pipelines and avoids vendor lock-in. For teams that prefer managed services, AWS provides infrastructure and MLOps integrations that simplify training and deployment.
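
Faiss's inverted-file indexes (for example `IndexIVFFlat`) partition vectors into `nlist` clusters and scan only the `nprobe` clusters nearest to the query. The pure-Python sketch below mimics that idea to show where the recall/latency trade-off comes from; it is a toy reimplementation with a simple k-means, not Faiss's code or API, and `IVFIndex` is a hypothetical name.

```python
import random
from typing import Hashable, List, Sequence, Tuple


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: Sequence[Sequence[float]], k: int, iters: int = 10, seed: int = 0) -> List[List[float]]:
    """Plain Lloyd's k-means; an empty cluster simply keeps its previous centroid."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(list(points), k)]
    for _ in range(iters):
        buckets: List[List[Sequence[float]]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            buckets[nearest].append(p)
        for c, bucket in enumerate(buckets):
            if bucket:
                centroids[c] = [sum(col) / len(bucket) for col in zip(*bucket)]
    return centroids


class IVFIndex:
    """Toy inverted-file index: each vector lives in the list of its nearest coarse centroid."""

    def __init__(self, train_vectors: Sequence[Sequence[float]], nlist: int, seed: int = 0) -> None:
        self.centroids = kmeans(train_vectors, nlist, seed=seed)
        self.lists: List[List[Tuple[Hashable, List[float]]]] = [[] for _ in range(nlist)]

    def _nearest_lists(self, vec: Sequence[float], n: int) -> List[int]:
        order = sorted(range(len(self.centroids)), key=lambda c: sq_dist(vec, self.centroids[c]))
        return order[:n]

    def add(self, item_id: Hashable, vec: Sequence[float]) -> None:
        self.lists[self._nearest_lists(vec, 1)[0]].append((item_id, list(vec)))

    def search(self, query: Sequence[float], k: int, nprobe: int = 1) -> List[Hashable]:
        # Only vectors in the nprobe closest lists are compared against the query.
        candidates = [entry for c in self._nearest_lists(query, nprobe) for entry in self.lists[c]]
        candidates.sort(key=lambda entry: sq_dist(query, entry[1]))
        return [item_id for item_id, _ in candidates[:k]]
```

Raising `nprobe` toward `nlist` scans more lists and converges on the exact result; lowering it scans fewer vectors per query at some cost in recall.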

Second, plan the training pipeline on Amazon EC2 and Amazon SageMaker. Start with data preparation in Amazon S3, where the video data and metadata live. Then, spin up EC2 GPU instances for large-scale pretraining or fine-tuning. Alternatively, use Amazon SageMaker for distributed training with managed spot instances and built-in hyperparameter tuning. SageMaker Pipelines can automate data preparation, training, and evaluation as repeatable, versioned steps. After training, export the embeddings model and register its artifacts, for example in SageMaker Model Registry. This step supports model governance and reproducibility. For a managed inference route, deploy models on Amazon SageMaker endpoints and autoscale them behind an API gateway.
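
A managed spot training job can be described as a request for the SageMaker `CreateTrainingJob` API. The sketch below only builds the request dictionary; the job name, image URI, role ARN, S3 paths, instance type, and time limits are hypothetical placeholders. Spot training requires `MaxWaitTimeInSeconds` to be at least `MaxRuntimeInSeconds`, and a `CheckpointConfig` lets an interrupted job resume from saved checkpoints.

```python
from typing import Any, Dict


def build_training_job_request(*, job_name: str, image_uri: str, role_arn: str,
                               train_s3_uri: str, output_s3_uri: str, checkpoint_s3_uri: str,
                               instance_type: str = "ml.g5.2xlarge", instance_count: int = 1,
                               max_runtime_s: int = 8 * 3600, max_wait_s: int = 12 * 3600) -> Dict[str, Any]:
    """Request body for a managed spot training job (all values are hypothetical placeholders)."""
    if max_wait_s < max_runtime_s:
        raise ValueError("MaxWaitTimeInSeconds must be >= MaxRuntimeInSeconds for spot training")
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {"InstanceType": instance_type, "InstanceCount": instance_count, "VolumeSizeInGB": 100},
        "EnableManagedSpotTraining": True,
        # MaxWait covers both time spent waiting for spot capacity and actual training time.
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_s, "MaxWaitTimeInSeconds": max_wait_s},
        # The training code must write checkpoints to LocalPath for resumption to work.
        "CheckpointConfig": {"S3Uri": checkpoint_s3_uri, "LocalPath": "/opt/ml/checkpoints"},
    }
```

With boto3, the dictionary could be passed as `boto3.client("sagemaker").create_training_job(**request)`.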

Third, address endpoint deployment and autoscaling. Deploy embeddings model containers on SageMaker endpoints or on Amazon ECS with GPU-backed instances. Configure autoscaling rules based on request latency and CPU/GPU utilization. Use model monitoring to detect drift and trigger retraining jobs. For tight compliance and EU AI Act alignment, many teams keep models on-prem or use hybrid deployments. Visionplatform.ai supports on-prem Vision Language Model deployment, so video, models, and reasoning remain inside the customer environment. Finally, weigh cost against throughput, and choose training schedules that minimize spot interruptions while maximizing model quality; checkpointing lets interrupted spot jobs resume instead of restarting.
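
Endpoint autoscaling on SageMaker is configured through Application Auto Scaling. The sketch below builds the two request dictionaries for a target-tracking policy, assuming a hypothetical endpoint name, the variant name `AllTraffic`, and illustrative capacity, target, and cooldown values. It scales on the predefined `SageMakerVariantInvocationsPerInstance` metric; scaling on latency or GPU utilization would need a customized CloudWatch metric specification instead.

```python
from typing import Any, Dict, Tuple


def build_scaling_requests(endpoint_name: str, variant_name: str = "AllTraffic",
                           min_capacity: int = 1, max_capacity: int = 4,
                           target_invocations_per_instance: float = 100.0) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Return (register_scalable_target, put_scaling_policy) request bodies; values are illustrative."""
    if not 1 <= min_capacity <= max_capacity:
        raise ValueError("require 1 <= min_capacity <= max_capacity")
    resource_id = f"endpoint/{endpoint_name}/variant/{variant_name}"
    dimension = "sagemaker:variant:DesiredInstanceCount"
    register = {
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_capacity,
        "MaxCapacity": max_capacity,
    }
    policy = {
        "PolicyName": f"{endpoint_name}-invocations-target",
        "ServiceNamespace": "sagemaker",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_invocations_per_instance,
            "PredefinedMetricSpecification": {"PredefinedMetricType": "SageMakerVariantInvocationsPerInstance"},
            "ScaleInCooldown": 300,  # scale in slowly to avoid flapping
            "ScaleOutCooldown": 60,  # scale out quickly under load
        },
    }
    return register, policy
```

With boto3, pass them to `register_scalable_target(**register)` and then `put_scaling_policy(**policy)` on an `application-autoscaling` client.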

Embedding and indexing for real-time multimodal search in OpenSearch Serverless

First, embed video features and store them with their metadata in OpenSearch. Extract visual and textual embeddings per timestamped clip. Then, store the vectors alongside camera metadata, camera IDs, and timestamps in an OpenSearch vector index. This keeps vectors and metadata in one place and makes retrieval of relevant video segments straightforward. Use a single embedding size throughout: a k-NN vector field is mapped with a fixed dimension, and every vector indexed into it must match that dimension. In practice, engineers store both raw metadata and vectors to support hybrid ranking that weighs textual relevance and vector similarity.
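
An index for timestamped clips might be created with a mapping like the one sketched below, plus a helper that validates and normalizes each clip document. Assumptions: a 512-dimensional embedding (hypothetical), the `faiss` engine with HNSW, and field names such as `camera_id` and `zone` chosen for illustration. The helper L2-normalizes each vector because, for unit vectors, the squared L2 distance equals 2 - 2 cos(a, b), so an `l2` index ranks results in cosine order. Check the k-NN documentation for the engines and space types your OpenSearch version supports.

```python
import math
from typing import Any, Dict, Sequence

EMBEDDING_DIM = 512  # hypothetical; must match the embedding model's output size


def index_body(dim: int = EMBEDDING_DIM) -> Dict[str, Any]:
    """Index settings and mapping for clip vectors plus searchable metadata."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "embedding": {
                    "type": "knn_vector",
                    "dimension": dim,
                    "method": {"name": "hnsw", "engine": "faiss", "space_type": "l2"},
                },
                "camera_id": {"type": "keyword"},
                "zone": {"type": "keyword"},
                "start_time": {"type": "date"},
                "end_time": {"type": "date"},
                "description": {"type": "text"},
            }
        },
    }


def clip_document(embedding: Sequence[float], *, camera_id: str, zone: str, start_time: str,
                  end_time: str, description: str, dim: int = EMBEDDING_DIM) -> Dict[str, Any]:
    """Validate the dimension, L2-normalize the vector, and attach clip metadata."""
    if len(embedding) != dim:
        raise ValueError(f"expected {dim} dimensions, got {len(embedding)}")
    norm = math.sqrt(sum(x * x for x in embedding))
    if norm == 0.0:
        raise ValueError("cannot index a zero vector")
    return {
        "embedding": [x / norm for x in embedding],
        "camera_id": camera_id,
        "zone": zone,
        "start_time": start_time,  # ISO 8601 strings map cleanly to the date type
        "end_time": end_time,
        "description": description,
    }
```

With the `opensearch-py` client, the body could be passed to `client.indices.create(index="video-clips", body=index_body())`; OpenSearch Serverless additionally requires AWS SigV4 request signing.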

Second, configure real-time ingestion and vector search in a serverless environment. Amazon OpenSearch Serverless supports the bulk API for ingestion and provides near real-time indexing. Use Lambda or containerized consumers to push embeddings from live encoders into the index. Queries from operator consoles can then run k-NN vector search with metadata filters and Boolean constraints. This architecture supports low-latency forensic search and automated alerts. It also supports mixed queries, where a text query is combined with metadata filters such as camera zone or time window. For surveillance applications, these capabilities speed investigations and reduce time to evidence.
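
Bulk ingestion and a filtered k-NN query can be sketched as request bodies. The bulk body is newline-delimited JSON (an action line followed by a document line, with a trailing newline), and the query places a Boolean filter inside the `knn` clause so that only clips from the chosen zones and time window are considered. The index name, field names, and example values are hypothetical, and filtering inside the `knn` clause depends on the engine and OpenSearch version, so confirm it against the k-NN documentation.

```python
import json
from typing import Any, Dict, Iterable, List


def bulk_ndjson(index_name: str, docs: Iterable[Dict[str, Any]]) -> str:
    """Build a bulk request body: one action line plus one source line per document."""
    lines: List[str] = []
    for doc in docs:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(doc))
    if not lines:
        raise ValueError("a bulk request needs at least one document")
    return "\n".join(lines) + "\n"  # the bulk API expects a trailing newline


def filtered_knn_query(query_vector: List[float], k: int, zones: List[str],
                       start_time: str, end_time: str) -> Dict[str, Any]:
    """k-NN query restricted to the given camera zones and clip start-time window."""
    return {
        "size": k,
        "query": {
            "knn": {
                "embedding": {
                    "vector": query_vector,
                    "k": k,
                    "filter": {
                        "bool": {
                            "filter": [
                                {"terms": {"zone": zones}},
                                {"range": {"start_time": {"gte": start_time, "lte": end_time}}},
                            ]
                        }
                    },
                }
            }
        },
        "_source": {"excludes": ["embedding"]},  # return metadata, not the raw vector
    }
```

`opensearch-py` accepts these as `client.bulk(body=...)` and `client.search(index=..., body=...)`. The query vector should be normalized the same way as the indexed vectors.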

Third, consider latency benchmarks and cost-efficiency metrics. In benchmarks, well-optimized vector pipelines can keep median query latency under 200 ms at moderate scale. Semantic video search has also been reported to reduce search times by roughly 25%, which directly cuts operator workload (Build Agentic Analytics with Semantic Views – Snowflake). Serverless options further reduce operational overhead and scale elastically with query load. For teams that need full control, hybrid setups combine local encoders with Amazon OpenSearch Serverless for indexing. The result is fast, cost-effective search and analysis across large video libraries.
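
A median target says little about tail latency, so it helps to report both. This sketch computes nearest-rank percentiles from measured query latencies and checks the median against a budget; the 200 ms default mirrors the figure quoted in the benchmarks, and the function names are hypothetical.

```python
import math
from typing import Dict, Sequence, Union


def nearest_rank_percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one latency sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def latency_report(samples_ms: Sequence[float], median_budget_ms: float = 200.0) -> Dict[str, Union[float, bool]]:
    """Summarize median and tail latency, and flag whether the median meets the budget."""
    p50 = nearest_rank_percentile(samples_ms, 50)
    p95 = nearest_rank_percentile(samples_ms, 95)
    return {"p50_ms": p50, "p95_ms": p95, "median_within_budget": p50 <= median_budget_ms}
```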

Use case: AI-driven video analysis and semantic search for surveillance and media

First, consider a surveillance scenario. An operator needs to find every instance of “person loitering near gate after hours.” The system ingests video and runs detection models to produce events. Then, a Vision Language Model converts the detections into textual descriptions and embeddings. Finally, a single text query returns the timestamps and video segments that match the behavior. This workflow reduces manual scrubbing and supports evidence collection. For airports and secure sites, features such as loitering detection and intrusion detection connect naturally to broader incident workflows (loitering detection in airports; intrusion detection in airports).
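
Search returns per-clip hits, but investigators usually want continuous segments. A minimal sketch, assuming each hit is a hypothetical `(camera_id, start_s, end_s, score)` tuple: overlapping or nearly adjacent clips from the same camera are merged into one segment that keeps the best score. The 2-second gap tolerance is an assumed default.

```python
from typing import List, Tuple

Hit = Tuple[str, float, float, float]  # (camera_id, start_s, end_s, score)


def merge_hits(hits: List[Hit], max_gap_s: float = 2.0) -> List[Hit]:
    """Merge overlapping or nearly adjacent clip hits from the same camera into segments."""
    merged: List[Hit] = []
    # Sorting groups hits by camera, then orders them by start time.
    for camera_id, start, end, score in sorted(hits):
        if merged and merged[-1][0] == camera_id and start - merged[-1][2] <= max_gap_s:
            _, seg_start, seg_end, seg_score = merged[-1]
            merged[-1] = (camera_id, seg_start, max(seg_end, end), max(seg_score, score))
        else:
            merged.append((camera_id, start, end, score))
    return merged
```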

Second, consider media production. Editors search across hours of rushes to find a specific gesture or shot. Semantic video search surfaces the right clips without relying on imperfect metadata. In trials, teams reported search-time reductions of about 25% (Build Agentic Analytics with Semantic Views – Snowflake). As a result, production schedules compress and creative decisions come faster. Editors also combine text queries with visual similarity search to find matching B-roll.
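
One common way to combine a text ranking with a visual-similarity ranking is reciprocal rank fusion (RRF), which scores each item by the sum of 1/(k + rank) across rankings. It is swapped in here as an illustration, not as the method the cited trials used; k = 60 is the value conventionally used with RRF, and the clip IDs in the usage are made up.

```python
from typing import Dict, List, Sequence


def reciprocal_rank_fusion(rankings: Sequence[Sequence[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of clip IDs; items ranked high in many lists rise to the top."""
    scores: Dict[str, float] = {}
    for ranking in rankings:
        for rank, clip_id in enumerate(ranking, start=1):
            scores[clip_id] = scores.get(clip_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by clip ID for a stable order.
    return sorted(scores, key=lambda clip_id: (-scores[clip_id], clip_id))
```

RRF needs only ranks, not comparable scores, which suits mixing a text-relevance score with a vector-similarity score that live on different scales.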

Third, quantify business impact. Use mean average precision to measure retrieval quality. Benchmarks showed mAP improvements from 0.55 to 0.78 on large video datasets, indicating more accurate retrieval of relevant video segments (New AI Tool Shows the Power of Successive Search). Also, semantic search systems reduce the time spent per investigation and improve user satisfaction. For practitioners, that translates to lower labor costs and faster incident resolution. visionplatform.ai packages these capabilities into an AI-assisted control room so teams can find relevant video quickly, verify alarms, and act with confidence.
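
Mean average precision can be computed from ranked results and relevance judgments as sketched below. Average precision here sums the precision at each rank where a relevant clip appears and divides by the total number of relevant clips, so relevant clips that are never retrieved lower the score. The function names are hypothetical, and query and clip IDs in any usage are placeholders.

```python
from typing import Dict, Sequence, Set


def average_precision(ranked_ids: Sequence[str], relevant: Set[str]) -> float:
    """AP for one query: mean of precision@rank over the ranks of relevant items."""
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, clip_id in enumerate(ranked_ids, start=1):
        if clip_id in relevant:
            hits += 1
            precision_sum += hits / rank
    return precision_sum / len(relevant)


def mean_average_precision(results: Dict[str, Sequence[str]], qrels: Dict[str, Set[str]]) -> float:
    """mAP over all judged queries; a query with no results contributes AP = 0."""
    if not qrels:
        raise ValueError("need relevance judgments for at least one query")
    aps = [average_precision(results.get(q, []), rel) for q, rel in qrels.items()]
    return sum(aps) / len(aps)
```

For scale, the reported change from 0.55 to 0.78 is an absolute gain of 0.23, or a relative gain of about 42% (0.23 / 0.55 ≈ 0.418).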

Deployment workflow with GitHub: use multimodal artificial intelligence at scale

First, define a CI/CD workflow for models and indexes. Use GitHub for code and model versioning. Store training pipelines, pre-processing scripts, and tagging logic in repositories. Then, implement automated tests that validate embedding quality and index consistency. Next, build GitHub Actions workflows that trigger training runs, model packaging, and container builds. Also, create a registry for embeddings model artifacts and dataset snapshots to ensure reproducibility. This approach supports auditability and controlled rollout of new models.
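
Embedding-quality and index-consistency checks can run as ordinary unit tests in CI. A minimal sketch, assuming the pipeline emits unit-length vectors of a known dimension and that the set of indexed document IDs can be listed; the function names and tolerance are hypothetical.

```python
import math
from typing import Dict, Iterable, List, Sequence


def check_embeddings(embeddings: Dict[str, Sequence[float]], dim: int, norm_tol: float = 1e-3) -> List[str]:
    """Return human-readable problems: wrong dimension, NaN/inf values, or non-unit norm."""
    problems: List[str] = []
    for clip_id, vec in embeddings.items():
        if len(vec) != dim:
            problems.append(f"{clip_id}: dimension {len(vec)} != {dim}")
            continue
        if not all(math.isfinite(x) for x in vec):
            problems.append(f"{clip_id}: non-finite value")
            continue
        norm = math.sqrt(sum(x * x for x in vec))
        if abs(norm - 1.0) > norm_tol:
            problems.append(f"{clip_id}: norm {norm:.4f} is not unit length")
    return problems


def check_index_consistency(embedding_ids: Iterable[str], indexed_ids: Iterable[str]) -> Dict[str, List[str]]:
    """Compare the IDs the pipeline produced with the IDs actually present in the index."""
    produced, indexed = set(embedding_ids), set(indexed_ids)
    return {
        "missing_from_index": sorted(produced - indexed),
        "orphaned_in_index": sorted(indexed - produced),
    }
```

A pytest test could assert that `check_embeddings(...)` returns an empty list and that both lists from `check_index_consistency(...)` are empty, so any violation fails the GitHub Actions job.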

Second, integrate OpenSearch Serverless deployments via Terraform or AWS CDK. Use IaC to provision OpenSearch Serverless collections, their encryption, network, and data access policies, and the ingestion pipelines. Then, wire deployment pipelines to push index mappings and lifecycle policies automatically. For environments that need strong governance, add manual approval gates in GitHub Actions so index schema changes are reviewed before they deploy. Additionally, include automated benchmarks that run after deployment to track latency and mAP on a validation dataset.
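
The post-deployment benchmark can end in a simple regression gate. This sketch compares a candidate's median latency and mAP with a baseline; the 200 ms budget and the allowed mAP drop of 0.02 are hypothetical thresholds, and `BenchmarkResult` is an illustrative type.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BenchmarkResult:
    median_latency_ms: float
    map_score: float


def regression_gate(baseline: BenchmarkResult, candidate: BenchmarkResult,
                    median_budget_ms: float = 200.0, max_map_drop: float = 0.02) -> List[str]:
    """Return a list of failure messages; an empty list means the rollout may proceed."""
    failures: List[str] = []
    if candidate.median_latency_ms > median_budget_ms:
        failures.append(f"median latency {candidate.median_latency_ms:.0f} ms exceeds {median_budget_ms:.0f} ms budget")
    drop = baseline.map_score - candidate.map_score
    if drop > max_map_drop:
        failures.append(f"mAP dropped by {drop:.3f} (allowed {max_map_drop:.3f})")
    return failures
```

In a GitHub Actions step, a script could print the failures and call `sys.exit(1)` when the list is non-empty; a non-zero exit code fails the step and blocks the rollout.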

Third, plan monitoring and future enhancements for the multimodal pipelines. Monitor query latency, index size, and model drift. Also, track the number of embeddings generated per hour, and check that every embedding has the expected dimension. Use these metrics to trigger retraining when performance drops. For continuous improvement, incorporate active learning, where operators label false positives and that data feeds back into model updates. visionplatform.ai supports this kind of loop by exposing VMS data and event streams for structured reasoning and retraining. Finally, plan future enhancements such as integration with Amazon Bedrock for managed model hosting, or with Amazon Titan Multimodal Embeddings for fast prototyping of multimodal embedding models on AWS. These integrations make it easier to implement video search at scale while keeping control over sensitive footage.
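
A coarse drift signal compares the centroid of recent embeddings with a baseline centroid. This is a simple stand-in, not a specific monitoring product: the function names and the 0.05 threshold are hypothetical, and a production monitor could also track per-dimension statistics and retrieval quality on labeled samples.

```python
import math
from typing import List, Sequence


def centroid(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Component-wise mean of a batch of embeddings."""
    if not vectors:
        raise ValueError("need at least one vector")
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def centroid_drift(baseline: Sequence[Sequence[float]], recent: Sequence[Sequence[float]]) -> float:
    """1 - cosine similarity of the two centroids; 0 means no shift in mean direction."""
    return 1.0 - cosine(centroid(baseline), centroid(recent))


def should_retrain(baseline: Sequence[Sequence[float]], recent: Sequence[Sequence[float]],
                   threshold: float = 0.05) -> bool:
    """Flag retraining when the recent embedding distribution has moved past the threshold."""
    return centroid_drift(baseline, recent) > threshold
```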

FAQ

What is semantic video search and how does it differ from keyword-based video search?

Semantic video search interprets the meaning of scenes, actions, and objects rather than matching only literal keywords. It uses embeddings to connect text queries to visual and audio content, so searches return relevant video segments even when keywords are absent.

How do multimodal embeddings work for video?

Multimodal embeddings combine visual, audio, and textual encodings into a shared vector representation. The fused vector captures cross-modality context, which enables a single text query to retrieve matching video clips and timestamps.

Which open source tools are useful for building embedding pipelines?

Faiss is common for ANN indexing, and Hugging Face offers pretrained transformers for encoding. Both work well for training and inference on AWS: Faiss handles exact and approximate nearest-neighbor search, and Hugging Face models can be fine-tuned as encoders for semantic search.

Can real-time search be built with OpenSearch Serverless?

Yes. OpenSearch Serverless can index vectors and serve k-nn queries in near real-time when paired with ingestion pipelines. This enables operators to search within live and recorded footage with low latency.

How much does semantic video search improve retrieval accuracy?

Academic surveys report precision gains of 30–40% versus keyword methods (Semantic Feature – an overview | ScienceDirect Topics), and large-scale benchmarks show mAP improving from 0.55 to 0.78 (New AI Tool Shows the Power of Successive Search). These gains reduce manual search time and raise user satisfaction.

What are common deployment patterns on AWS?

Teams use EC2 for heavy pretraining, Amazon SageMaker for managed training and endpoints, and Amazon OpenSearch Serverless for vector indexing. IaC via Terraform or AWS CDK ties the pieces together in CI/CD pipelines on GitHub.

How does visionplatform.ai fit into semantic video search?

visionplatform.ai converts detections into human-readable descriptions via an on-prem Vision Language Model and exposes those descriptions for forensic search and reasoning. This supports faster verification and fewer false alarms while keeping data inside customer environments.

Can semantic video search help in media production?

Yes. Editors find relevant video clips faster because searches use meaning and similarity, not just tags. Trials show search-time reductions of about 25% in production workflows (Build Agentic Analytics with Semantic Views – Snowflake).

What privacy or compliance concerns exist, and how are they addressed?

Privacy concerns arise when video and metadata leave secure environments. To address this, deploy on-prem models and keep video in private buckets. visionplatform.ai supports on-prem deployments and EU AI Act alignment to reduce data exposure.

How do I start implementing semantic video search in my organization?

Start with a pilot: select a dataset, generate embeddings, and build a small vector index. Then run user trials to measure mAP and latency. Use those results to scale training and invest in automation for ingestion and CI/CD with GitHub.