language models and vlms for operator decision support

Language models and VLMs sit at the center of modern decision support for complex operators. First, language models are a class of systems that predict text and follow instructions. Next, VLMs combine visual inputs with text reasoning so a system can interpret images and answer questions about them. For example, vision-language models can link an image to a report, and then explain why a detection matters. Also, multimodal systems outperform single-modality systems on many benchmarks. In fact, pretrained multimodal models show up to a 30% gain on visual question answering (VQA) tasks, which matters when an operator must ask rapid, targeted questions.

Operators need both visual and text reasoning because no single stream captures context. First, a camera may see an event. Then, logs and procedures provide what to do next. Thus, a combined model that handles visual and textual inputs can interpret the scene and match it to rules, procedures, or historical patterns. Also, this fusion of visual and textual data helps reduce false positives and improves the speed of verification. For example, emergency diagnostics research found up to a 15% accuracy improvement when imaging was paired with textual context in acute settings.

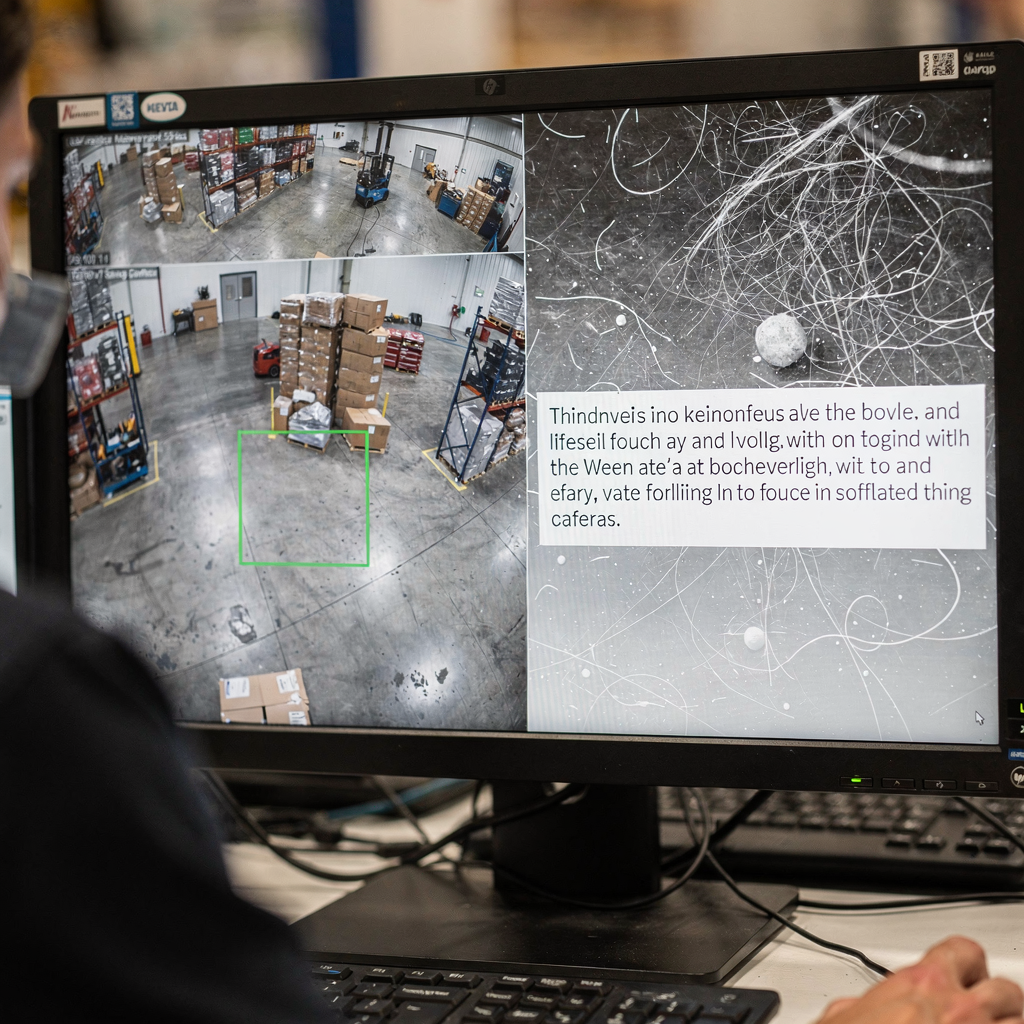

In operations, the ability to search and retrieve is critical. visionplatform.ai turns camera feeds into searchable knowledge so operators can find relevant clips with plain queries. For instance, the VP Agent Search feature lets a human ask for a past incident in ordinary words and get precise results; this bridges traditional detection and human reasoning. Also, VLMs enable automatic summaries and timelines of events. Moreover, a human operator can request a natural-language rationale for a model output; such explanations support trust and verification. Finally, VLMs paired with robotic systems can be used to inspect equipment or guide a robotic arm during remote maintenance, enabling safer and faster interventions.

vision language models for agents in the space domain

Agents in the space domain are starting to use vision language models to enhance situational awareness. First, operator agents in the space domain can analyze satellite and payload imagery. Next, vision language models acting as operator aides help identify anomalies, space debris, or instrument faults. For example, in anomaly detection benchmarks, VLMs showed up to a 15% accuracy boost when contextual metadata was included. Also, large language models add planning and instruction-following to visual pipelines, which enables end-to-end situational reasoning for mission teams.

Use cases vary across the space sector. First, satellite inspection teams use VLMs to scan imagery for panel damage or micrometeorite strikes. Second, debris detection systems flag fragments and classify threats to an orbiting asset. Third, payload inspection workflows combine vision analysis with telemetry to decide whether to schedule a corrective operation. Also, these systems operate inside a tight architecture where bandwidth and latency constrain what can be sent to ground. Therefore, on-board pre-processing and intelligent data selection become essential. Teams must also decide, for both software and hardware, where to run models: on satellite edge processors or on ground servers during limited contact windows. For example, a small model on board can score frames and send only flagged inputs to ground, which reduces telemetry volume.
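
As a rough illustration of on-board frame selection, the sketch below keeps only frames whose score passes a threshold and then fills a fixed downlink budget, highest score first. The `Frame` fields, the 0.8 threshold, and the 500 KB budget are assumptions chosen for the example, not values from any real mission.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    frame_id: int
    anomaly_score: float  # assumed output of a small on-board model, in [0, 1]
    size_kb: int          # encoded size of the frame


def select_for_downlink(frames, threshold=0.8, budget_kb=500):
    """Return flagged frames that fit the downlink budget, highest score first."""
    flagged = [f for f in frames if f.anomaly_score >= threshold]
    flagged.sort(key=lambda f: f.anomaly_score, reverse=True)

    selected, used_kb = [], 0
    for frame in flagged:
        # Skip any frame that would exceed the remaining budget.
        if used_kb + frame.size_kb <= budget_kb:
            selected.append(frame)
            used_kb += frame.size_kb
    return selected


frames = [
    Frame(1, 0.10, 200),
    Frame(2, 0.95, 300),
    Frame(3, 0.85, 250),
    Frame(4, 0.90, 150),
]
selected = select_for_downlink(frames)
print([f.frame_id for f in selected])  # [2, 4]
```

Frame 3 is flagged but dropped here because it would push the total past the budget; a real system might queue it for the next contact window instead.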

Simulation environment testing plays a key role before deployment. Also, a realistic simulation helps validate models on synthetic anomalies and rare conditions. For instance, teams use a dataset of annotated failure modes and a simulation environment to stress-test agents. Next, human-in-the-loop validation helps ensure outputs are actionable and aligned with procedures. Finally, mission planners value architectures that balance compute, power, and robustness so that models remain reliable during extended missions.

real-time AI integrate vlms with computer vision

System architecture must connect sensors to reasoning. First, a typical pipeline flows camera or sensor → computer vision pre-processing → VLM inference → action. Also, CV modules handle detection, tracking, and basic filtering. Then, VLMs add interpretation and contextual responses. For real-time needs, many teams process live camera feeds at 30 fps and push pre-processed frames to a VLM that returns sub-second responses. For instance, careful pruning and model quantization allow stream processing with low latency. Also, visionplatform.ai supports on-prem inference so video and models never leave the site, which helps meet compliance needs.
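
The stages of such a pipeline can be sketched as plain functions wired together. This is a minimal sketch under stated assumptions: `run_pipeline`, `Detection`, and the three stub stages are hypothetical names for illustration and do not represent any particular product API.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float


def run_pipeline(frame, detect, describe, act, min_confidence=0.5):
    """Run one frame through CV detection, VLM description, and an action step."""
    detections = [d for d in detect(frame) if d.confidence >= min_confidence]
    if not detections:
        return None  # nothing worth sending to the (more expensive) VLM
    description = describe(frame, detections)
    return act(description)


# Stub stages standing in for a real detector, VLM, and action handler.
def fake_detect(frame):
    return [Detection("person", 0.9), Detection("bag", 0.3)]


def fake_describe(frame, detections):
    labels = ", ".join(d.label for d in detections)
    return f"Frame {frame}: {labels} detected"


def fake_act(description):
    return {"action": "notify_operator", "summary": description}


result = run_pipeline(7, fake_detect, fake_describe, fake_act)
print(result)
```

Filtering low-confidence detections before the VLM call is one way to keep the costly inference step off the hot path for uneventful frames.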

Edge deployment demands model efficiency. First, pruning, distillation, and quantization reduce model size while preserving the capabilities that matter most. Next, deploying a compact VLM on GPU servers or edge accelerators like NVIDIA Jetson makes sub-second inference possible. Also, best practices include batch sizing to match throughput, asynchronous pipelines to avoid blocking, and fallback detectors to maintain uptime. For robotics, real-time perception must tolerate dropped frames and noisy inputs. Therefore, the system should aggregate evidence across frames and use confidence thresholds to decide when to ask a human operator to intervene.
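
One simple way to aggregate evidence across frames is a sliding-window average with two thresholds, sketched below. The class name, window size, and threshold values are illustrative assumptions; a dropped frame is represented by `None` and leaves the window unchanged.

```python
from collections import deque


class EvidenceAggregator:
    """Average recent per-frame confidences and map the result to a decision."""

    def __init__(self, window=5, alert_threshold=0.8, review_threshold=0.5):
        self.scores = deque(maxlen=window)  # oldest score falls out automatically
        self.alert_threshold = alert_threshold
        self.review_threshold = review_threshold

    def update(self, confidence):
        """Add one frame's confidence; pass None for a dropped frame."""
        if confidence is not None:
            self.scores.append(confidence)
        if not self.scores:
            return "no_evidence"
        mean = sum(self.scores) / len(self.scores)
        if mean >= self.alert_threshold:
            return "auto_alert"
        if mean >= self.review_threshold:
            return "ask_operator"
        return "ignore"


agg = EvidenceAggregator(window=3)
decisions = [agg.update(c) for c in [None, 0.9, None, 0.4, 0.3, 0.1]]
print(decisions)
```

In this run the decision moves from `auto_alert` to `ask_operator` and finally to `ignore` as weaker frames replace the strong one in the window, so a single noisy frame does not flip the outcome on its own.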

Integration requires clear APIs and event formats. visionplatform.ai exposes events via MQTT and webhooks so control rooms can connect AI agents to existing VMS workflows. Also, search tools and pre-programmed actions can be tied to verified alerts to reduce manual steps. Finally, teams should measure computational cost, classification latency, and end-to-end time-to-action. This systematic monitoring helps iterate on architecture and maintain operational resilience during heavy load.
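
To make the idea of an event format concrete, the sketch below defines a hypothetical alert payload that could be serialized to JSON for an MQTT message or a webhook body, and derives time-to-action from its timestamps. The field names are assumptions made for this example and are not the schema of visionplatform.ai or any specific VMS.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class AlertEvent:
    # Hypothetical fields; a real integration would follow its platform's schema.
    camera_id: str
    label: str
    confidence: float
    detected_at: float  # seconds since epoch when the frame was captured
    verified_at: float  # when the alert was verified
    acted_at: float     # when an operator or agent acted on it

    def to_json(self):
        """Serialize with stable key order so payloads are easy to diff and log."""
        return json.dumps(asdict(self), sort_keys=True)

    def time_to_action(self):
        """End-to-end seconds from capture to action."""
        return self.acted_at - self.detected_at


event = AlertEvent("cam-12", "intrusion", 0.91, 100.0, 100.5, 104.0)
payload = event.to_json()
decoded = json.loads(payload)
print(decoded["label"], event.time_to_action())
```

Carrying the timestamps inside the event lets downstream consumers compute latency metrics without a separate lookup.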

bridging the gap: vision language models as operator to leverage decisions

Explainability stands at the heart of trust. First, operators need saliency maps and natural-language rationales to understand why a model made an assertion. As one expert put it: “The explainability of model recommendations is not just a technical challenge but a fundamental requirement for adoption in clinical and operational settings” (source). Next, combining visual overlays with a short natural-language rationale helps the operator validate a recommendation quickly. For example, the VP Agent Reasoning feature correlates detections, VLM descriptions, and VMS logs to explain validity and impact.

Human–machine teaming studies show measurable gains. First, experiments indicate a roughly 20% reduction in operator error when AI provides context and recommendations during high-pressure tasks. Next, these gains come from reduced cognitive load and faster verification cycles. Also, human-in-the-loop processes preserve oversight for high-risk decisions while enabling automation for routine tasks. For instance, VP Agent Actions can pre-fill incident reports and suggest next steps while leaving final approval to human operators.

Governance and policy matter. First, operators must know data provenance and audit trails to accept automated suggestions. Next, a governance framework should define permissions, escalation rules, and validation checkpoints. Also, systems should support continuous validation and retraining pipelines to maintain robustness as environments evolve. Finally, organizations should evaluate models with open-source tools where possible and keep validation logs to satisfy regulations such as ITAR or GDPR when applicable. This builds operator trust and supports safer automation in live operations.
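
Permissions, escalation rules, and an audit trail can be expressed as a small policy function, as in the sketch below. The action names, the two risk tiers, and the 0.7 confidence floor are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical risk tiers: routine actions may run automatically,
# high-risk actions always go to a human operator.
AUTO_APPROVED = {"prefill_report", "tag_clip"}
NEEDS_OPERATOR = {"dispatch_guard", "lock_door"}


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, action, confidence, decision, model_version):
        self.entries.append({
            "action": action,
            "confidence": confidence,
            "decision": decision,
            "model_version": model_version,
        })


def decide(action, confidence, log, model_version="v0", min_confidence=0.7):
    """Apply the escalation rules and record the outcome in the audit log."""
    if action in AUTO_APPROVED and confidence >= min_confidence:
        decision = "execute"
    elif action in AUTO_APPROVED or action in NEEDS_OPERATOR:
        decision = "escalate_to_operator"
    else:
        decision = "reject"  # unknown actions are never executed
    log.record(action, confidence, decision, model_version)
    return decision


log = AuditLog()
outcomes = [
    decide("prefill_report", 0.9, log),
    decide("prefill_report", 0.4, log),
    decide("lock_door", 0.99, log),
    decide("open_gate", 0.99, log),
]
print(outcomes)
```

Every call writes an entry, including rejections, so the log can later show why a suggestion was or was not acted on.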

GenAI deployment in the public sector to integrate vlms

Public sector agencies are actively evaluating GenAI and VLMs for defence, emergency services, and space programmes. First, policy drivers in many countries encourage on-prem and auditable AI to meet compliance and security needs. Next, agencies demand clear data governance and must align with ITAR, GDPR, and procurement rules. For example, some national trials use VLMs on secure networks where video never leaves the site to ensure compliance with local rules. Also, deployment plans typically combine field pilots with controlled live trials.

Use cases in defence and emergency services include perimeter monitoring, forensic search, and automated reporting. Forensic capabilities can search historical footage using plain language; see our forensic search resource for an example of how search can work in an airport context (forensic search in airports). Also, process anomaly detection helps teams find atypical behaviors before they escalate; more context is available in our process anomaly page (process anomaly detection in airports).

Trials in national space programmes often focus on satellite health and debris monitoring. First, a satellite can generate large volumes of visual data but has only limited downlink. Therefore, agencies use on-board filters and ground-based VLMs to prioritize telemetry. Also, public sector deployments must consider procurement, audit, and long-term maintenance. visionplatform.ai works as an integrator, enabling control rooms to keep video and models on-prem while adding agent-driven workflows that reduce operator workload and support automation. Finally, a staged rollout that includes pilot studies, validation, and iterative scaling helps align stakeholders and institutionalize best practices.

advance real-world domain integration

Next-gen capabilities will focus on continual learning, adapters, and prompt tuning to improve adaptability. First, adapter modules allow teams to update a model for a new site without retraining the full network. Then, continual learning pipelines adapt models over time while limiting catastrophic forgetting. Also, prompt tuning and multimodal extensions let a single system handle new tasks with minimal labeled data. For example, fine-tuning on a targeted dataset improves site-specific accuracy and reduces false positives.

Challenges remain. First, dataset labeling for rare events is costly, and compute budgets constrain how often teams can retrain. Next, robustness under operational variance—lighting, weather, camera angles—requires systematic testing. Therefore, a practical roadmap starts with small pilots, followed by incremental rollout and continuous monitoring. Also, teams should include clear validation criteria and a plan for iteration. For instance, a pilot can evaluate experimental results on controlled scenarios, then extend to live camera feeds for validation.

Governance and metrics complete the loop. First, monitor classification accuracy, latency, and the human operator acceptance rate. Next, track actionable insights and time-to-resolution to highlight operational benefits. Also, include behavior analytics to understand how AI suggestions change human decision-making processes. Finally, a methodical approach that blends technical validation, human-in-the-loop testing, and policy alignment will enable sustainable deployment. As a result, teams can bridge the gap between research and practice and advance safer, more adaptive operational AI across domains.
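
The metrics named above can be computed from a simple log of reviewed alerts. The sketch below assumes each record carries the model's label, the ground-truth label, the inference latency, whether the operator accepted the suggestion, and the time to resolution; these record keys are hypothetical.

```python
from statistics import mean


def summarize(records):
    """Compute accuracy, latency, acceptance rate, and time-to-resolution."""
    if not records:
        raise ValueError("at least one record is required")
    n = len(records)
    return {
        "accuracy": sum(r["predicted"] == r["actual"] for r in records) / n,
        "mean_latency_s": mean(r["latency_s"] for r in records),
        "acceptance_rate": sum(r["accepted"] for r in records) / n,
        "mean_time_to_resolution_s": mean(r["resolution_s"] for r in records),
    }


records = [
    {"predicted": "intrusion", "actual": "intrusion", "latency_s": 0.5, "accepted": True, "resolution_s": 60},
    {"predicted": "intrusion", "actual": "none", "latency_s": 0.5, "accepted": False, "resolution_s": 120},
    {"predicted": "none", "actual": "none", "latency_s": 1.0, "accepted": True, "resolution_s": 90},
    {"predicted": "loitering", "actual": "loitering", "latency_s": 2.0, "accepted": False, "resolution_s": 90},
]
summary = summarize(records)
print(summary)
```

Tracking acceptance rate alongside accuracy helps separate cases where the model was wrong from cases where it was right but the operator did not trust the suggestion.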

FAQ

What are vision language models and how do they differ from language models?

Vision language models combine image and text understanding so they can answer questions about visual scenes. By contrast, language models handle text-only tasks and cannot interpret visual data without extra modules.

How do VLMs improve operator decision-making in control rooms?

VLMs convert live video into textual descriptions and contextual summaries that operators can search and verify. They reduce cognitive load by correlating video, procedures, and system logs to present actionable options.

Can VLMs run on local infrastructure to meet compliance needs?

Yes. On-prem deployment keeps video and models within the secure environment, which helps meet GDPR and ITAR constraints. visionplatform.ai offers options that avoid cloud video transfer and support audit trails.

Are there examples of measurable benefits from using VLMs?

Benchmarking shows up to a 30% improvement on visual question answering and a 15% accuracy boost in some diagnostic tasks when visual context is paired with text. Studies also report time savings in clinical workflows and validation.

How do VLMs handle limited bandwidth in space missions?

They use on-board pre-processing to score frames and send only flagged data or summaries. This selective telemetry reduces downlink volume while keeping the ground team informed.

What explainability features should operators expect from VLMs?

Expect saliency maps, short natural-language rationales, and cross-checks against logs and procedures. These features enable quick validation and build trust in high-risk decisions.

How do I integrate VLMs with existing VMS platforms?

Use standard APIs and event streams such as MQTT and webhooks to connect VLM outputs to VMS workflows. For detailed examples in airport contexts, see our intrusion and forensic search resources (intrusion detection, forensic search).

What governance steps are needed before deploying VLMs?

Define permissions, audit trails, escalation paths, and validation criteria. Also, implement continuous monitoring and retraining plans to maintain robustness over time.

Can VLMs work with robotic inspection systems?

Yes. VLMs can guide robotic arms or drones by interpreting camera input and providing contextually relevant instructions. Integration with robotics enables safer inspection and autonomous routines.

How should organisations start a rollout of VLMs?

Begin with a small pilot focused on a well-defined use case, collect validation metrics, and iteratively expand deployment. Include human-in-the-loop reviews during early stages to ensure safe automation and operator acceptance.