anomaly

An anomaly in a video stream is any motion, behaviour, or event that deviates from an established normal pattern. It can be a person moving against the crowd, an object left unattended, or a vehicle taking an unexpected route. Anomalies are rare by definition, yet they are exactly what operators search for across long hours of surveillance. Because anomalies are infrequent, human review is slow and inefficient. Cameras produce huge volumes of footage, and large-scale operations produce petabytes of video data each day, so automatic anomaly flagging is essential for effective monitoring (see "Intelligent video surveillance: a review through deep learning"). Effective flagging has three requirements. First, operators need a clear definition of what counts as an anomaly. Second, systems need robust models that distinguish anomalies from normal noise. Third, the system must surface each anomaly quickly and with a score that operators trust.

In practice, an anomaly often appears as a brief deviation in motion or behaviour within a single video frame or across a sequence. For example, a person running through an otherwise steady crowd creates unusual motion vectors, and an unattended bag creates a localized change in object patterns. Anomalies may involve single objects, groups, or contextual interactions. They are also context dependent: a behaviour that is normal at one time becomes an anomaly at another. For example, loitering near a secure gate is anomalous after hours. For more structured site examples, our forensic search capability helps operators find loitering or unattended-object anomalies across days and cameras (see forensic loitering and search).

Because anomalies are rare, labelled anomalous examples are scarce. Training models therefore requires careful data curation and learning approaches that rely mainly on normal examples. Anomaly detectors must balance sensitivity and precision. Too many false positives overwhelm operators, and too many false negatives mean critical events are missed. In addition, any deployed anomaly detector must respect privacy and legal boundaries. Systems that extract and store video data must follow the applicable rules, especially in sensitive jurisdictions such as the EU. For legal context and a discussion of search and civil liberties, see the analysis of video analytics and search practices in "Video Analytics and Fourth Amendment Vision". Finally, an effective control-room workflow pairs anomaly flags with context, which is why platforms that turn detections into searchable context and actions improve operator outcomes. For example, visionplatform.ai converts anomaly detections into human-readable descriptions and decision support so operators can verify and act faster and with more confidence.
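The balance between sensitivity and precision described above can be made concrete with a small sketch. The scores, labels, and thresholds below are invented purely for illustration, and `precision_recall` is a hypothetical helper, not part of any product API.

```python
def precision_recall(scores, labels, threshold):
    """Return (precision, recall) when every score >= threshold is flagged.

    labels use 1 for a true anomaly and 0 for normal footage.
    """
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if s >= threshold and y == 1)
    fp = sum(1 for s, y in pairs if s >= threshold and y == 0)
    fn = sum(1 for s, y in pairs if s < threshold and y == 1)
    # Convention chosen here: an empty denominator yields 1.0.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall


# Illustrative anomaly scores for eight segments (made-up values).
scores = [0.1, 0.2, 0.35, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 0, 1, 1]

low = precision_recall(scores, labels, 0.3)    # sensitive: flags a lot
high = precision_recall(scores, labels, 0.75)  # strict: flags little
print("threshold 0.30 ->", low)
print("threshold 0.75 ->", high)
```

With these toy numbers the low threshold catches every anomaly but half of its flags are false alarms, while the high threshold raises no false alarms but misses one of the three anomalies.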

video anomaly detection

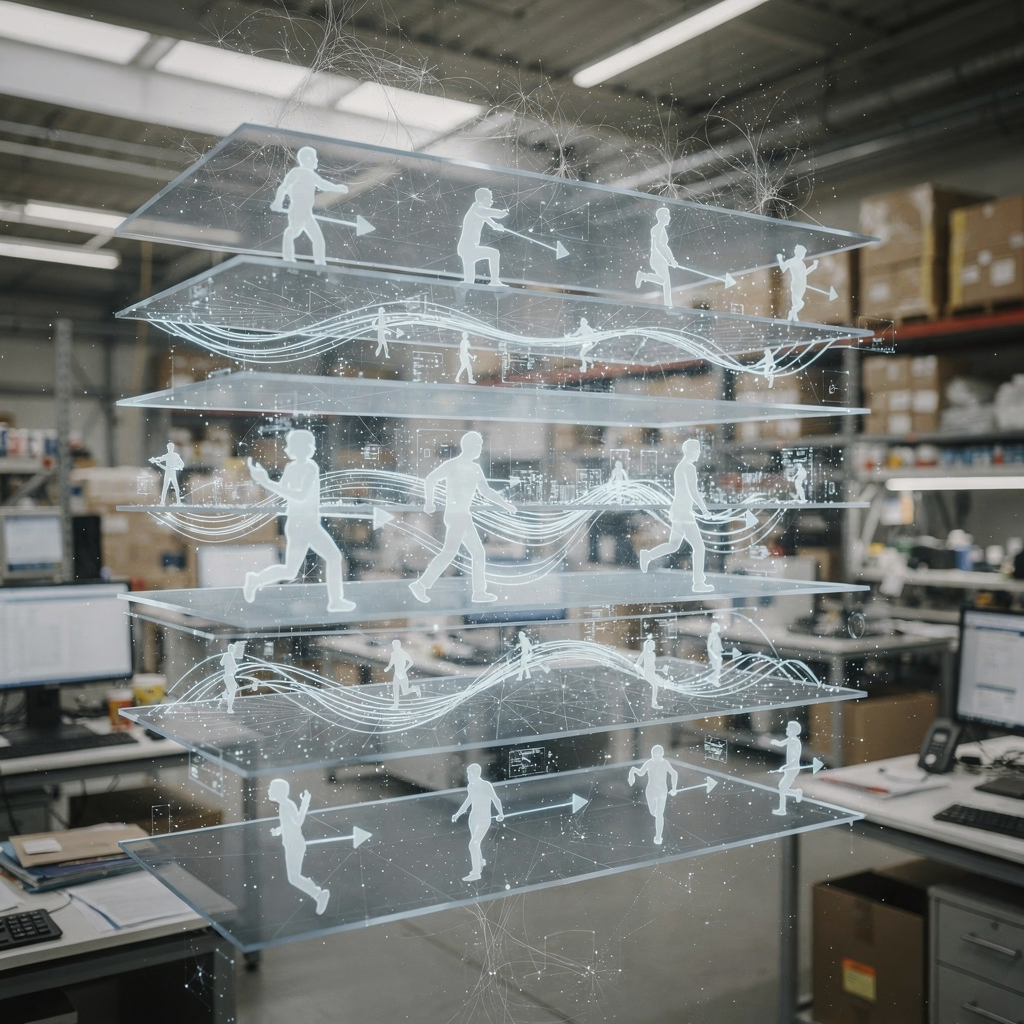

Video anomaly detection applies algorithms directly to pixel data and motion, not to metadata alone. It inspects video frames and temporal patterns to find deviations, feeding spatial embeddings and motion cues into models that return an anomaly score per frame or per segment. The system then ranks segments for operator review. In trials, modern systems report high detection rates: state-of-the-art models achieve detection accuracy above 85% on benchmark datasets, and in some specialised settings they reach nearly 90% precision for suspicious activities (see the review of intelligent video surveillance). Anomaly detection can also cut false alarms by up to 30% compared with threshold-based monitors, which directly reduces wasted operator time and response costs (see IDS and operational impact).
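As a rough sketch of the ranking step, per-frame scores can be grouped into fixed-length segments and sorted so the most suspicious segments reach the operator first. Taking the maximum frame score as the segment score is an assumption made here for simplicity, and `rank_segments` is a hypothetical name.

```python
def rank_segments(frame_scores, segment_len, top_k):
    """Group per-frame scores into segments and return the top_k segments.

    Each result is (first_frame, last_frame, segment_score), where the
    segment score is the maximum frame score inside the segment.
    """
    segments = []
    for start in range(0, len(frame_scores), segment_len):
        chunk = frame_scores[start:start + segment_len]
        segments.append((start, start + len(chunk) - 1, max(chunk)))
    # Highest score first, so operators review the likeliest events first.
    segments.sort(key=lambda seg: seg[2], reverse=True)
    return segments[:top_k]


# Made-up per-frame anomaly scores for twelve frames.
frame_scores = [0.1, 0.2, 0.1, 0.9, 0.8, 0.2, 0.1, 0.1, 0.3, 0.5, 0.4, 0.2]
ranked = rank_segments(frame_scores, segment_len=4, top_k=2)
print(ranked)
```

A mean or a high percentile could replace the maximum when single noisy frames would otherwise dominate the ranking.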

Real-time video processing matters. Many camera streams run at 25–30 frames per second, and real-time video pipelines allow timely alerts and rapid response. Real-time video detection and localization deliver both frame-level anomaly markers and time ranges for events in video. As a result, teams can triage and dispatch while the event unfolds. Real-time video systems combine fast neural networks for spatial detection and compact temporal models for short-term context, and then they fuse outputs into a robust anomaly score. For use cases like traffic monitoring and public-space surveillance, this latency matters. For example, traffic management benefits when an abnormal stopped vehicle is detected and escalated within seconds. For deployment, a hybrid approach that combines rule-based triggers with learned scoring often improves robustness, and our platform supports such mixed workflows to reduce false positives and to aid verification.
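Two ideas from the paragraph above lend themselves to a short sketch: the per-frame time budget implied by the frame rate, and the fusion of spatial, temporal, and rule-based signals into one score. The equal weighting and the `rule_floor` value are illustrative assumptions, not values used by any particular system, and both function names are hypothetical.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, to keep up with a stream."""
    return 1000.0 / fps


def fuse_scores(spatial, temporal, rule_triggered, w_spatial=0.5, rule_floor=0.9):
    """Blend learned scores; a fired rule lifts the result to at least rule_floor.

    spatial and temporal are assumed to lie in [0, 1].
    """
    learned = w_spatial * spatial + (1.0 - w_spatial) * temporal
    return max(learned, rule_floor) if rule_triggered else learned


print(frame_budget_ms(25))            # 40.0 ms per frame at 25 fps
print(round(frame_budget_ms(30), 1))  # about 33.3 ms per frame at 30 fps
print(fuse_scores(0.2, 0.6, rule_triggered=False))
print(fuse_scores(0.2, 0.6, rule_triggered=True))
```

At 25 to 30 frames per second, the whole pipeline has roughly 33 to 40 ms per frame if it processes every frame, which is why compact temporal models matter.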

Video anomaly detection also enables efficient search across archives. Rather than searching by time code, operators can search by behaviour or incident type. Our VP Agent Search converts video into searchable descriptions using a Vision Language Model, so teams can query long archives in natural language and find anomalous segments faster (see forensic search in airports). Importantly, models must be fine-tuned on site-specific normal video, because what counts as anomalous varies by environment and time. Finally, researchers continue to improve both the speed and accuracy of video anomaly detection through new architectures and training regimes (see anomaly detection techniques and performance).

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

supervised video anomaly detection

Supervised video anomaly detection trains models on labelled normal and labelled anomalous clips. Supervised anomaly systems learn direct mappings from input video to anomaly labels, and they can achieve high precision when ample labelled anomalies exist. Architectures typically use convolutional neural networks and 3D ConvNets to capture appearance and short-term motion. These networks learn spatial patterns and temporal dynamics together. Many supervised pipelines also add recurrent layers for extended context. The result is often strong detection and even coarse localization of events in video.

Supervised video anomaly detection can reach very high precision. In controlled benchmarks, supervised methods report precision levels up to 90% for specific tasks and datasets when training data includes representative anomalies. However, supervised anomaly approaches require large, annotated video datasets of anomalous events, and collecting such datasets is costly. Annotating events in video clips is labour intensive. Moreover, anomalies are rare and varied, so models can overfit to the known anomaly types and then miss novel anomalies. To manage this, teams use data augmentation, synthetic anomalies, and transfer learning. They also combine supervised models with unsupervised scoring so the system catches unknown anomalies and known threat patterns together.

In operational settings, supervised approaches work best when the site has repeatable anomalous events or when the organisation can invest in annotation. At airports, for instance, supervised models trained on people detection, ANPR/LPR, and object behaviours can identify specific breach patterns quickly, and those models plug into a broader control-room agent for verification and response (see vehicle identification and ANPR workflows). Still, supervised anomaly training demands careful validation to keep false alarms low. Finally, a supervised approach benefits from a continuous feedback loop in which operator corrections re-label events and the models are retrained incrementally. This loop reduces drift and improves long-term anomaly detection performance.

weakly supervised video anomaly detection

Weakly supervised video anomaly detection uses coarse labels, such as video-level tags, instead of frame-level annotations. Weakly supervised methods lower the cost of labelling by letting algorithms learn which parts of a labelled clip likely contain anomalous moments. One common pattern is multiple instance learning, where a long clip is labelled as containing an anomaly and the model infers which segments are responsible. Multiple instance learning helps models focus on candidate segments without exhaustive annotation.
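A minimal sketch of the multiple instance learning idea follows. It treats a clip as a "bag" of segment scores, scores the bag by its highest segment, and uses a hinge ranking loss between an anomalous bag and a normal bag. This particular loss is one common formulation chosen here as an assumption; the segment scores are made up and the function names are hypothetical.

```python
def bag_score(segment_scores):
    """A clip-level score: the most anomalous segment speaks for the clip."""
    return max(segment_scores)


def mil_ranking_loss(anomalous_bag, normal_bag, margin=1.0):
    """Hinge loss that is zero once the anomalous clip outscores the
    normal clip by at least `margin`."""
    return max(0.0, margin - bag_score(anomalous_bag) + bag_score(normal_bag))


def localise(segment_scores):
    """Index of the segment most likely responsible for the clip label."""
    return max(range(len(segment_scores)), key=lambda i: segment_scores[i])


# Segment scores a model might output for two clips (illustrative values).
anomalous_clip = [0.1, 0.9, 0.2]  # clip-level label: contains an anomaly
normal_clip = [0.2, 0.3, 0.1]     # clip-level label: normal

print(mil_ranking_loss(anomalous_clip, normal_clip))
print(localise(anomalous_clip))
```

During training, minimising this loss pushes the top segment of anomalous clips above the top segment of normal clips, which is how the model learns to localise without segment-level labels.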

Weakly supervised video anomaly detection is effective in many real environments. For example, teams can train on day-long clips labelled “contains intrusion” and then let the algorithm pinpoint anomalous segments during training. This reduces labelling effort drastically. In benchmarks, weakly supervised pipelines have achieved strong area-under-curve measures, sometimes reaching around 88% AUC on public datasets with minimal labels. The approach also scales well when new anomaly categories emerge. In practice, weak supervision pairs well with a small set of strongly labelled clips to ground the model.
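For readers unfamiliar with the AUC figure quoted above, the sketch below computes the area under the ROC curve as the probability that a randomly chosen anomalous segment scores higher than a randomly chosen normal one. The scores and labels are toy values, and `roc_auc` is a hypothetical helper written for illustration.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    Counts how often an anomalous item (label 1) outscores a normal
    item (label 0); ties count as half a win.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one anomalous and one normal item")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Toy example: two normal and two anomalous segments.
auc = roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
print(auc)  # 0.75
```

An AUC of 0.5 corresponds to random ranking and 1.0 to a perfect ranking. The pairwise loop is quadratic, so large evaluations would normally use a sort-based computation instead.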

Weakly supervised methods often rely on temporal attention modules and ranking losses that push likely anomalous segments to score higher than normal segments. Their output can also feed our VP Agent Reasoning layer, which verifies probable anomalies by checking correlated signals. For instance, an anomalous person near a secure bay may trigger a weak label, and the agent then reasons over access logs and camera context to confirm or rule out the event (see intrusion detection workflows). This combination reduces false alarms and improves trust in flagged segments. Finally, weakly supervised anomaly learning supports incremental deployment: start with coarse labels, then refine with operator feedback to improve localization and reduce response time.

anomaly detection method

Key anomaly detection methods in video include reconstruction-based models, predictive models, and discriminative clustering. Classic autoencoders reconstruct normal video frames and raise an alert when reconstruction error is high. GAN-based predictors synthesise expected frames and treat large discrepancies as anomalous. Clustering-based systems group normal behaviour and label outliers as anomalies. Each anomaly detection method has trade-offs in sensitivity, interpretability, and computational cost.
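The reconstruction-error principle can be sketched without a neural network. In the example below the "reconstructor" is a deliberately trivial stand-in (the per-pixel mean of normal frames), not a trained autoencoder; it only illustrates how a high error between a frame and its reconstruction turns into an alert. Frames are flattened lists of made-up pixel intensities, and all names are hypothetical.

```python
def fit_mean_frame(normal_frames):
    """Stand-in model: the per-pixel average of the normal frames."""
    n = len(normal_frames)
    return [sum(pixels) / n for pixels in zip(*normal_frames)]


def reconstruction_error(frame, reconstruction):
    """Mean squared error between a frame and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(frame, reconstruction)) / len(frame)


# Tiny 4-pixel "frames" with illustrative intensities in [0, 1].
normal_frames = [
    [0.1, 0.1, 0.1, 0.1],
    [0.2, 0.1, 0.1, 0.2],
    [0.1, 0.2, 0.2, 0.1],
]
mean_frame = fit_mean_frame(normal_frames)

typical = reconstruction_error([0.15, 0.1, 0.15, 0.1], mean_frame)
unusual = reconstruction_error([0.9, 0.9, 0.1, 0.1], mean_frame)
print(typical, unusual)
```

A real autoencoder would replace `fit_mean_frame` with a learned encoder and decoder, but the scoring step (compare input with reconstruction, alert on large error) keeps the same shape.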

Feature extraction matters. Spatial features come from CNN embeddings that capture appearance. Temporal features come from recurrent modules such as LSTMs, or from temporal convolution blocks that capture motion across a video sequence. Hybrid pipelines often combine an object detection front end with a temporal scoring back end. For example, an object detection module extracts people, vehicles, and items, and a temporal model then scores sequences for unusual transitions. When rule-based triggers are added, such pipelines become more robust, because the rules catch obvious events and the learned scoring filters ambiguous cases.
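The temporal scoring back end can be illustrated with a very small model of "unusual transitions". The sketch assumes a detector has already turned each track into a sequence of discrete state labels; the label names, the add-alpha smoothing, and both function names are assumptions made for illustration only.

```python
import math
from collections import Counter


def fit_transitions(normal_sequences):
    """Count state-to-state transitions observed in normal sequences."""
    counts, totals = Counter(), Counter()
    for seq in normal_sequences:
        for a, b in zip(seq, seq[1:]):
            counts[(a, b)] += 1
            totals[a] += 1
    return counts, totals


def sequence_surprise(seq, counts, totals, vocab_size, alpha=1.0):
    """Mean negative log-probability of the transitions in seq.

    Add-alpha smoothing keeps unseen transitions finite but expensive.
    """
    if len(seq) < 2:
        return 0.0
    total = 0.0
    for a, b in zip(seq, seq[1:]):
        p = (counts[(a, b)] + alpha) / (totals[a] + alpha * vocab_size)
        total -= math.log(p)
    return total / (len(seq) - 1)


# Hypothetical per-track state labels produced by a detection front end.
normal = [["walk", "walk", "stand", "walk"]] * 5
counts, totals = fit_transitions(normal)

usual = sequence_surprise(["walk", "stand", "walk"], counts, totals, vocab_size=4)
odd = sequence_surprise(["walk", "run", "fall"], counts, totals, vocab_size=4)
print(usual, odd)
```

Sequences made of transitions that were common in normal footage get a low surprise value, while sequences containing unseen transitions score higher.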

Practical deployments mix rule-based logic with learned anomaly detectors to improve robustness. For critical systems, a human-in-the-loop verifies high-risk anomalies, and low-risk anomalies may be triaged automatically. This pattern reduces operator burden and keeps oversight. For video anomaly detection based on contextual reasoning and search, our VP Agent Suite turns detected events into descriptive text and reasoning so operators can verify and act immediately. The combined pipeline supports incident report generation and automated workflows. For crowded or complex scenes, systems that include event detection and localization help operators pinpoint the exact video frame and location of the anomaly in the scene. Finally, ongoing research looks at combining contrastive learning and multi-task objectives to improve feature discrimination for anomaly detection in dynamic scenes.

unsupervised video anomaly detection

Unsupervised video anomaly detection learns normal patterns from unlabelled streams and then flags departures from those patterns. Unsupervised approaches include one-class SVMs on feature embeddings, deep clustering that groups normal behaviour, and self-supervised contrastive learning that builds robust representations. These setups assume that the training footage is normal, or overwhelmingly so. At runtime, anything that does not fit the learned normal manifold is scored as an anomaly. This design is ideal when anomalous examples are extremely rare or unknown.
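The idea of scoring departures from a learned normal manifold can be sketched with a nearest-neighbour distance. This is a simple stand-in for the one-class and clustering models named above, not an implementation of any of them; the 2-D embeddings are made up and `knn_anomaly_score` is a hypothetical name.

```python
import math


def knn_anomaly_score(x, normal_embeddings, k=3):
    """Mean Euclidean distance from x to its k nearest normal embeddings.

    Larger values mean x lies farther from what was seen as normal.
    """
    dists = sorted(math.dist(x, e) for e in normal_embeddings)
    nearest = dists[:k]
    return sum(nearest) / len(nearest)


# Toy 2-D embeddings of normal clips, clustered near the origin.
normal_embeddings = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1), (0.05, 0.05)]

inlier = knn_anomaly_score((0.05, 0.0), normal_embeddings)
outlier = knn_anomaly_score((2.0, 2.0), normal_embeddings)
print(inlier, outlier)
```

In practice the embeddings would come from a pretrained or self-supervised network and have many more dimensions, which usually calls for an approximate nearest-neighbour index.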

Unsupervised video anomaly detection reduces labelling costs and supports continuous learning. For example, a deep autoencoder for unsupervised anomaly detection compresses normal video, and large reconstruction errors indicate potential anomalies. Similarly, self-supervised tasks such as future frame prediction or temporal order verification let a model learn temporal regularities; when predictions fail, the system raises an alert. These methods can operate without curated labels and can adapt as normal patterns change. However, unsupervised approaches face challenges. Distinguishing subtle anomalies from normal variation is hard. Setting thresholds for real-world use requires tuning and operator feedback. Concept drift occurs as environments change, and models must be retrained or adapt online.
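Threshold setting is often the first tuning step. One simple starting point, shown here as an assumption and not a recommendation, is to place the alert threshold a few standard deviations above the mean score observed on normal footage. The scores are illustrative and `fit_threshold` is a hypothetical helper.

```python
import statistics


def fit_threshold(normal_scores, k=3.0):
    """Alert threshold at mean + k * sample standard deviation.

    Assumes normal scores are roughly bell-shaped; heavy-tailed scores
    would call for a quantile-based threshold instead.
    """
    return statistics.mean(normal_scores) + k * statistics.stdev(normal_scores)


# Anomaly scores collected on footage believed to be normal (made up).
normal_scores = [0.10, 0.12, 0.09, 0.11, 0.10, 0.13, 0.08]
threshold = fit_threshold(normal_scores)

for score in (0.12, 0.5):
    print(score, "ALERT" if score > threshold else "ok")
```

Raising `k` trades sensitivity for fewer false alarms, and operator feedback can be used to adjust it per camera or per time of day.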

To handle drift, teams combine unsupervised scoring with periodic human verification and with light-weight supervised updates. For example, a model may operate in unsupervised mode and surface candidate anomalous video segments to operators, who confirm or reject them; confirmed segments are added to a labelled dataset for periodic retraining. For aerial or traffic monitoring, unsupervised anomaly detection helps spot unexpected incidents without prior examples. Also, combining unsupervised scoring with object detection and rule-based checks improves precision in operational systems. For those implementing unsupervised detection in surveillance video, it is essential to include clear escalation rules and privacy-preserving data handling. Overall, unsupervised methods remain an active research direction, especially when paired with contrastive learning and continual adaptation to keep anomaly detection performance stable.
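The verification loop described above can be sketched as a small buffer that stores operator decisions and signals when enough new labels have accumulated for a retraining run. Keeping rejected candidates as normal examples is an assumption added here; the class name, field names, and segment identifiers are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackBuffer:
    """Collects operator verdicts on candidate anomalous segments."""
    retrain_after: int = 3
    labelled: list = field(default_factory=list)
    pending: int = 0

    def record(self, segment_id, confirmed):
        """Store a verdict; return True when a retraining run is due."""
        self.labelled.append((segment_id, 1 if confirmed else 0))
        self.pending += 1
        return self.pending >= self.retrain_after

    def drain(self):
        """Return a copy of the labelled set and reset the pending counter."""
        self.pending = 0
        return list(self.labelled)


buf = FeedbackBuffer(retrain_after=2)
first_due = buf.record("seg-1", confirmed=True)    # operator confirms
second_due = buf.record("seg-2", confirmed=False)  # operator rejects
training_set = buf.drain() if second_due else []
print(first_due, second_due, training_set)
```

The retraining itself is left out on purpose, since it depends on the model and on how the site schedules updates.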

FAQ

What counts as an anomaly in video streams?

An anomaly is any event, motion, or behaviour that departs from normal patterns in the camera view. It can be a person in a restricted area, an object left unattended, or sudden atypical motion.

How does video anomaly detection differ from traditional search?

Video anomaly detection looks directly at pixels and motion rather than relying on metadata or tags. It finds unusual events automatically and enables search by behaviour rather than by pre-set keywords.

Can anomaly detection work in real time?

Yes. Modern systems can process 25–30 frames per second and provide timely alerts for rapid response. Real-time pipelines combine fast spatial networks with compact temporal models to meet latency constraints.

What are the common technical approaches?

Approaches include supervised learning with labelled anomalies, weakly supervised learning with clip-level labels, and unsupervised learning from normal video. Architectures use CNNs, temporal modules, autoencoders, GANs, and contrastive learning.

How accurate is video anomaly detection?

State-of-the-art models report detection rates exceeding 85% on benchmark datasets, and specialized systems can reach higher precision for targeted tasks (see the research review). Performance depends on data quality and environment.

What are deployment best practices?

Combine learned detectors with rule-based checks and human verification to reduce false alarms. Keep models on-premises when compliance requires it, and use feedback loops to update models with confirmed events.

How do privacy and legal concerns affect anomaly systems?

Video analytics can raise privacy issues, especially when mass data collection and search are involved. Deployers must follow local laws and design systems with data minimisation and audit logs (see the legal analysis).

What is weakly supervised learning in this context?

Weakly supervised learning trains on coarse labels such as “this clip contains an anomaly” and uses methods like multiple instance learning to localise anomalous segments. It cuts labelling costs while retaining good performance.

How does visionplatform.ai help with anomalies?

visionplatform.ai turns detections into searchable context and decision support by converting video events into human-readable descriptions and by providing AI agents for verification and actions. This reduces operator workload and speeds up incident handling.

Where can I learn more about practical applications?

Explore use-case pages such as vehicle detection and ANPR, intrusion detection, and forensic search for applied examples in airports and transport hubs.