Context-aware Video Surveillance: Definition and Benefits

Context-aware video surveillance combines visual feeds with additional information to build a richer understanding of a scene. It does this by fusing camera inputs with other sensors and metadata, which lets AI reason about who, when, and where in a way that traditional systems cannot. As a result, operators gain meaningful insight that informs a timely response. The term “context-aware” refers to systems that unify video with situational markers such as time of day, access control logs, and environmental signals. In practice, context-aware video links a footage stream to triggers from a sensor or a dataset so the system can decide whether an alert is actionable or routine. For example, linking entry logs to a camera feed helps determine whether a person is an authorised employee or the event is unauthorized access. Context-aware AI can also tailor thresholds for movement and behavior to a specific site, which reduces false positives and improves operator focus.

Benefits include reduced false alarms, proactive threat detection, and better resource allocation. For instance, research shows that multimodal networks can improve recognition by about 10–20% over single-modality baselines (NIH study). Adding contextual regularization further reduces spurious motion triggers in cluttered scenes. Security teams can therefore triage events faster and allocate personnel where they are truly needed. visionplatform.ai applies these ideas by turning existing cameras and VMS into an operational layer that explains what happened and why. The platform’s on-prem Vision Language Model and agents enable operators to search, verify, and act on incidents without sending video to the cloud. Finally, this context-aware AI approach helps ensure compliance with regional rules while enabling proactive management of routine incidents and escalations.

Traditional Video Surveillance Limitations and False Alarms

Traditional surveillance systems depend mainly on visual feeds. As a result, they struggle to interpret ambiguous scenes and often flag any unexpected motion as an alert, so operators face many false positives. Research indicates that conventional setups can have false alarm rates as high as 70% (MDPI survey). Consequently, teams spend time chasing non-events. This overload reduces situational visibility and increases cognitive load for staff. Without context, simple behaviors such as a crowd gathering for a scheduled event may appear suspicious. The absence of surrounding information thus hinders accurate interpretation of behavior.

Traditional analytics are also often rigid. They rely on predefined rules and black-box models that do not fit site-specific reality. These systems also commonly send footage to the cloud for processing, which complicates compliance and raises costs. In contrast, a context-aware strategy integrates access logs, time of day, and environmental inputs to filter irrelevant alerts. For example, linking shift schedules to camera zones reduces alarms from authorized personnel. Another example is storing movement patterns as a dataset, then using that historical trend to contextualize a current alert. Context-aware deployments can therefore significantly reduce false alarms and allow operators to focus on real threats. visionplatform.ai helps by providing VP Agent Reasoning to verify and explain alarms, which reduces time per alarm and supports faster response.
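
The access-log filtering described above can be illustrated with a minimal Python sketch. It is not visionplatform.ai's implementation; the `AccessEvent` and `CameraAlert` types, the zone names, and the 30-second matching window are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AccessEvent:
    """One badge read from a hypothetical access control log."""
    badge_id: str
    zone: str
    timestamp: datetime
    granted: bool


@dataclass
class CameraAlert:
    """One motion alert raised by a camera covering a zone."""
    zone: str
    timestamp: datetime


def classify_alert(alert, access_log, window=timedelta(seconds=30)):
    """Return "routine" if a granted badge read in the same zone lies
    within `window` of the alert, otherwise "actionable"."""
    for event in access_log:
        same_zone = event.zone == alert.zone
        close_in_time = abs(event.timestamp - alert.timestamp) <= window
        if same_zone and close_in_time and event.granted:
            return "routine"
    return "actionable"


# Example: one granted badge read at the (hypothetical) server room door.
log = [AccessEvent("badge-1", "server-room", datetime(2024, 1, 1, 9, 0, 0), True)]
print(classify_alert(CameraAlert("server-room", datetime(2024, 1, 1, 9, 0, 10)), log))
print(classify_alert(CameraAlert("server-room", datetime(2024, 1, 1, 23, 0, 0)), log))
```

A real deployment would likely also check shift schedules and how many people entered on a single badge read; the sketch only shows the basic idea of matching an alert to a nearby authorized entry.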

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

Multimodal Sensor Integration in Video Surveillance

Multimodal sensor integration combines camera video with RFID, depth cameras, microphones, and environmental sensors to create a richer picture. Fusing these streams lets the system confirm that an observed movement is meaningful. For instance, RFID and access control logs can confirm whether a person in a restricted area is authorized, which helps reduce false alarms. Depth cameras help separate a human silhouette from background clutter, which improves detection precision. Research shows that combining modalities consistently improves activity recognition by 10–20% (NIH paper). A mixed-sensor framework therefore leads to more reliable outputs and fewer wasted operator minutes.
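
As a rough illustration of how corroborating signals can be combined, the Python sketch below applies a simple rule-based fusion. It is a deliberate simplification: the research cited above uses trained multimodal networks, not hand-written rules, and the function name, inputs, and 0.5 threshold are assumptions.

```python
def fuse_observation(camera_conf: float,
                     depth_confirms_person: bool,
                     rfid_authorized: bool,
                     threshold: float = 0.5) -> str:
    """Combine three hypothetical sensor readings into one decision.

    camera_conf: detector confidence (0..1) that a person is in view.
    depth_confirms_person: depth camera sees a human-sized silhouette.
    rfid_authorized: an authorized tag was read in the same area.
    """
    # Weak camera evidence or no depth confirmation: treat as clutter.
    if camera_conf < threshold or not depth_confirms_person:
        return "ignore"
    # A confirmed person with a valid tag is logged but not alarmed.
    if rfid_authorized:
        return "log_only"
    # A confirmed person without authorization is worth an operator's time.
    return "raise_alert"


print(fuse_observation(0.9, True, False))
print(fuse_observation(0.9, True, True))
print(fuse_observation(0.9, False, False))
```

Whether a missing depth confirmation should suppress an alert outright is a site-specific policy choice; a cautious deployment might downgrade it to a low-priority event instead.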

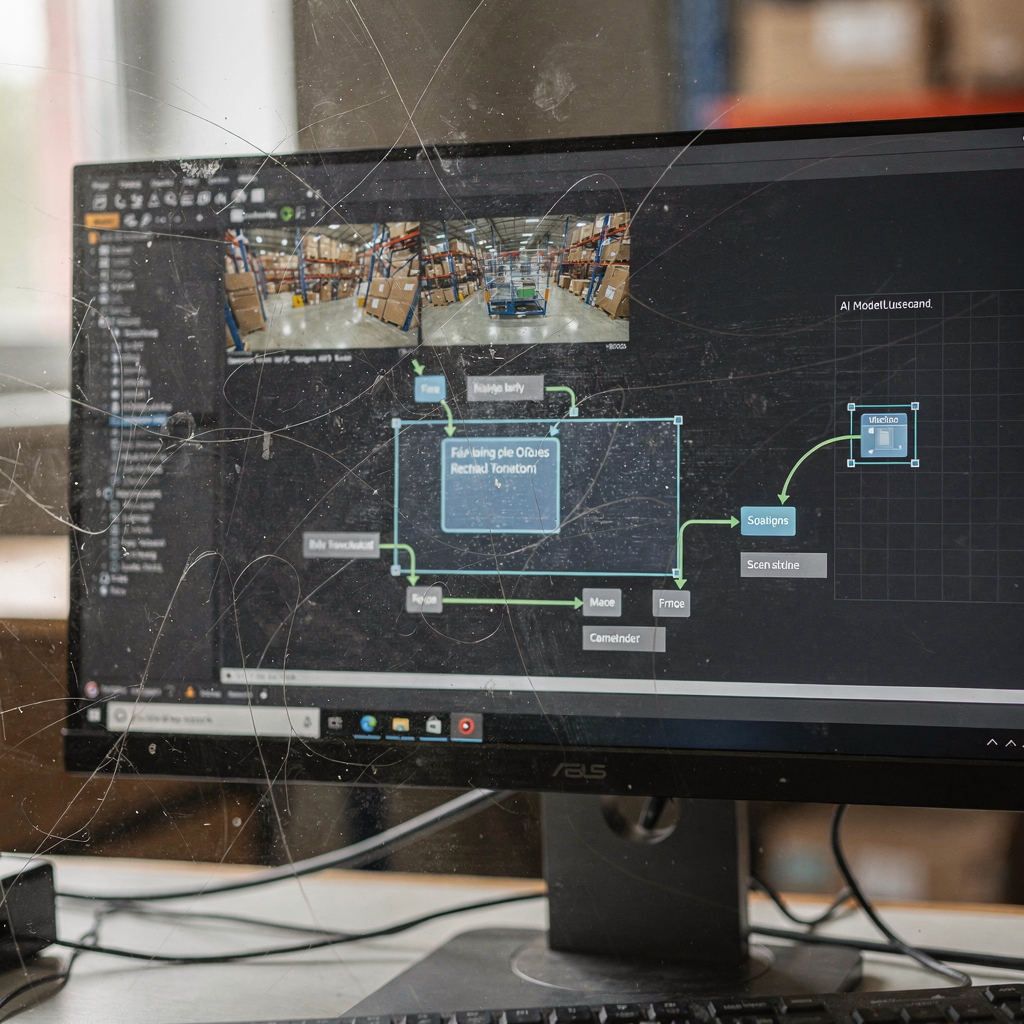

Middleware also plays a key role. Middleware architectures manage data flow across devices while enforcing privacy and compliance. For example, privacy-aware middleware can keep sensitive video and datasets on-prem while exposing structured events for reasoning. visionplatform.ai uses such on-prem approaches to avoid unnecessary cloud management and to offer full control over datasets and models. Middleware also unifies event streams so AI agents can correlate a camera alert with access logs or a temperature alert. This unified approach supports forensic search across recorded footage; see the VP Agent Search capability for natural language queries and retrospective analysis. Finally, integrating sensors lets operations automate low-risk workflows while keeping human oversight for critical incidents, which improves security and operational efficiency across multiple sectors.

Deep Learning and AI for Anomaly Detection

Deep learning and AI transform how systems analyze spatiotemporal patterns. Convolutional neural networks extract spatial features from frames, and recurrent models or temporal convolutions capture motion over time. These models can be trained on curated datasets to recognize normal movement and thus detect anomalies. For example, modern systems have achieved early-warning accuracy above 85% in behavior recognition tasks (PLOS study). AI-powered surveillance can therefore detect irregular behavior sooner and with higher confidence than heuristic rules.

Contextual regularization techniques add environmental priors into learning. These methods penalize unlikely combinations of events, which filters noise from busy scenes. For example, a model can learn that loitering near a secure gate after hours is more suspicious than similar behavior during a scheduled shift change. Context-aware AI in surveillance also enables systems to adapt to time of day and site-specific patterns. visionplatform.ai’s VP Agent Reasoning correlates video, VMS metadata, and access control to explain why an alert matters. Real-time processing is essential for a timely response, and edge and on-prem deployments reduce latency and keep sensitive video inside the organization. In short, deep learning models, when combined with contextual signals, enable more intelligent anomaly detection and actionable explanations that operators can trust.
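
The loitering example can be sketched in Python as an inference-time re-weighting of a raw anomaly score. Note that this is not the training-time contextual regularization described above; it is a simpler stand-in that shows the same intuition. The shift times, the after-hours window, and the 0.5 and 1.5 multipliers are all hypothetical values.

```python
from datetime import datetime

# Hypothetical site schedule: shift changes as (hour, minute).
SHIFT_CHANGES = [(6, 0), (14, 0), (22, 0)]


def context_multiplier(ts: datetime,
                       shift_changes=SHIFT_CHANGES,
                       tolerance_min: int = 15,
                       quiet_start: int = 20,
                       quiet_end: int = 6) -> float:
    """Return a factor that damps or boosts a raw anomaly score."""
    minute_of_day = ts.hour * 60 + ts.minute
    for hour, minute in shift_changes:
        diff = abs(minute_of_day - (hour * 60 + minute))
        diff = min(diff, 1440 - diff)  # handle wrap-around at midnight
        if diff <= tolerance_min:
            return 0.5  # activity near a shift change is expected
    after_hours = ts.hour >= quiet_start or ts.hour < quiet_end
    return 1.5 if after_hours else 1.0


def adjusted_score(raw_score: float, ts: datetime) -> float:
    """Scale the model's raw score by site context, capped at 1.0."""
    return min(1.0, raw_score * context_multiplier(ts))


# The same raw score at a shift change, in the small hours, and at midday.
for hour, minute in [(22, 5), (2, 0), (12, 0)]:
    print(hour, minute, adjusted_score(0.6, datetime(2024, 1, 1, hour, minute)))
```

In this sketch the shift-change rule takes priority over the after-hours rule, so loitering at 22:05 is damped even though it falls inside the quiet window. A learned prior would replace these fixed multipliers with values estimated from historical data.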

Quantitative Impact and Performance Metrics

Key metrics for assessing context-aware systems include reduction in false positives, detection accuracy, processing latency, and operator time per alert. Studies show that context-aware methods can cut false alarms by up to 40% (MDPI survey). Deep learning approaches have also pushed behavior recognition accuracy beyond 85% in many real-world tests (PLOS). The quantitative gains are therefore measurable and operationally relevant.

Combining modalities yields consistent recognition improvements in the 10–20% range (NIH). The trade-off between computational cost and security gains depends on deployment choices. For instance, edge processing reduces bandwidth and latency but may require GPUs or specialized devices. In contrast, cloud solutions can scale but raise privacy and cost concerns. visionplatform.ai addresses this trade-off by offering on-prem VP Agent deployments that keep video and models local while streaming structured events for reasoning. Organizations can also evaluate metrics such as mean time to verify an alert and the percentage of incidents resolved without escalation. These KPIs provide concrete evidence that context-aware frameworks improve security and operational efficiency across sectors. Finally, a measured rollout with clear dataset validation and model audits ensures that gains persist during expansion and that the system remains aligned with policy.
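
The KPIs named above can be computed from a simple log of reviewed alerts. The Python sketch below assumes a hypothetical `AlertRecord` structure filled in by operators; the field names are illustrative and the sample values are made up for demonstration, not measured results.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class AlertRecord:
    """One reviewed alert (hypothetical schema)."""
    true_incident: bool       # operator confirmed a real incident
    seconds_to_verify: float  # time from alert to operator verdict
    escalated: bool           # alert was handed to a supervisor or responder


def summarize(records):
    """Return the share of false alerts, mean time to verify, and the
    share of alerts resolved without escalation."""
    if not records:
        raise ValueError("no alert records to summarize")
    total = len(records)
    false_alerts = sum(1 for r in records if not r.true_incident)
    unescalated = sum(1 for r in records if not r.escalated)
    return {
        "false_alert_share": false_alerts / total,
        "mean_time_to_verify_s": mean(r.seconds_to_verify for r in records),
        "resolved_without_escalation": unescalated / total,
    }


# Made-up sample of four reviewed alerts.
sample = [
    AlertRecord(False, 10.0, False),
    AlertRecord(False, 20.0, False),
    AlertRecord(False, 30.0, False),
    AlertRecord(True, 40.0, True),
]
print(summarize(sample))
```

The sketch reports the share of alerts that turned out to be false, which is what operators usually mean by a false alarm rate. A strict false-positive rate would also need a count of the quiet periods in which no alert was raised. Comparing these figures before and after enabling contextual filtering gives a like-for-like view of the improvement.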

Ethical and Privacy Considerations in Context-aware Surveillance

Ethical questions are central to widespread deployment. Experts warn about surveillance overreach and potential misuse of personal information. For example, a review noted that “the absence of universally applicable solutions to address privacy concerns remains a critical challenge” (ScienceDirect). Any deployment must therefore include strong privacy safeguards. In practice, that means data minimisation, clear retention policies, and transparent audits. Access control and authorization rules should also be enforced so that only permitted personnel can view sensitive footage. visionplatform.ai’s on-prem architecture supports these requirements by keeping video inside the organization and by providing auditable logs to support compliance with regulations like the EU AI Act.

Ethical frameworks must also balance safety and civil liberties. For instance, AI-driven surveillance should avoid biased outcomes by validating models on representative datasets. Organisations should inform users and stakeholders about monitoring practices and provide channels for redress. Technical measures such as anonymisation, selective processing, and purpose limitation further reduce privacy impact. Finally, governance must define when an AI agent can act autonomously and when human approval is required. By designing with privacy-first principles, teams can ensure that context-aware systems enhance security while respecting rights and maintaining public trust.

FAQ

What is context-aware video surveillance?

Context-aware video surveillance links camera footage with additional information like access logs, time of day, and environmental sensors. This fusion helps the system decide whether an event is truly suspicious or routine.

How does multimodal integration improve accuracy?

Combining modalities such as RFID and depth cameras provides corroborating signals that reduce ambiguity. As a result, activity recognition improves and false positives decline.

Can these systems operate without the cloud?

Yes. On-prem processing keeps video and models inside the organization, which helps meet privacy and compliance requirements. visionplatform.ai offers on-prem solutions to avoid unnecessary cloud transfer.

What metrics should I track after deployment?

Track false-positive rates, detection accuracy, processing latency, and mean time to verify an alert. These KPIs show how the system affects operational efficiency.

Do context-aware methods actually reduce false alarms?

Evidence shows notable reductions; some studies report up to a 40% decrease in false alarms (MDPI). That leads to fewer wasted responses and clearer priorities for teams.

Are there risks of biased detections?

Yes. Models trained on limited datasets can reflect biases, so testing on representative data and auditing models is essential. Ongoing validation helps prevent unfair outcomes.

What is a practical use case for context-aware AI?

One use case is correlating access control logs with camera events to detect unauthorized access. This reduces alerts from authorized personnel and highlights true threats.

How do AI agents assist operators?

AI agents can verify alarms, provide explanations, and recommend actions. For example, VP Agent Reasoning correlates video and metadata to advise operators on next steps.

What privacy safeguards should be implemented?

Implement data minimisation, retention limits, strict access control, and audit trails. Transparent policies and user notice support ethical operation.

Where can I learn more about specialized detections?

For focused solutions, see resources on people detection, loitering detection, and forensic search at visionplatform.ai. For example, explore people detection in airports to understand tailored applications.