AI video analytics in meat processing

AI video analytics brings cameras, computer vision and machine learning to cutting lines in practical ways. AI systems watch conveyors and cutting stations and run video analysis to identify anatomical features, color shifts and defects. In butchery, this approach helps achieve precise cuts on pork, beef and poultry, and it supports consistent yields that reduce waste. A vision system that combines high-resolution cameras with on-device inference can flag off-spec product and stream structured events for downstream process control.

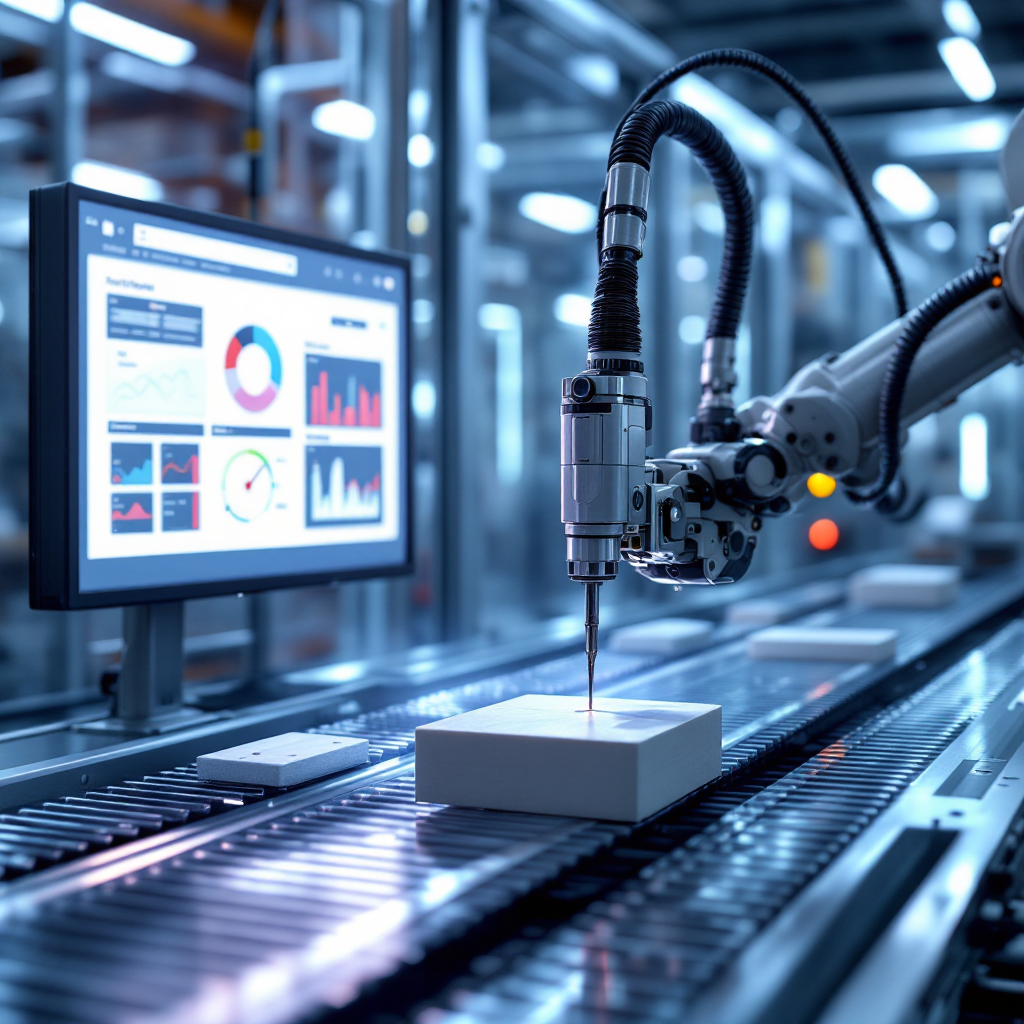

In practice, AI inspects each carcass at line speed, locates bone landmarks and maps muscle edges to guide deboning and portioning. These detections feed robotic actuators and operator displays, and they help deliver the consistent product attributes that buyers demand. Laboratories and plant teams use the same feeds to track meat quality over time and to compare batches, and that data supports traceability and quality assurance programs.

Using AI here improves throughput and decreases variability on the line. For example, research shows AI-driven classification and cut guidance can increase cutting accuracy by up to 30% (source). Systems also spot color and texture changes that correlate with spoilage risk or inconsistent process control. Cameras act as a sensor layer across the floor so teams can visualise trends rather than rely on sampling alone. That shift matters for meat and poultry managers who must balance yield, product quality and regulatory compliance.
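To make "color shifts" concrete, here is a minimal Python sketch that compares each item's measured color with a reference using the CIE76 color difference (ΔE*ab, the Euclidean distance in CIELAB space). It assumes an upstream, calibrated camera step already reports an average L*a*b* value per item; the reference values and the threshold of 5.0 are hypothetical placeholders, not validated limits for any product.

```python
import math
from dataclasses import dataclass

@dataclass
class LabColor:
    L: float  # lightness, 0-100
    a: float  # green (-) to red (+)
    b: float  # blue (-) to yellow (+)

def delta_e_76(c1: LabColor, c2: LabColor) -> float:
    """CIE76 color difference: Euclidean distance in CIELAB space."""
    return math.sqrt((c1.L - c2.L) ** 2 + (c1.a - c2.a) ** 2 + (c1.b - c2.b) ** 2)

def flag_color_shift(samples, reference, threshold=5.0):
    """Return indices of samples whose color differs from the reference by more than threshold."""
    return [i for i, s in enumerate(samples) if delta_e_76(s, reference) > threshold]

# Hypothetical reference color for a fresh cut and three measured items
reference = LabColor(L=42.0, a=18.0, b=8.0)
samples = [
    LabColor(41.5, 17.6, 8.2),
    LabColor(44.0, 11.0, 10.0),  # noticeably less red than the reference
    LabColor(42.3, 18.4, 7.9),
]
print(flag_color_shift(samples, reference))  # [1]
```

In practice the reference and threshold would come from a plant's own product specifications, and a rising share of flagged items over a shift is the kind of trend worth charting.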

Companies such as Visionplatform.ai focus on turning existing CCTV into an operational sensor network. Their platform lets plants reuse VMS feeds to detect people, PPE and custom objects and to publish events to BI and OT systems. This approach keeps training and inference on-site, and it reduces data movement while supporting GDPR and EU AI Act readiness. As a result, plants keep control of their models and their video, and they turn passive cameras into active sensors that catch quality issues earlier.

Role of artificial intelligence and analytics in AI-powered cutting lines

Training deep networks on annotated images lets AI learn where to cut, how to trim and how to sort. Engineers label thousands of frames to teach AI models to recognise muscle, fat and bone, and they validate outputs against human experts. Training happens on secure datasets, and then models run on edge devices, where low-latency decisions help avoid line stoppages. Labelling quality strongly affects real-world performance, so good annotation practice reduces false detections.
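One simple way to monitor labelling quality is to have two annotators label the same frames and compare their boxes with intersection over union (IoU). The sketch below assumes one box per object per frame and uses a hypothetical agreement cut-off of 0.7; frames that fall below it go back for review before they enter the training set.

```python
from typing import NamedTuple

class Box(NamedTuple):
    x1: float
    y1: float
    x2: float
    y2: float

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a.x1, b.x1), max(a.y1, b.y1)
    ix2, iy2 = min(a.x2, b.x2), min(a.y2, b.y2)
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a.x2 - a.x1) * (a.y2 - a.y1)
    area_b = (b.x2 - b.x1) * (b.y2 - b.y1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def frames_to_review(labels_a, labels_b, min_iou=0.7):
    """Return IDs of frames where the two annotators' boxes disagree."""
    shared = labels_a.keys() & labels_b.keys()
    return sorted(fid for fid in shared if iou(labels_a[fid], labels_b[fid]) < min_iou)

# Hypothetical labels: one "bone" box per frame from each annotator
annotator_a = {"f001": Box(10, 10, 50, 50), "f002": Box(100, 40, 160, 90)}
annotator_b = {"f001": Box(12, 11, 51, 49), "f002": Box(130, 60, 190, 120)}
print(frames_to_review(annotator_a, annotator_b))  # ['f002']
```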

AI-powered robots use those detections to guide blades and end-effectors for exact portioning. Robotic systems use feedback loops to adjust in real time, and they can correct for variability in animal size or positioning. That means less rework, fewer rejected packs and better yield per carcass. A robotics-assisted pork line reported a roughly 25% throughput increase with integrated automation and vision guidance (source).
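The feedback loop can be illustrated with a proportional controller, one common and deliberately simple choice. In this sketch the vision system reports how far the blade sits from a detected landmark, and each step moves the blade a fraction of that error, clamped to a maximum step. The gain, step limit and millimetre positions are hypothetical; production robot controllers add filtering, kinematics and safety interlocks.

```python
def correct_blade_offset(current_mm, error_mm, gain=0.5, max_step_mm=2.0):
    """One proportional-control step: move against the measured error,
    clamped so a single noisy detection cannot cause a large jump."""
    step = -gain * error_mm
    step = max(-max_step_mm, min(max_step_mm, step))
    return current_mm + step

# Hypothetical: the vision system places the target landmark at 3.0 mm
landmark_mm = 3.0
blade_mm = 0.0
for _ in range(10):
    error_mm = blade_mm - landmark_mm  # positive means the blade is past the landmark
    blade_mm = correct_blade_offset(blade_mm, error_mm)
print(round(blade_mm, 3))  # 2.997
```

With a gain of 0.5 the remaining error halves on every frame, which is why the blade converges smoothly instead of overshooting.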

Analytics also plays a central role. Plant dashboards collect events from cameras and other sensors to map OEE and identify bottlenecks. KPM analytics and operator-facing KPIs can reveal cycle-time variance, highlight maintenance needs and improve labor efficiency. When a production line shows repeated cuts at the wrong angle, analytics helps isolate whether the cause is model drift, sensor misalignment or gaps in operator training. Teams then adjust models, retrain on new frames and redeploy to edge devices without large cloud transfers.
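OEE is conventionally computed as availability × performance × quality. The sketch below applies that standard decomposition to hypothetical shift figures; in a real deployment the counts and downtime would come from camera events, scales and PLC logs rather than constants.

```python
def oee(planned_min, downtime_min, ideal_cycle_s, total_count, good_count):
    """Return (availability, performance, quality, oee) as fractions."""
    run_min = planned_min - downtime_min
    availability = run_min / planned_min                          # share of planned time actually running
    performance = (ideal_cycle_s * total_count) / (run_min * 60)  # actual rate vs. ideal rate
    quality = good_count / total_count                            # share of units passing inspection
    return availability, performance, quality, availability * performance * quality

# Hypothetical shift: 8 h planned, 60 min of stoppages, 2.0 s ideal cycle per unit
a, p, q, overall = oee(planned_min=480, downtime_min=60, ideal_cycle_s=2.0,
                       total_count=10080, good_count=9576)
print(f"Availability {a:.1%}, Performance {p:.1%}, Quality {q:.1%}, OEE {overall:.1%}")
```

Splitting OEE into its three factors is what lets a team see whether a drop comes from stoppages, slow cycles or rejects.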

These tightly coupled AI systems and process control tools make the production line more resilient. Low-latency inference and clear feedback loops reduce the time between detection and correction, and predictive signals can schedule maintenance before a stoppage. As one review observes, the convergence of sensors, robotics and digital twins is moving the industry toward smarter, more adaptive operations (source).

Leveraging real-time quality control to improve food safety

Continuous visual inspection helps plants detect contaminants, defects and foreign objects before packaging. Cameras combined with AI can spot pieces of packaging film, bone splinters and other foreign materials that manual checks miss. Such a vision inspection system runs alongside metal detectors and X-ray inspection to provide layered protection. When an anomaly appears, the system issues a real-time alert so staff can remove the item quickly and trace the affected batch.
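A real-time alert is most useful when it already names the affected batch. This sketch assumes a hypothetical `Detection` record from the vision model and a sorted log of batch start times; it drops low-confidence detections (the 0.6 cut-off is an assumption) and uses binary search to find the batch that was on the line at the detection time.

```python
import bisect
from dataclasses import dataclass

@dataclass
class Detection:
    timestamp: float   # seconds since shift start (hypothetical time base)
    label: str         # e.g. "film" or "bone_fragment"
    confidence: float  # model score in [0, 1]

# Hypothetical batch log: (start time in seconds, batch ID), sorted by start time
BATCH_STARTS = [(0.0, "B-101"), (1800.0, "B-102"), (3600.0, "B-103")]

def batch_at(ts):
    """Return the batch that started most recently at or before ts, or None."""
    starts = [start for start, _ in BATCH_STARTS]
    idx = bisect.bisect_right(starts, ts) - 1
    return BATCH_STARTS[idx][1] if idx >= 0 else None

def make_alert(det, min_conf=0.6):
    """Build an alert dict for a confident detection; ignore weak ones."""
    if det.confidence < min_conf:
        return None
    return {
        "type": "foreign_object",
        "label": det.label,
        "confidence": det.confidence,
        "timestamp": det.timestamp,
        "batch": batch_at(det.timestamp),
    }

print(make_alert(Detection(timestamp=2412.5, label="film", confidence=0.91)))
```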

Video feeds also support shelf-life estimation and spoilage prediction through texture and color trends. Predictive AI models that analyse temporal video patterns can estimate remaining shelf-life, though broad adoption faces data quality hurdles (source). When integrated with traceability records, these signals make product recalls more targeted and reduce unnecessary waste. Better scoring of product attributes also leads to more accurate packaging labels and a clearer view of the supply chain.
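As a deliberately simplified illustration of trend-based shelf-life estimation, the sketch below fits a straight line to a daily redness score (here the CIELAB a* value) and extrapolates when it would cross a threshold. The linear model, the choice of a* as the indicator and the threshold of 12.0 are all assumptions for illustration; the predictive models discussed in the literature are considerably richer and need validated data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def days_until_threshold(days, scores, threshold):
    """Extrapolate the fitted line to estimate days left before scores fall to threshold."""
    slope, intercept = fit_line(days, scores)
    if slope >= 0:
        return None  # no downward trend, so no crossing can be predicted
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])

# Hypothetical daily a* (redness) readings for one product batch
days = [0, 1, 2, 3]
redness = [20.0, 19.2, 17.9, 17.1]
print(days_until_threshold(days, redness, threshold=12.0))
```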

Quality assurance workflows benefit from rapid alerts and auditable logs. Real-time checks improve quality and consistency, and continuous inspection reduces consumer complaints and regulatory findings. Plants that need to improve food safety can combine camera systems, hyperspectral imaging and temperature sensors to catch subtle quality issues early. That mix of sensor types strengthens compliance and supports HACCP plans for meat and poultry products.
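One common way to make inspection logs tamper-evident is a hash chain, where each entry stores the SHA-256 hash of its content plus the previous entry's hash. This is a sketch of the general technique, not a description of any specific product; the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(event, prev_hash):
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_entry(log, event):
    """Append an event, chaining it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry

def verify(log):
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"ts": "2024-05-02T10:15:00Z", "check": "foreign_object", "result": "reject"})
append_entry(audit_log, {"ts": "2024-05-02T10:16:30Z", "check": "foreign_object", "result": "pass"})
print(verify(audit_log))                  # True
audit_log[0]["event"]["result"] = "pass"  # simulate an after-the-fact edit
print(verify(audit_log))                  # False
```

A hash chain only makes tampering detectable; the log still needs to be stored and backed up under the plant's own retention and access controls.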

AI also helps maintain hygiene standards through PPE and behaviour monitoring. For example, Visionplatform.ai’s PPE detection and people-counting capabilities, adapted from airport solutions, translate well to meat and poultry processing, where PPE compliance and shift headcounts matter for traceability and labour management (PPE detection) (people counting). With these tools, plants can reduce quality issues and respond to foreign objects faster.
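A basic PPE compliance check can be built on top of person and PPE detections by assigning each PPE box to the person box that contains its center. The sketch assumes a detector already returns labelled boxes; the labels `hairnet` and `gloves` and the center-in-box rule are hypothetical choices for illustration and do not describe how Visionplatform.ai implements PPE detection.

```python
from typing import NamedTuple

class Box(NamedTuple):
    x1: float
    y1: float
    x2: float
    y2: float

def center_inside(inner: Box, outer: Box) -> bool:
    """True if the center of `inner` lies within `outer`."""
    cx, cy = (inner.x1 + inner.x2) / 2, (inner.y1 + inner.y2) / 2
    return outer.x1 <= cx <= outer.x2 and outer.y1 <= cy <= outer.y2

def non_compliant_people(people, ppe_items, required=("hairnet", "gloves")):
    """Return (person_id, missing_items) for each person lacking required PPE."""
    flagged = []
    for pid, person_box in people.items():
        worn = {label for label, box in ppe_items if center_inside(box, person_box)}
        missing = [item for item in required if item not in worn]
        if missing:
            flagged.append((pid, missing))
    return flagged

# Hypothetical detections from one frame (pixel coordinates)
people = {"p1": Box(0, 0, 100, 300), "p2": Box(200, 0, 300, 300)}
ppe = [("hairnet", Box(30, 0, 70, 30)),
       ("gloves", Box(10, 150, 30, 180)),
       ("hairnet", Box(230, 5, 270, 35))]
print(non_compliant_people(people, ppe))  # [('p2', ['gloves'])]
```

The center-in-box rule is crude when workers stand close together; overlapping people would need a stricter assignment, for example matching each item to only one person.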

How robotic butchers automate and boost operational efficiency

Robotic butchers guided by AI combine speed with repeatable precision. Robots handle repetitive tasks such as trimming, portioning and deboning, and they work in concert with human teams on more complex operations. This combination increases throughput and saves labour time. Case studies show automation and robotics can lift throughput and yield by 25–30% in some lines (source).

Plants use robotics to automate the dull, dirty and dangerous work so staff can focus on inspection, quality assurance and complex tasks. That shift reduces workplace injuries and improves labor efficiency. Robotic butchers also deliver consistent product sizing across shifts, which improves product quality and raises buyer satisfaction.

Automation reduces dependence on seasonal labour and helps manage labour shortages. When staff are scarce, robots keep rates steady and avoid costly downtime. Still, the human role remains central: operators train and supervise models, tune the process and handle exceptions. AI helps by delivering clear, actionable detections and by feeding analytics that show where retraining or mechanical adjustments are needed. That transparency helps teams accept robotic partners sooner.

Operational efficiency gains extend beyond the line. Better cuts lower trim loss and improve OEE. Predictive maintenance schedules based on vision and vibration sensors can prevent equipment failures and reduce stoppages. In short, AI-driven robotic workstreams improve efficiency and accuracy while freeing operators from repetitive tasks for higher-value work.
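On the vibration side, a minimal predictive-maintenance rule compares the RMS amplitude of a recent sensor window with a baseline recorded while the machine was known to be healthy. The 1.5× ratio and the sample values below are hypothetical; real schemes typically also track frequency content and trends over time.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a window of vibration samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def maintenance_flag(window, baseline_rms, ratio=1.5):
    """Flag the machine when current RMS exceeds `ratio` times the healthy baseline."""
    return rms(window) > ratio * baseline_rms

# Hypothetical accelerometer readings (arbitrary units)
healthy = [0.10, -0.12, 0.11, -0.09, 0.10, -0.11]
current = [0.22, -0.25, 0.21, -0.24, 0.23, -0.20]
baseline = rms(healthy)
print(maintenance_flag(current, baseline))  # True
```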

Camera systems in abattoirs and poultry processors

Strategic camera placement gives full line coverage in the abattoir and in poultry halls. High-resolution cameras mounted at multiple angles capture the carcass and allow algorithms to infer bone structure, muscle density and fat distribution. These cameras act as a sensor network that feeds vision systems and supports process control. For many meat processing plants, retrofitting existing CCTV provides a cost-effective path to better visibility.

Vision systems that combine standard RGB feeds with hyperspectral imaging or depth sensors can measure subtle product attributes that matter for sorting and grading. Those attributes help determine portion weights and where to cut for optimal value. Camera systems paired with edge devices deliver low-latency decisions so robots and operators get immediate feedback. That live feedback loop reduces rework and helps maintain consistent carcass yields.
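To show how measured attributes translate into cut positions, the sketch below walks along a product, converts per-slice cross-sectional area into weight and places a cut each time a portion reaches its target weight. It assumes a depth or 3D sensor supplies the area of each fixed-thickness slice and that density is constant (about 1.05 g/cm³ here, a hypothetical value); real portioning systems must account for fat and bone, which have different densities.

```python
def cut_positions(areas_cm2, slice_mm, density_g_cm3, target_g):
    """Return cut positions (mm from the leading edge) so each portion reaches target_g.

    Cuts fall on slice boundaries, so each portion slightly exceeds the target.
    """
    cuts = []
    portion_g = 0.0
    slice_cm = slice_mm / 10.0
    for i, area in enumerate(areas_cm2):
        portion_g += area * slice_cm * density_g_cm3  # weight of this slice
        if portion_g >= target_g:
            cuts.append((i + 1) * slice_mm)  # cut at the end of this slice
            portion_g = 0.0                  # start the next portion
    return cuts

# Hypothetical loin profile: 20 slices of 5 mm, each with a 50 cm² cross-section
profile = [50.0] * 20
print(cut_positions(profile, slice_mm=5, density_g_cm3=1.05, target_g=150.0))  # [30, 60, 90]
```

Weight left after the last cut (here the final two slices) would typically go to trim or a smaller pack.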

Integration with conveyors, weighing scales and PLCs creates a synchronized processing line where each camera-triggered event can adjust speed or hold items for inspection. For example, a camera can spot a bone fragment, and the system can trigger a nearby diverter to remove the unit. This approach supports deboning stations and automated sorting for cut specification. It also supports traceability: recorded events and timestamps provide audit trails for regulators and customers.
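Timing a diverter is mostly arithmetic: the item reaches the diverter after distance ÷ belt speed seconds, and the command must be sent early enough to cover processing and actuator latency. The distances, speed and 0.05 s latency below are hypothetical.

```python
def diverter_delay_s(camera_to_diverter_m, belt_speed_m_s, latency_s=0.0):
    """Seconds to wait after a detection before firing the diverter.

    latency_s lumps together inference, network and actuator response time.
    """
    if belt_speed_m_s <= 0:
        raise ValueError("belt speed must be positive")
    delay = camera_to_diverter_m / belt_speed_m_s - latency_s
    if delay < 0:
        raise ValueError("diverter is too close to the camera for this speed and latency")
    return delay

# Hypothetical layout: diverter 1.2 m downstream, belt at 0.4 m/s, 50 ms total latency
print(round(diverter_delay_s(1.2, 0.4, latency_s=0.05), 3))  # 2.95
```

The function fails loudly when the geometry cannot work rather than silently firing late, because a late diverter lets the defective item through.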

For meat and poultry processors, combining camera networks with process-anomaly detection tools helps identify upstream issues before they propagate. Visionplatform.ai’s ability to stream events via MQTT into BI and SCADA tools lets plants use camera-derived data the same way they use scale or temperature data (process anomaly detection). That integration increases operational efficiency and gives teams a single source of truth for on-site decisions.
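The sketch below shows what a camera-derived event might look like before it is published over MQTT: a hierarchical topic plus a JSON payload. The topic layout and field names are hypothetical and are not Visionplatform.ai's actual schema; the code only builds the message, and an MQTT client library (for example paho-mqtt, whose client provides a `publish(topic, payload)` method) would handle delivery to the broker.

```python
import json
from datetime import datetime, timezone

def build_event(site, line, camera_id, event_type, label, confidence, ts=None):
    """Return (topic, JSON payload) for one camera-derived event."""
    if ts is None:
        ts = datetime.now(timezone.utc)
    topic = f"{site}/{line}/{camera_id}/{event_type}"  # hypothetical topic hierarchy
    payload = json.dumps({
        "timestamp": ts.isoformat(),
        "camera": camera_id,
        "event": event_type,
        "label": label,
        "confidence": round(confidence, 3),
    })
    return topic, payload

topic, payload = build_event("plant-a", "line-3", "cam-07", "quality_reject",
                             "bone_fragment", 0.9342,
                             ts=datetime(2024, 5, 2, 10, 15, tzinfo=timezone.utc))
print(topic)    # plant-a/line-3/cam-07/quality_reject
print(payload)
```

Keeping the topic hierarchical lets BI or SCADA consumers subscribe with MQTT wildcards, for example `plant-a/line-3/+/quality_reject` for every reject on one line.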

Future of AI in the meat industry: animal welfare and next steps to improve food safety

Predictive analytics and IIoT technologies will offer greater supply-chain transparency and better animal welfare monitoring. Sensors and cameras can detect stress indicators and movement patterns that correlate with handling issues, and these signals enable corrective actions before product quality declines. Linking such data to farm records also supports provenance claims and welfare certifications across the processing sector.
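As an illustration of a movement-pattern signal, this sketch converts a tracked animal's positions into per-frame speeds and reports the fraction of frames above a speed threshold. It assumes an upstream tracker already outputs calibrated positions in metres; the 2.0 m/s threshold is hypothetical, and this is not a validated welfare indicator, only the kind of raw signal a team might review alongside handling records.

```python
import math

def speeds(track, fps):
    """Per-frame speed in m/s from consecutive (x, y) positions in metres."""
    return [math.dist(p, q) * fps for p, q in zip(track, track[1:])]

def high_activity_fraction(track, fps, speed_threshold):
    """Share of frame-to-frame steps faster than speed_threshold (m/s)."""
    s = speeds(track, fps)
    return sum(v > speed_threshold for v in s) / len(s) if s else 0.0

# Hypothetical 10 fps track of one animal in a holding pen
track = [(0.0, 0.0), (0.05, 0.0), (0.10, 0.0), (0.40, 0.0), (0.70, 0.0)]
print(high_activity_fraction(track, fps=10, speed_threshold=2.0))  # 0.5
```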

Digital twins and adaptive cutting lines are on the roadmap for Industry 4.0 adoption. These models allow operators to simulate adjustments, to test new cut schemes and to forecast the impact on yield and product attributes. Adaptive lines will tweak blade paths and robot speeds based on live camera input, and they will reduce variability in finished packs. As systems mature, plants should expect fewer quality issues and better alignment between orders and output.

Challenges remain: data quality, regulatory compliance and workforce transition require attention. Plants need curated, annotated datasets that reflect their mix of breeds, sizes and products. Transparent governance for model ownership and on-site training eases compliance with the EU AI Act. Predictive maintenance and edge deployment reduce data movement and support auditable practices. Finally, training programs help workers move from manual cutting to supervisory and model-tuning roles, which eases labor shortages over time.

In short, AI can increase traceability, improve food safety and support animal welfare with measurable signals. As one review puts it, AI’s ability to interpret complex visual data in real time is reshaping how the sector approaches cutting and quality (quote). Continued investment in sensors, models and on-site control will define the next decade of meat processing and will help the industry meet higher standards for product quality and welfare.

FAQ

What is AI video analytics and how does it apply to butchery?

AI video analytics uses cameras plus machine learning to interpret visual feeds and to produce actionable events. In butchery it identifies anatomy, flags defects and guides automated cutters so teams get consistent yields and fewer rejects.

Can AI improve food safety in meat plants?

Yes. AI detects contaminants and foreign objects and supports traceability through recorded logs. It also enables faster response through real-time alerts and helps improve food safety by reducing missed defects.

How do robotic butchers work with human staff?

Robots handle repetitive tasks such as trimming and portioning, while humans manage inspection, exceptions and model training. This pairing reduces injuries and boosts labor efficiency, freeing skilled workers for higher-value tasks.

Are existing CCTV systems useful for AI upgrades?

Many plants reuse VMS footage for analytics rather than replacing cameras. Platforms that run on-site let teams turn existing CCTV into operational sensors without sending data off-site.

What accuracy improvements are typical with AI-guided cutting?

Studies report gains of up to 30% in cutting accuracy and measurable yield improvements in automated lines. Results depend on dataset quality, camera setup and integration with robotics.

How does AI support animal welfare?

Sensors and cameras track movement and stress indicators that correlate with handling problems. That data helps managers adjust handling procedures and document welfare metrics across the supply chain.

Does AI require cloud processing?

Not necessarily. Edge devices and on-site servers enable low-latency inference and keep data local for GDPR and EU AI Act compliance. That approach also reduces bandwidth and supports predictable operations.

What are the main barriers to adoption?

Key barriers include data annotation, model ownership and workforce transition. Integration with legacy equipment and ensuring robust operation in noisy production environments also require investment.

How do AI models handle variability in carcass size?

Models trained on diverse, annotated datasets adapt to variability and guide robots to make dynamic adjustments. Feedback loops and periodic retraining keep performance stable as input characteristics change.

Where can I learn more about on-site PPE and people counting for plants?

Solutions that adapt people detection and PPE monitoring from other industries can help plants enforce safety and manage headcounts. For examples of such capabilities, see Visionplatform.ai’s PPE detection and people-counting pages for implementation ideas (PPE detection) and (people counting).