AI video search: Fundamentals of Event-based Video analytics in Video surveillance

Event-based CCTV search changes how teams handle large volumes of footage. AI video search indexes clips by events, not by time, which replaces slow manual review with fast, targeted retrieval. Traditional video review forced operators to watch hours of footage. In contrast, event-based systems extract meaning, tag incidents, and make recorded video searchable in seconds. The result transforms response and case management for safety and security operations. AI systems also use deep learning models to create structured descriptions of people or objects. For example, modern object detection and vision AI can label object classes, identify people or objects, and flag specific events without human review. As a result, investigators can find relevant clips almost instantly, with less risk of human error.

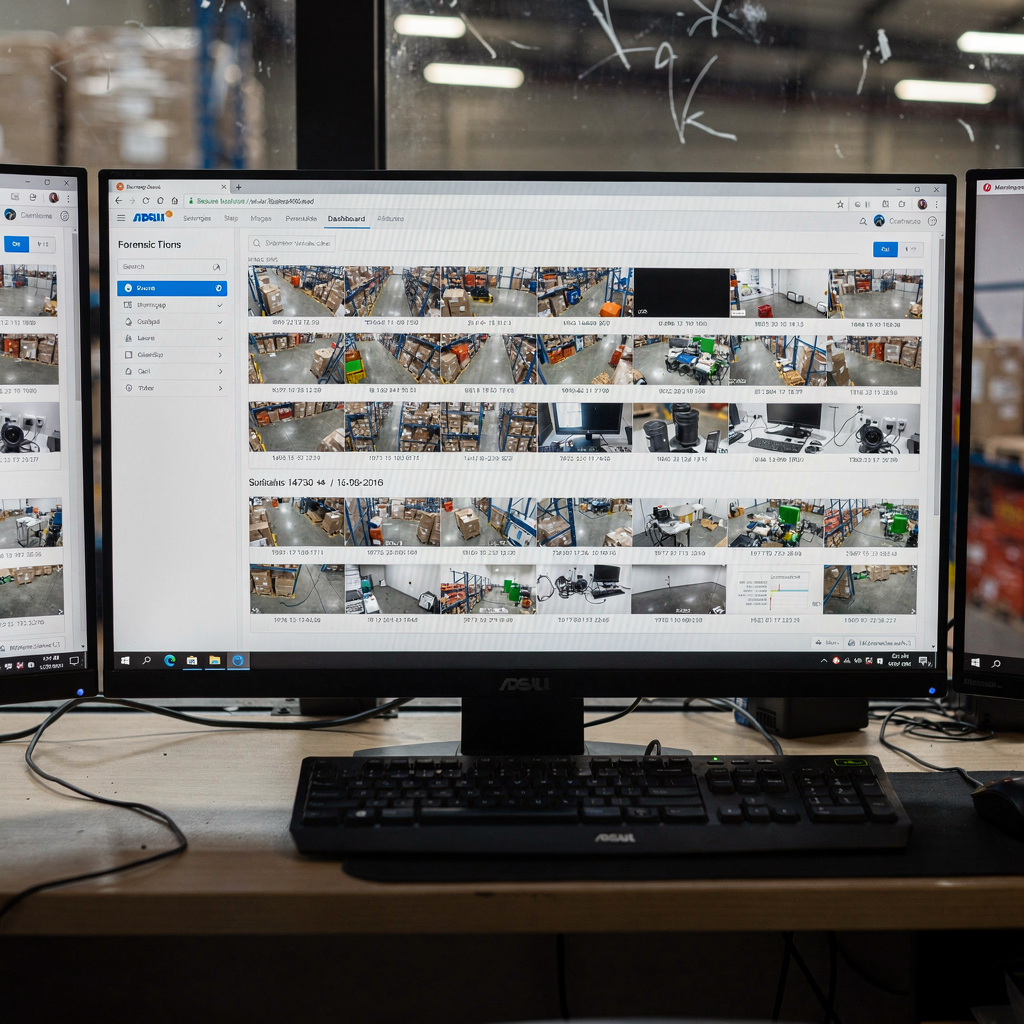

The core components include detection engines, metadata extractors, indexing services, and a search interface. AI handles detection and description. The metadata then stores attributes like time, location, object_class, and behavior. This metadata makes video data searchable and allows users to query the archive in natural language. Using AI smart search, operators can type a plain-English query and get precise hits across camera streams. This AI-powered search capability reduces time per alarm and speeds up inspections. For airports and enterprise video deployments, it is common to pair AI with video management systems for reliable operation. visionplatform.ai builds on this principle by adding a reasoning layer to turn detections into operational insights and decision support.
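As a rough illustration of how stored attributes make an archive queryable, the sketch below models one event record and a simple attribute filter. The field names follow the attributes listed above; the `Event` class, the `search` helper, and the sample values are assumptions made for this example, not part of any specific product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """One detected event; fields mirror the metadata attributes named in the text."""
    time: datetime
    location: str       # hypothetical camera or zone label
    object_class: str
    behavior: str


def search(events, **criteria):
    """Return the events whose attributes equal every given criterion."""
    return [
        e for e in events
        if all(getattr(e, key) == value for key, value in criteria.items())
    ]


# Hypothetical sample archive.
events = [
    Event(datetime(2024, 1, 5, 22, 14), "gate-3", "person", "loitering"),
    Event(datetime(2024, 1, 5, 22, 20), "gate-3", "vehicle", "stopped"),
    Event(datetime(2024, 1, 6, 3, 2), "dock-1", "person", "walking"),
]

hits = search(events, object_class="person", behavior="loitering")
```

A natural language front end would translate an operator's query into criteria like these before running the filter.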

Event-based indexing accelerates security operations across use cases. For security teams, it powers quick threat verification and exportable evidence for law enforcement. For operations, it supports access control audits and process anomaly detection. For traffic monitoring, license plate recognition and vehicle tracking reduce investigation time. Studies show that intelligent systems can improve detection rates but still struggle in night scenes; for instance, one traffic monitoring system reported 56.7% accuracy in difficult night conditions, suggesting a need for synthetic data and improved models. Overall, event-based search transforms video into searchable, actionable intelligence while cutting alarm fatigue and making large amounts of recorded footage useful again.

Integrating Metadata and Object detection Across cameras in VMS

Metadata tagging and object detection work together to index clips so operators can quickly find specific events. First, object detection identifies people, vehicles, and other targets. Next, metadata records attributes such as color, direction, behavior, and license plate text. Then the VMS indexes those tags so users can query the archive. In practice, a modern video management system (VMS) setup streams events from camera analytics and stores compact metadata instead of raw footage. This approach scales better and lowers costs while preserving the ability to reopen raw footage when needed. Forensic search benefits because only relevant clips are pulled for detailed review and evidence export.
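One common way to index tags so that queries stay fast is an inverted index, which maps each attribute value to the clips that carry it. The sketch below is a minimal in-memory version; the `TagIndex` class, clip IDs, and tag values are hypothetical, and a production VMS would typically use a database or search engine instead.

```python
from collections import defaultdict


class TagIndex:
    """Inverted index: (attribute, value) -> set of clip IDs."""

    def __init__(self):
        self._postings = defaultdict(set)

    def add(self, clip_id, tags):
        """Register one clip under each of its metadata tags."""
        for key, value in tags.items():
            self._postings[(key, value)].add(clip_id)

    def query(self, **tags):
        """Return the clip IDs that carry every requested tag."""
        if not tags:
            return set()
        matches = [self._postings.get((k, v), set()) for k, v in tags.items()]
        return set.intersection(*matches)


index = TagIndex()
index.add("clip-001", {"object_class": "vehicle", "color": "red", "direction": "north"})
index.add("clip-002", {"object_class": "vehicle", "color": "blue", "direction": "north"})
index.add("clip-003", {"object_class": "person", "behavior": "running"})

red_vehicles = index.query(object_class="vehicle", color="red")
```

Because only clip IDs are stored, the index stays small and the raw footage is touched only when a matching clip is opened.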

Linking multiple camera streams creates unified search across a site. A cross-camera workflow lets operators trace a person across camera systems and build a timeline of actions. For example, a staff member can search for someone loitering near a gate and then automatically follow that person across multiple camera views. This cross-camera correlation helps verify alarms and reduces false positives. visionplatform.ai supports these workflows with a VP Agent that exposes VMS data and converts video into human-readable descriptions so users can search without camera IDs. For more on loitering detectors and how they integrate, see our page on loitering detection in airports.
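The timeline step of a cross-camera workflow can be sketched as a filter and a sort. This example assumes an upstream re-identification step has already assigned the same `person_id` to sightings of one person on different cameras; that field, the camera names, and the timestamps are all hypothetical.

```python
from datetime import datetime


def build_timeline(sightings, person_id):
    """Collect one person's sightings from all cameras, ordered by time."""
    own = [s for s in sightings if s["person_id"] == person_id]
    return sorted(own, key=lambda s: s["time"])


# Hypothetical sightings; person_id is assumed to come from a re-identification step.
sightings = [
    {"person_id": "p7", "camera": "lobby", "time": datetime(2024, 3, 1, 9, 5)},
    {"person_id": "p2", "camera": "gate", "time": datetime(2024, 3, 1, 9, 1)},
    {"person_id": "p7", "camera": "gate", "time": datetime(2024, 3, 1, 9, 0)},
    {"person_id": "p7", "camera": "corridor", "time": datetime(2024, 3, 1, 9, 8)},
]

route = [s["camera"] for s in build_timeline(sightings, "p7")]
```

The hard part in practice is the re-identification itself; once identities are linked, the timeline is a straightforward ordering.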

VMS requirements for seamless metadata exchange include open APIs, standardized event schemas, and support for MQTT or webhooks. Systems must also allow bi-directional control so the AI can flag an incident and the VMS can call back to retrieve recorded footage. Integration with access control and case management systems adds context for decision-making. For those deploying at scale, consider compatibility with third-party platforms like Genetec Security Center for central reporting and unified dashboards (see forensic search in airports). Finally, ensure the VMS and AI modules maintain data locality if compliance requires on-prem processing. This preserves privacy and supports alignment with the EU AI Act at sensitive sites.
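To make the idea of a standardized event schema concrete, the sketch below validates a JSON event as it might arrive over a webhook or an MQTT topic. The field list is a hypothetical minimal schema invented for this example; real deployments would follow the event format defined by their VMS or analytics vendor.

```python
import json

# Hypothetical minimal schema; a real deployment would follow the vendor's event format.
REQUIRED_FIELDS = {
    "event_id": str,
    "camera_id": str,
    "timestamp": str,            # ISO 8601 text is assumed here
    "event_type": str,
    "confidence": (int, float),
}


def parse_event(payload):
    """Decode a JSON event and check required fields and their types."""
    event = json.loads(payload)
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in event:
            raise ValueError(f"missing field: {name}")
        if not isinstance(event[name], expected_type):
            raise ValueError(f"wrong type for field: {name}")
    return event


sample = json.dumps({
    "event_id": "evt-1",
    "camera_id": "cam-12",
    "timestamp": "2024-03-01T09:00:00Z",
    "event_type": "perimeter_breach",
    "confidence": 0.91,
})
event = parse_event(sample)
```

Rejecting malformed events at the boundary keeps the search index consistent regardless of which camera or analytics module produced the event.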

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

Optimising AI-powered alerts and Detection to Speed up investigations

AI-powered alerts change how teams react to incidents. Instead of receiving raw alarms, operators get verified, explained notifications that include context and confidence scores. For example, an alert might report a perimeter breach, include video snapshots, and list corroborating access control logs. This makes each notification actionable and reduces the time required to triage. The VP Agent Reasoning from visionplatform.ai verifies alarms by correlating VMS events, metadata, and procedural checks, which helps investigators and lowers false positives.
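The kind of cross-check described above can be sketched as a small rule that compares an alarm with access control records. This is an illustrative assumption about how such verification might work, not a description of VP Agent Reasoning; the function name, field names, thresholds, and status strings are all hypothetical.

```python
from datetime import datetime, timedelta


def verify_alarm(alarm, badge_events, window=timedelta(seconds=30), min_confidence=0.6):
    """Classify an alarm using detector confidence and nearby access control records."""
    if alarm["confidence"] < min_confidence:
        return "dismissed: low confidence"
    for badge in badge_events:
        same_door = badge["door"] == alarm["door"]
        close_in_time = abs(badge["time"] - alarm["time"]) <= window
        if same_door and close_in_time and badge["granted"]:
            return "explained: authorized entry"
    return "verified: no matching access record"


# Hypothetical access control log and alarms.
badges = [
    {"door": "north-gate", "time": datetime(2024, 3, 1, 22, 14, 0), "granted": True},
]
alarm_a = {"door": "north-gate", "time": datetime(2024, 3, 1, 22, 14, 10), "confidence": 0.88}
alarm_b = {"door": "south-gate", "time": datetime(2024, 3, 1, 22, 14, 10), "confidence": 0.88}

status_a = verify_alarm(alarm_a, badges)
status_b = verify_alarm(alarm_b, badges)
```

Here the first alarm is explained by a granted badge swipe at the same door, while the second has no corroborating record and would be escalated.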

Detection rules include loiter, perimeter breach, intrusion, and object-left-behind scenarios. When a system detects a person loitering near a secure entrance, it triggers a notification and stores the event as searchable metadata. That event becomes part of the forensic search index so users can later query for similar incidents. For license plate workflows, a detected license plate can be compared against watch lists so that vehicles of interest are flagged instantly. Studies show that multi-camera intelligent video surveillance (IVS) systems enhance event detection and support smarter city infrastructure (networked camera benefits).

In terms of measurable impact, automated alerts can cut review times by orders of magnitude. For example, replacing manual review of hours of video with event-based clips can reduce investigation time from hours to minutes. Also, AI that validates an alarm by cross-checking additional sensors reduces false positives and therefore reduces unnecessary dispatches. In practice, teams report faster decisions and fewer escalations when AI agents provide suggested actions and pre-filled case data. A clear workflow that links alerts to case management and evidence export makes the control room more efficient and allows operators to focus on complex incidents rather than routine validation.

Leveraging Smart video for Scalable Forensic search and Raw video review

Smart video features filter raw video into event-specific clips and make archives searchable. Instead of scanning hours of footage, an investigator can pull relevant clips filtered by behavior, object class, or license plate. The forensic search tool then provides frame-level access and exportable evidence. This workflow turns raw video into actionable intelligence and keeps the raw footage available for legal processes. For massive archives, scalable architectures index metadata separately from video blobs to reduce storage and speed queries.

Scalable storage strategies include tiered retention, on-prem GPU acceleration, and indexed object stores. For example, hot events and their associated frames can remain on high-speed storage, while older raw footage is archived. When an investigator queries the archive, the system streams only relevant frames, not entire files, which cuts retrieval times and bandwidth. This approach makes enterprise video solutions practical for sites with thousands of cameras and large amounts of recorded data. For high-security environments like an airport, a combination of local AI inference and robust retention policies supports compliance and rapid evidence retrieval (license plate and ANPR in airports).
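A tiered retention policy can be expressed as a small decision function. The sketch below assumes three tiers and two age windows; the tier names, the 7-day and 30-day windows, and the `storage_tier` function are illustrative assumptions, since actual retention periods depend on site policy and regulation.

```python
from datetime import timedelta


def storage_tier(age, has_event,
                 hot_window=timedelta(days=7),
                 warm_window=timedelta(days=30)):
    """Pick a storage tier for a recording from its age and whether it holds an event."""
    if has_event and age <= hot_window:
        return "hot"        # recent event footage stays on high-speed storage
    if age <= warm_window:
        return "warm"       # recent footage without events, or slightly older events
    return "archive"        # older raw footage moves to low-cost storage


recent_event = storage_tier(timedelta(days=2), has_event=True)
recent_plain = storage_tier(timedelta(days=2), has_event=False)
old_footage = storage_tier(timedelta(days=90), has_event=True)
```

Keeping the policy in one function makes it easy to audit and to adjust when retention requirements change.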

Forensic search requires tools that support natural language queries, timelines, and multi-source correlation. Using a Vision Language Model, operators can ask for “all alarms where no actual intrusion occurred” and receive exportable clips, timestamps, and contextual notes. This AI-powered search capability reduces the dependency on manual tagging and speeds up legal review. Additionally, integrating case management automates report creation and chain-of-custody records so evidence remains admissible. As a result, teams get accurate results faster, which improves outcomes in both security and operational investigations.

Enhancing Search capabilities with License plate recognition and Loiter detection

License plate recognition workflows are central to vehicle tracking and watch lists. First, an ANPR engine reads the plate from a moving vehicle. Then the system compares the plate to lists and raises an alert for vehicles of interest. This alert links to timestamps and camera feeds so operators can instantly find prior movements and export evidence. Combining license plate recognition with cross-camera tracking empowers investigators to trace routes across a campus or city. For airport deployments, ANPR supports curb management and security screening, and it pairs naturally with other camera analytics to create a fuller picture.
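The watch list comparison can be sketched as a normalization step followed by a set lookup. Normalizing first matters because plate reads often differ in case, spacing, or separators. The helper names and the sample plates are hypothetical, and this sketch does not handle OCR confusions such as `0` versus `O`.

```python
def normalize_plate(text):
    """Uppercase the plate text and drop spaces, hyphens, and other separators."""
    return "".join(ch for ch in text.upper() if ch.isalnum())


def is_vehicle_of_interest(plate_read, watch_list):
    """Return True if the normalized read matches any normalized watch list entry."""
    normalized_list = {normalize_plate(p) for p in watch_list}
    return normalize_plate(plate_read) in normalized_list


# Hypothetical watch list and plate reads.
watch_list = ["AB-123-CD", "XY 987 ZT"]
match = is_vehicle_of_interest("ab123cd", watch_list)
no_match = is_vehicle_of_interest("AB124CD", watch_list)
```

A real deployment would attach the matching read's timestamp and camera to the alert so that operators can jump straight to the footage.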

Loiter detection algorithms flag abnormal pedestrian behavior by monitoring time-on-scene, proximity to restricted areas, and motion patterns. When someone stays near sensitive infrastructure, the system creates an event that becomes searchable. In these cases, the search transforms from a simple keyword lookup into a behavior-driven timeline. Tools that support both loiter and ANPR can correlate a suspicious person with a vehicle, delivering rapid incident reconstruction and clear investigative leads.
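The time-on-scene part of loiter detection can be sketched as a dwell time measurement over one tracked person's observations. This is a deliberately simple rule, assuming a tracker already reports whether the person is inside a restricted zone at each timestamp; the 60-second threshold and function names are illustrative assumptions, and real detectors also weigh motion patterns.

```python
def longest_dwell_seconds(observations):
    """Longest continuous time spent in the zone.

    observations: list of (timestamp_seconds, in_zone) pairs sorted by time.
    """
    longest = 0.0
    start = None
    for timestamp, in_zone in observations:
        if in_zone:
            if start is None:
                start = timestamp      # person just entered the zone
            longest = max(longest, timestamp - start)
        else:
            start = None               # leaving the zone resets the timer
    return longest


def is_loitering(observations, threshold_seconds=60.0):
    """Flag a track whose continuous dwell time reaches the threshold."""
    return longest_dwell_seconds(observations) >= threshold_seconds


# Hypothetical tracks: one person lingers, the other passes through twice.
lingering = [(0, True), (30, True), (70, True), (80, False), (90, True)]
passing = [(0, True), (20, True), (25, False), (30, True), (50, True)]

lingering_flag = is_loitering(lingering)
passing_flag = is_loitering(passing)
```

Resetting the timer when the person leaves the zone keeps repeated short visits from being counted as one long stay; whether that is desirable depends on the site.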

When combined, ANPR and loiter detection produce powerful cross-event correlation. For instance, if loitering is detected near a loading dock and a license plate sighting matches a vehicle of interest, the system creates a linked case with relevant clips, metadata, and suggested next steps. Those exportable results reduce human review and help investigators focus on high-probability incidents. In practice, deploying these features across multiple camera locations shortens time to resolution and improves situational awareness. To learn about loitering-specific detection at gateway sites, see our loitering detection in airports resource.
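The cross-event correlation described above can be sketched as a join on zone and time. The example pairs each loitering event with watch list plate hits in the same zone within a time window; the 10-minute window, the field names, and the `link_events` function are assumptions chosen for illustration.

```python
from datetime import datetime, timedelta


def link_events(loiter_events, plate_hits, window=timedelta(minutes=10)):
    """Pair loitering events with plate hits in the same zone within the time window."""
    cases = []
    for loiter in loiter_events:
        for hit in plate_hits:
            same_zone = hit["zone"] == loiter["zone"]
            close_in_time = abs(hit["time"] - loiter["time"]) <= window
            if same_zone and close_in_time:
                cases.append({
                    "zone": loiter["zone"],
                    "loiter_time": loiter["time"],
                    "plate": hit["plate"],
                })
    return cases


# Hypothetical events.
loiter_events = [
    {"zone": "loading-dock", "time": datetime(2024, 3, 1, 2, 15)},
    {"zone": "main-gate", "time": datetime(2024, 3, 1, 2, 40)},
]
plate_hits = [
    {"zone": "loading-dock", "time": datetime(2024, 3, 1, 2, 20), "plate": "AB123CD"},
    {"zone": "main-gate", "time": datetime(2024, 3, 1, 4, 0), "plate": "XY987ZT"},
]

cases = link_events(loiter_events, plate_hits)
```

Only the loading dock pair falls inside the window, so a single linked case is produced; the main gate hit is too late to be associated.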

Reducing Bandwidth and Helping security teams Using AI analytics

Bandwidth and storage costs rise quickly with raw footage. AI analytics help by processing video at the edge and sending only metadata or event clips over the network. For example, an edge node can run AI object detection and stream only alerts and low-bitrate thumbnails to the control room. This preserves bandwidth while keeping operators instantly informed. Running AI at the edge reduces the amount of video data leaving a site, which supports privacy and local compliance requirements. Visionplatform.ai emphasizes on-prem processing to keep models and video inside the environment and to avoid unnecessary cloud egress.

Techniques for bandwidth-efficient streaming include adaptive frame rates, selective frame extraction for events, and compact metadata sheets for each incident. Additionally, VMS and AI can negotiate to keep low-priority camera feeds at reduced bitrates until an event triggers higher-quality streaming. These methods cut costs and allow thousands of cameras to remain manageable without degrading investigative capability. As a result, security teams receive actionable alerts and can instantly find critical footage without draining the network.
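The effect of event-triggered bitrates can be estimated with simple arithmetic. The sketch below assumes two bitrate levels, 256 kbps for idle feeds and 4096 kbps for feeds with an active event; these figures, the camera counts, and the function names are illustrative assumptions, not measurements.

```python
def select_bitrate_kbps(has_active_event, low_kbps=256, high_kbps=4096):
    """Stream at high quality only while an event is active on the camera."""
    return high_kbps if has_active_event else low_kbps


def total_bandwidth_kbps(camera_states):
    """Sum the selected bitrate over all cameras.

    camera_states: iterable of booleans, True if the camera has an active event.
    """
    return sum(select_bitrate_kbps(active) for active in camera_states)


# Hypothetical site: 100 cameras, 2 of them with an active event.
states = [False] * 98 + [True] * 2
adaptive_total = total_bandwidth_kbps(states)        # 98 * 256 + 2 * 4096
always_high_total = total_bandwidth_kbps([True] * 100)
```

Under these assumed figures the adaptive scheme uses 33,280 kbps against 409,600 kbps for constant high-quality streaming, which is less than a tenth of the load.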

Centralised dashboards aggregate alerts, metadata, and case statuses so operators get operational insights in one view. These dashboards support guided workflows and reduce cognitive load by recommending actions or pre-filling reports. By combining AI-powered alerts with case management and procedural automation, teams handle more events with the same staff. Ultimately, smart use of AI analytics and edge processing makes surveillance systems scalable, cost-effective, and more useful for both safety and security.

FAQ

What is event-based CCTV search?

Event-based CCTV search indexes video by detected events rather than by time. This lets operators quickly retrieve clips related to specific behaviors, objects, or incidents without watching hours of recorded footage.

How does metadata improve search?

Metadata records attributes like time, location, object type, and behavior. As a result, searches return targeted results and investigators can query the archive by descriptive terms or search criteria instead of camera IDs.

Can AI reduce false positives?

Yes. AI systems that correlate multiple sources and apply contextual checks lower false positives. Systems that verify alerts against access control or multiple camera perspectives provide more accurate results and reduce wasted responses.

What role does license plate recognition play?

License plate recognition helps track vehicles and match them to watch lists. It supports vehicle forensics, curb management, and rapid identification of vehicles of interest across camera streams.

Do I need new cameras to use AI search?

Not always. Many deployments leverage existing cameras and add AI at the edge or in the VMS. However, higher-resolution or IR-capable cameras may improve accuracy for certain detection tasks.

How does forensic search work?

Forensic search indexes metadata and provides frame-level access to relevant clips. Investigators can query by behaviors or descriptions and export clips and timestamps for evidence handling.

Can AI analytics work on-prem for compliance?

Yes. On-prem AI keeps video and models inside your environment and reduces cloud exposure. This supports compliance and auditability, and it aligns with stricter data protection regimes.

How much bandwidth can AI save?

Significant amounts. By streaming only events, thumbnails, or metadata instead of full streams, systems cut bandwidth and storage costs. Edge processing further reduces network load while preserving access to necessary footage.

What is the benefit of cross-camera search?

Cross-camera search traces people or vehicles across multiple camera views, enabling faster incident reconstruction. This capability transforms video into an operational timeline rather than isolated clips.

How quickly can teams find relevant footage?

With event-based indexing and AI-powered search, teams can find relevant footage within seconds to minutes instead of spending hours on manual review. This speed improves outcomes and supports faster decision-making.