AI-driven video analytics to accelerate forensic investigations in video surveillance

AI-driven video analytics now form the backbone of modern forensic work. First, motion detection and face recognition algorithms reduce the time analysts spend manually reviewing footage. For example, motion filters and targeted tracking can cut manual review time by up to 50% when combined with smart thumbnail and indexing workflows (motion analysis study). This speed helps investigators shorten case timelines and close cases faster.
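Basic frame differencing illustrates the motion filtering mentioned above. This is a minimal sketch, not the method used by any particular product. It assumes decoded grayscale frames held as nested lists. The thresholds `pixel_delta` and `min_changed_fraction` are hypothetical values chosen for illustration.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame as rows of 0-255 intensities


def changed_fraction(prev: Frame, curr: Frame, pixel_delta: int = 25) -> float:
    """Fraction of pixels whose intensity changed by more than pixel_delta."""
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_delta:
                changed += 1
    return changed / total if total else 0.0


def motion_frames(frames: List[Frame], min_changed_fraction: float = 0.02,
                  pixel_delta: int = 25) -> List[int]:
    """Indices of frames that differ enough from their predecessor.

    Only these frames are queued for human review; static stretches are skipped.
    """
    return [
        i for i in range(1, len(frames))
        if changed_fraction(frames[i - 1], frames[i], pixel_delta) >= min_changed_fraction
    ]


still = [[10] * 4 for _ in range(4)]
moved = [row[:] for row in still]
moved[1][1] = 200  # one of 16 pixels changes strongly
print(motion_frames([still, still, moved, moved]))  # [2]
```

Production systems would work on decoded video with an image library and add background modelling to suppress noise such as lighting changes. The principle of discarding unchanged frames stays the same.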

Second, AI reduces noise and highlights likely events for the analyst. An AI-powered forensic pipeline flags events, extracts a thumbnail, and links related video evidence across camera systems. As a result, search lets teams jump from hours of video to relevant footage within seconds, so control room workload drops and time to action shortens. Our VP Agent Search converts recorded video into human-readable descriptions, which lets operators search using plain-language queries such as "red truck entering dock area yesterday evening." This AI forensic approach turns a powerful tool into an everyday capability for investigation teams.
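The flag-then-thumbnail step can be sketched as follows. The sketch assumes an upstream detector has already produced one confidence score per frame. The `Event` type, the `flag_events` function, and the 0.5 threshold are hypothetical. The sketch only shows how contiguous high-score frames become one event with a representative thumbnail.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    camera_id: str
    start_frame: int
    end_frame: int        # inclusive
    thumbnail_frame: int  # highest-scoring frame, used as the event's thumbnail


def flag_events(camera_id: str, scores: List[float], threshold: float = 0.5) -> List[Event]:
    """Group consecutive frames whose detector score >= threshold into events
    and pick the most confident frame of each event as its thumbnail."""
    events: List[Event] = []
    start: Optional[int] = None
    for i, s in enumerate(scores + [float("-inf")]):  # sentinel closes a trailing run
        if s >= threshold and start is None:
            start = i
        elif s < threshold and start is not None:
            best = max(range(start, i), key=lambda j: scores[j])
            events.append(Event(camera_id, start, i - 1, best))
            start = None
    return events


for ev in flag_events("dock-cam-1", [0.1, 0.6, 0.9, 0.7, 0.2, 0.8]):
    print(ev)
```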

Third, deep learning enables pattern matching across time and space. Deep learning networks learn features that generalise across environments and camera angles, so the system can match faces or object features across multiple camera feeds. In practice, linking an object or a person across views improves clearance rates, because investigators find corroborating video evidence faster (CCTV value study). Face recognition also narrows search results, so analysts verify leads instead of sifting through hours of footage.
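Cross-camera matching is commonly done by comparing feature vectors (embeddings) produced by a deep learning model. The sketch below assumes such embeddings already exist and uses brute-force cosine similarity. The vectors, the `min_similarity` threshold, and the function names are illustrative assumptions. Large archives would typically use an approximate nearest-neighbour index instead of a full scan.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def match_across_cameras(query: Sequence[float],
                         gallery: Dict[str, List[Sequence[float]]],
                         min_similarity: float = 0.8) -> List[Tuple[str, int, float]]:
    """Return (camera_id, detection_index, similarity) for every gallery
    embedding at least min_similarity to the query, best match first."""
    hits = [
        (cam, idx, cosine(query, emb))
        for cam, embeddings in gallery.items()
        for idx, emb in enumerate(embeddings)
    ]
    return sorted((h for h in hits if h[2] >= min_similarity),
                  key=lambda h: h[2], reverse=True)


gallery = {"cam-A": [[1.0, 0.0], [0.0, 1.0]], "cam-B": [[0.9, 0.1]]}
print(match_across_cameras([1.0, 0.0], gallery))
```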

Finally, AI agents combine video analytics with VMS events to provide context. Forensic video analysis benefits when video management and AI outputs are unified. For instance, an alarm triggers a review, and AI-powered search pre-populates relevant clips, timestamps, and metadata for faster retrieval and playback. This process reduces manual steps and makes the forensic workflow scalable.
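Pre-populating clips for an alarm amounts to a time-window query over the cameras that cover the alarm's zone. Here is a minimal sketch, assuming a hypothetical zone-to-camera mapping and a two-minute window on either side of the alarm:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Clip:
    camera_id: str
    start: datetime
    end: datetime


def clips_for_alarm(alarm_time: datetime, alarm_zone: str,
                    zone_cameras: Dict[str, List[str]], clips: List[Clip],
                    window: timedelta = timedelta(minutes=2)) -> List[Clip]:
    """Select clips from the alarm zone's cameras that overlap
    [alarm_time - window, alarm_time + window], ordered by start time."""
    lo, hi = alarm_time - window, alarm_time + window
    cams = set(zone_cameras.get(alarm_zone, []))
    selected = [c for c in clips
                if c.camera_id in cams and c.start <= hi and c.end >= lo]
    return sorted(selected, key=lambda c: c.start)
```

Overlap is tested rather than containment, so a long recording segment that spans the alarm is still included.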

Using metadata and search filters to speed up investigations and refine search results

Metadata extraction is essential to reduce search scope quickly. Forensic teams extract timestamps, event logs, and object tags from proprietary CCTV formats, then use search filters to pinpoint clips by date, time, camera ID, and sensor data. This workflow helps investigators speed up investigations and find video evidence with far less manual effort. For example, structured metadata lets a search return candidate clips in minutes instead of requiring hours of playback, and research into automated recovery shows that handling proprietary formats is now a routine part of forensic workflows (automated recovery study).

Next, search filters narrow results further using motion thresholds, object type, and scene metadata. A typical targeted search sequence begins with date and time, then adds object tags such as "vehicle" and a color filter. Because the system excludes irrelevant idle periods, the search scope can shrink by over 70% in many cases. The platform then surfaces a set of thumbnails tied to events, which accelerates triage: investigators scan the images to decide which clips require playback.
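The filter sequence described above can be sketched as successive list filters over an in-memory index. The `IndexedClip` fields are assumptions for illustration. For simplicity, colors are stored per clip rather than per detected object, a distinction a real index would need to make.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Set


@dataclass
class IndexedClip:
    clip_id: str
    camera_id: str
    start: datetime
    motion: bool                                     # any motion above threshold
    objects: Set[str] = field(default_factory=set)  # e.g. {"vehicle", "person"}
    colors: Set[str] = field(default_factory=set)   # dominant colors of detected objects


def targeted_search(clips: List[IndexedClip], since: datetime, until: datetime,
                    object_tag: Optional[str] = None,
                    color: Optional[str] = None) -> List[IndexedClip]:
    """Narrow the candidate set step by step: time window, idle periods,
    object tag, then color."""
    result = [c for c in clips if since <= c.start < until]
    result = [c for c in result if c.motion]  # drop idle periods
    if object_tag is not None:
        result = [c for c in result if object_tag in c.objects]
    if color is not None:
        result = [c for c in result if color in c.colors]
    return result
```

Applying the cheap time-window filter first keeps the later, more selective filters working on a small set.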

Metadata from access control and auxiliary sensors also enhances filtering. By correlating access control logs with camera timestamps, investigators exclude false leads quickly. This is especially useful at sites with many cameras, where naive timelines generate overwhelming results. Visionplatform.ai's VP Agent extracts natural language descriptions and generates metadata server-side, which keeps video data on-prem and compliant with policies, reduces cloud risks, and supports audit-ready retrieval for formal forensic video analysis.
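One way to use access control data to exclude false leads is a time-window join between person detections at a door camera and badge swipes at the same door. This sketch assumes a hypothetical camera-to-door mapping and a 10-second tolerance. Detections explained by a swipe are treated as likely legitimate. The rest are returned as leads.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Sighting = Tuple[str, datetime]  # (camera_id or door_id, timestamp)


def unexplained_entries(detections: List[Sighting], badge_swipes: List[Sighting],
                        camera_to_door: Dict[str, str],
                        tolerance: timedelta = timedelta(seconds=10)) -> List[Sighting]:
    """Return person detections with no badge swipe at the same door within
    +/- tolerance. Explained detections are dropped; the rest are leads."""
    swipes_by_door: Dict[str, List[datetime]] = {}
    for door, ts in badge_swipes:
        swipes_by_door.setdefault(door, []).append(ts)
    leads = []
    for cam, ts in detections:
        door = camera_to_door.get(cam)
        swipes = swipes_by_door.get(door, []) if door is not None else []
        if not any(abs(s - ts) <= tolerance for s in swipes):
            leads.append((cam, ts))
    return leads
```

Cameras with no door mapping are kept as leads rather than silently discarded, which errs on the side of review.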

Finally, high-quality metadata enables more advanced forensic search, such as chronological stitching of events across cameras. In practice, a workflow that combines camera IDs, motion tags, and object attributes speeds retrieval and helps investigation teams move from discovery to evidence collection with fewer steps.
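Chronological stitching across cameras can be expressed as a k-way merge of per-camera event lists, each already sorted by time. This sketch uses Python's `heapq.merge`. The event tuples and the substring-based `attribute` filter are simplifying assumptions that stand in for structured object attributes.

```python
import heapq
from datetime import datetime
from typing import Dict, List, Optional, Tuple

TimelineEntry = Tuple[datetime, str, str]  # (timestamp, camera_id, description)


def stitch_timeline(per_camera: Dict[str, List[Tuple[datetime, str]]],
                    attribute: Optional[str] = None) -> List[TimelineEntry]:
    """Merge per-camera event lists (each already sorted by time) into one
    chronological timeline. If attribute is given, keep only events whose
    description mentions it (e.g. "red car")."""
    streams = [
        [(ts, cam, desc) for ts, desc in events
         if attribute is None or attribute in desc]
        for cam, events in per_camera.items()
    ]
    return list(heapq.merge(*streams, key=lambda e: e[0]))
```

Because each input is already sorted, the merge runs in O(n log k) for n events across k cameras, without re-sorting the full set.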

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

Advanced forensic search integration with camera systems and partner integrations to unify video evidence

Seamless integration across camera manufacturers, VMS, and third-party systems unifies video evidence for cross-site investigations. First, APIs and plugins connect camera systems and video management platforms. For instance, Genetec and Axis Communications often expose event streams and metadata via standardized protocols, which let forensic tools ingest and query recorded video. A unified platform improves search capabilities and removes the need to open multiple consoles.

Second, partner integrations enable third-party video and sensor data to join the repository. When teams unify VMS events and third-party video, the system can correlate alarms with video to confirm an incident. This reduces false positives and gives operators richer situational context. Visionplatform.ai emphasises seamless integration with VMS through lightweight server-side agents that expose data to AI agents while keeping video on-prem. The result is a searchable, auditable archive for legal processes.

Third, a unified repository simplifies cross-site investigations. Investigators can query multiple sites from one interface, and get matched thumbnails, timestamps, and related access control logs. Forensic search across sites speeds locating relevant footage, because the platform normalises metadata and exposes consistent search fields. Also, integration with Genetec Security Center or other video management systems allows queries to run across distributed archives without manual export.
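Normalising metadata means mapping each source's field names and time formats onto one common schema before indexing. In the sketch below, the source names and field names in `FIELD_MAPS` are invented for illustration. They do not reflect the actual Genetec, Axis, or other vendor schemas.

```python
from datetime import datetime, timezone
from typing import Any, Dict, List

# Hypothetical per-source field mappings. Illustrative only: these are NOT the
# real schemas of Genetec, Axis, or any other vendor.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "source_a": {"cam": "camera_id", "ts_epoch": "timestamp", "label": "object_type"},
    "source_b": {"deviceId": "camera_id", "time": "timestamp", "class": "object_type"},
}


def normalise(source: str, record: Dict[str, Any], site: str) -> Dict[str, Any]:
    """Rename source-specific keys to the common schema and convert timestamps
    to timezone-aware UTC. String timestamps are assumed to be ISO 8601 with
    an explicit UTC offset."""
    out: Dict[str, Any] = {"site": site}
    for src_key, common_key in FIELD_MAPS[source].items():
        if src_key in record:
            out[common_key] = record[src_key]
    ts = out.get("timestamp")
    if isinstance(ts, (int, float)):
        out["timestamp"] = datetime.fromtimestamp(ts, tz=timezone.utc)
    elif isinstance(ts, str):
        out["timestamp"] = datetime.fromisoformat(ts).astimezone(timezone.utc)
    return out


def query(records: List[Dict[str, Any]], object_type: str) -> List[Dict[str, Any]]:
    """One query over all sites, ordered by normalised timestamp."""
    matches = [r for r in records if r.get("object_type") == object_type]
    return sorted(matches, key=lambda r: r["timestamp"])
```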

Finally, using a unified platform reduces the need for extra hardware. With proper APIs and partner integrations, organisations avoid complex separate ingestion appliances. Instead, they leverage existing VMS integrations to generate searchable indexes and to support workflows like generating metadata, exporting clips, and preserving chain of custody for video evidence.

Scalable forensic video search capabilities and analytics for Genetec and other VMS

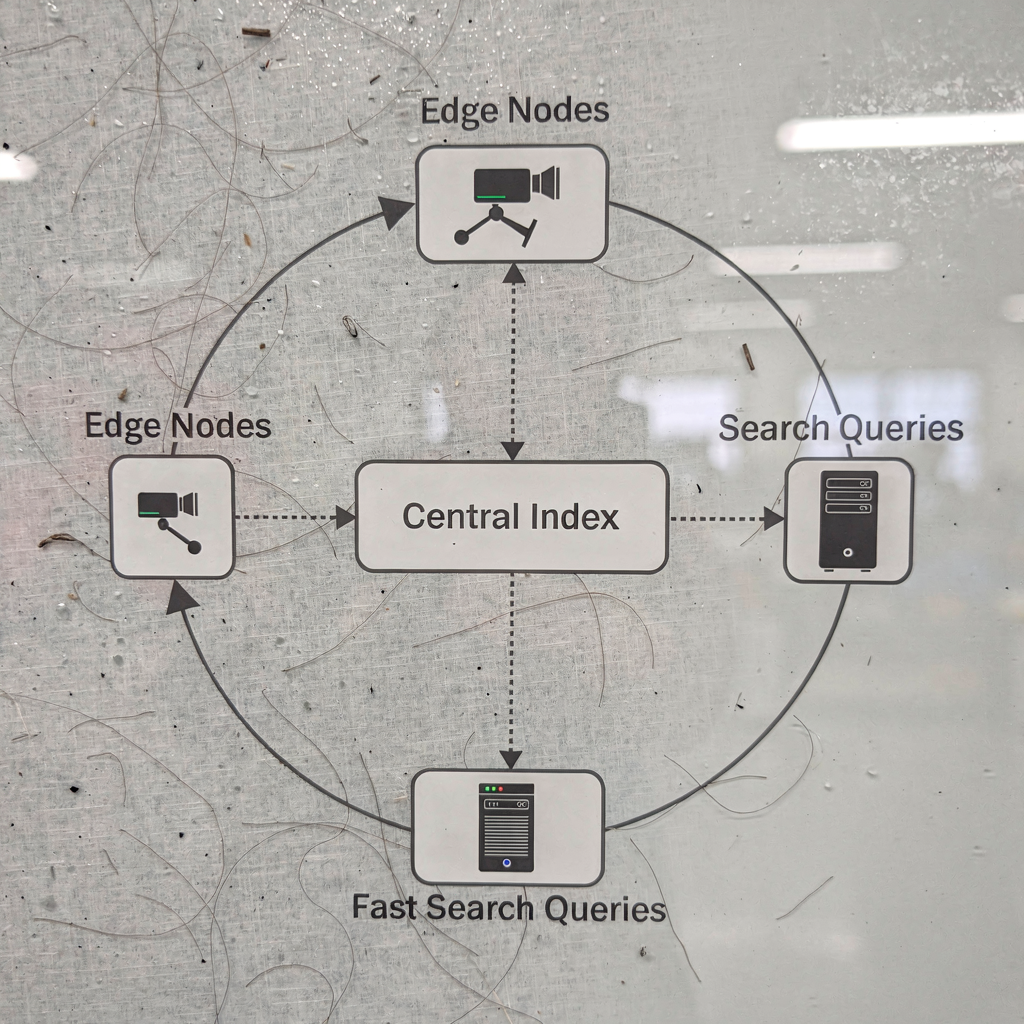

Scalability matters when archives reach petabyte scale. First, scalable forensic video search relies on distributed indexing to keep queries fast. For example, sharded indexes and inverted indexes let search engines return thumbnails and timestamps within seconds, even on very large datasets. In multi-site deployments, query performance benefits from edge indexing and federated queries that reduce central bottlenecks. As a result, teams can search across many sites at speeds similar to single-site queries.
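The sharding and federated-query idea can be sketched with in-memory inverted indexes (tag to postings) partitioned by camera ID. A scatter-gather search queries every shard in parallel and merges the results. The classes here are hypothetical. In a real deployment each shard would live on a separate node or site, and the thread pool stands in for network calls.

```python
import hashlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import DefaultDict, List, Set, Tuple

Posting = Tuple[str, int]  # (camera_id, timestamp in seconds)


class Shard:
    """In-memory inverted index: tag -> set of postings."""

    def __init__(self) -> None:
        self.postings: DefaultDict[str, Set[Posting]] = defaultdict(set)

    def add(self, camera_id: str, ts: int, tags: List[str]) -> None:
        for tag in tags:
            self.postings[tag].add((camera_id, ts))

    def search(self, tag: str) -> Set[Posting]:
        return set(self.postings.get(tag, set()))


class ShardedIndex:
    def __init__(self, num_shards: int) -> None:
        self.shards = [Shard() for _ in range(num_shards)]

    def _shard_for(self, camera_id: str) -> Shard:
        # hashlib gives a hash that is stable across processes (the built-in
        # hash() of a str is randomised per process), so a camera's postings
        # always land on the same shard.
        digest = hashlib.sha1(camera_id.encode("utf-8")).hexdigest()
        return self.shards[int(digest, 16) % len(self.shards)]

    def add(self, camera_id: str, ts: int, tags: List[str]) -> None:
        self._shard_for(camera_id).add(camera_id, ts, tags)

    def search(self, tag: str) -> List[Posting]:
        # Scatter: query all shards in parallel. Gather: union and sort by time.
        with ThreadPoolExecutor(max_workers=len(self.shards)) as pool:
            partials = list(pool.map(lambda shard: shard.search(tag), self.shards))
        merged: Set[Posting] = set().union(*partials)
        return sorted(merged, key=lambda p: (p[1], p[0]))
```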

Second, analytics help to manage retrieval and playback load. When a query returns many hits, the system prioritises results by confidence score, relevance, and proximity in time. This targeted search reduces playback demands on storage servers and on operator attention. Visionplatform.ai supports scalable deployments from a handful of streams to thousands, and integrates with video management systems to distribute indexing tasks.
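A weighted score can express prioritising hits by confidence, relevance, and proximity in time. The weights, the `time_scale_s` decay constant, and the `Hit` fields below are assumptions for illustration, not tuned values. Temporal proximity here decays as 1 / (1 + |Δt| / time_scale_s).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Hit:
    clip_id: str
    confidence: float  # detector confidence, 0..1
    relevance: float   # query-match score, 0..1
    ts: float          # seconds since epoch


def rank_hits(hits: List[Hit], incident_ts: float, w_conf: float = 0.4,
              w_rel: float = 0.4, w_time: float = 0.2,
              time_scale_s: float = 600.0, top_k: int = 20) -> List[Hit]:
    """Order hits by a weighted sum of confidence, relevance, and temporal
    proximity to the incident, and keep only the top_k for playback."""
    def score(h: Hit) -> float:
        proximity = 1.0 / (1.0 + abs(h.ts - incident_ts) / time_scale_s)
        return w_conf * h.confidence + w_rel * h.relevance + w_time * proximity

    return sorted(hits, key=score, reverse=True)[:top_k]
```

Capping the list at `top_k` is what limits playback load: lower-ranked clips stay available but are not fetched from storage until requested.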

Third, consider a municipal case: an urban surveillance network processes about 1 PB of footage per month. Using distributed indexing and server-side agents, the system shards metadata across multiple nodes. Consequently, investigators can run an advanced search that returns candidate clips from dozens of cameras in under a minute. This approach preserves the ability to search for evidence at scale without adding extra hardware or moving video offsite.

Finally, system design affects query latency. Single-site deployments often show lower absolute latency, while multi-site queries add network overhead. To mitigate this, caching and prefetching of popular timelines can provide consistent interactive performance. In short, distributed architectures and careful analytics design make forensic search both fast and scalable for Genetec and other VMS platforms (HIKVISION log research).
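A small least-recently-used (LRU) cache in front of a slow multi-site query can illustrate caching and prefetching of popular timelines. The `fetch` callback and the key format are hypothetical. `prefetch` warms entries ahead of demand, for example the hour after the one an operator is reviewing.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable, Iterable


class TimelineCache:
    """LRU cache for timeline query results, e.g. keyed by (site, camera, hour)."""

    def __init__(self, capacity: int, fetch: Callable[[Hashable], Any]) -> None:
        self.capacity = capacity
        self.fetch = fetch  # slow path, such as a federated multi-site query
        self._data: "OrderedDict[Hashable, Any]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def _put(self, key: Hashable, value: Any) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def get(self, key: Hashable) -> Any:
        if key in self._data:
            self._data.move_to_end(key)
            self.hits += 1
            return self._data[key]
        self.misses += 1
        value = self.fetch(key)
        self._put(key, value)
        return value

    def prefetch(self, keys: Iterable[Hashable]) -> None:
        """Warm the cache ahead of demand without counting hits or misses."""
        for key in keys:
            if key not in self._data:
                self._put(key, self.fetch(key))
```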

Using video analytics and license plate recognition to enhance surveillance and investigation

License plate recognition plays a central role in many investigations. AI-based license plate recognition systems deliver high read rates and low latency. In real-world deployments, modern models achieve quick reads with acceptable false-positive rates when cameras are correctly positioned. Also, linking a license plate across cameras creates a movement trail, which investigators use to reconstruct routes and to identify stopping points.

Second, combining license plate recognition with object tracking and vehicle classification enriches the dataset. Knowing vehicle type alongside a plate helps investigators prioritise leads. For example, a query can filter by license plate plus vehicle type to reduce results to the most likely matches. This targeted search is invaluable in traffic enforcement, parking control, perimeter defence, and loss prevention.

Third, cross-camera linking is vital. When an LPR system reads a plate at one gate and later at another, the system links those events across camera feeds. As a result, investigation teams can reconstruct timelines with confidence. This capability supports traffic investigations and criminal enquiries where vehicle movement is a key element.
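Linking plate reads into a movement trail reduces to grouping reads by a normalised plate string and ordering each group by time. This sketch assumes each read arrives with a camera ID, a timestamp, and an OCR confidence. The normalisation rule and the 0.8 confidence cut-off are illustrative assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PlateRead:
    plate: str
    camera_id: str
    ts: float          # seconds since epoch
    confidence: float  # OCR confidence, 0..1


def normalise_plate(text: str) -> str:
    # Uppercase and drop spaces/hyphens so "ab-12 cd" and "AB12CD" match.
    return "".join(ch for ch in text.upper() if ch.isalnum())


def movement_trails(reads: List[PlateRead],
                    min_confidence: float = 0.8) -> Dict[str, List[PlateRead]]:
    """Group confident reads by normalised plate and sort each group by time,
    giving a per-vehicle sequence of camera sightings."""
    trails: Dict[str, List[PlateRead]] = defaultdict(list)
    for r in reads:
        if r.confidence >= min_confidence:
            trails[normalise_plate(r.plate)].append(r)
    return {plate: sorted(rs, key=lambda r: r.ts) for plate, rs in trails.items()}
```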

Also, integrating LPR with VMS and access control systems provides operational context. For instance, correlating a plate read with a gate open event shows access legitimacy. Visionplatform.ai integrates ANPR/LPR analytics with VMS data and can surface those correlations to the operator. Doing so provides rapid verification, reduces false alarms, and supports safety and security objectives.

Finally, automated LPR can trigger downstream workflows, such as automated alerts or containment measures. When paired with granular search filters and AI agents, license plate recognition becomes part of an AI-powered search pipeline that helps investigators locate relevant footage and close cases faster.

Granular search filters and advanced search for efficient forensic search results with AI

Granular search filters let investigators refine queries by object type, motion vector, and color. For example, a search profile might combine “person,” motion direction towards an exit, and a red jacket color tag. Advanced search modules then score results by relevance and by confidence. This helps analysts to prioritise clips for playback. Also, integrating face and license plate recognition outputs into the same query reduces the need for separate tool chains.
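A filter such as "person moving towards the exit in a red jacket" can be sketched by comparing each track's displacement with the direction to an exit point in image coordinates. The `Track` fields, the exit coordinates, and the scoring (detector confidence multiplied by heading alignment) are assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates


@dataclass
class Track:
    track_id: str
    object_type: str
    color: str
    start: Point
    end: Point
    confidence: float


def heading_towards(track: Track, target: Point) -> float:
    """Cosine between the track's displacement and the direction from its
    start point to target: 1.0 means moving straight towards it."""
    dx, dy = track.end[0] - track.start[0], track.end[1] - track.start[1]
    tx, ty = target[0] - track.start[0], target[1] - track.start[1]
    n1, n2 = math.hypot(dx, dy), math.hypot(tx, ty)
    if n1 == 0 or n2 == 0:
        return 0.0
    return (dx * tx + dy * ty) / (n1 * n2)


def granular_search(tracks: List[Track], object_type: str, color: str,
                    exit_point: Point, min_heading: float = 0.7) -> List[Tuple[str, float]]:
    """Keep tracks of the requested type and color that move towards
    exit_point, scored by confidence * heading alignment."""
    results = []
    for t in tracks:
        if t.object_type != object_type or t.color != color:
            continue
        heading = heading_towards(t, exit_point)
        if heading >= min_heading:
            results.append((t.track_id, t.confidence * heading))
    return sorted(results, key=lambda r: r[1], reverse=True)
```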

Next, advanced forensic search blends VLM-generated descriptions with structured indexes. Visionplatform.ai's VP Agent Search turns video content into searchable text, so investigators can run free-text searches that resemble how humans describe events. This makes search more intuitive and reduces training time for new operators. At the same time, server-side processing keeps models and video local, supporting compliance with privacy rules and the EU AI Act.
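The sketch below ranks clips by simple keyword overlap to illustrate free-text search over generated descriptions. It is deliberately a stand-in. It is not how VP Agent Search works, and a VLM-based system would typically compare semantic embeddings rather than exact words. The stopword list and scoring are assumptions.

```python
import re
from typing import Dict, List, Set, Tuple

STOPWORDS = {"a", "an", "the", "in", "on", "at", "of", "to", "into", "and"}


def tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric words, minus stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def text_search(query: str, descriptions: Dict[str, str],
                top_k: int = 5) -> List[Tuple[str, float]]:
    """Rank clip descriptions by the fraction of query terms they contain."""
    q = tokens(query)
    if not q:
        return []
    scored = []
    for clip_id, desc in descriptions.items():
        overlap = len(q & tokens(desc)) / len(q)
        if overlap > 0:
            scored.append((clip_id, overlap))
    scored.sort(key=lambda s: s[1], reverse=True)
    return scored[:top_k]


descriptions = {
    "c1": "A red truck enters the dock area",
    "c2": "A white van parks near the gate",
    "c3": "Red car in the dock area",
}
print(text_search("red truck entering dock area", descriptions))
```

Here "entering" does not match "enters". Semantic search over VLM descriptions improves on keyword matching precisely for such paraphrases.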

Third, predictive search and anomaly detection are the next frontier. Machine learning models can surface anomalous patterns that human-defined filters miss. For example, an anomaly detector flags motion patterns that deviate from a baseline, and AI-powered search can then return similar past events, which aids investigation and pattern discovery. Together, granular filters and predictive models provide a powerful tool for both proactive and reactive work.
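A very simple baseline-deviation detector flags observations whose z-score against historical data meets or exceeds a threshold. This sketch assumes a per-interval motion metric such as motion events per hour. The metric and the threshold of 3 standard deviations are illustrative, and real anomaly models are usually far richer.

```python
import statistics
from typing import List


def flag_anomalies(history: List[float], observed: List[float],
                   z_threshold: float = 3.0) -> List[int]:
    """Indices of observed values whose |z-score| against the history
    (mean and population standard deviation) is at least z_threshold."""
    mean = statistics.mean(history)
    std = statistics.pstdev(history)
    if std == 0:
        # Perfectly flat baseline: any change at all counts as anomalous.
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / std >= z_threshold]


baseline = [10, 12, 11, 9, 10, 12, 11, 9]  # e.g. motion events per hour
print(flag_anomalies(baseline, [10, 40, 11, 2]))
```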

Finally, practical features like searchable thumbnails, playback trimming, and export workflows make the output usable in court. Advanced search supports chaining: a query returns clips, the operator refines based on visual checks, and the platform annotates the subset for export as video evidence. This workflow reduces the cognitive load on investigators and lets teams focus on interpretation rather than retrieval.
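One common way to support chain of custody on export is to record a cryptographic hash of each exported file, so any later modification can be detected. The sketch uses SHA-256 from Python's standard library. The manifest layout and function names are hypothetical, not a format required by any court or product.

```python
import hashlib
from datetime import datetime, timezone
from typing import Any, Dict, List


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large clips need little memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def export_manifest(clip_paths: List[str], case_id: str, operator: str,
                    annotations: Dict[str, str]) -> Dict[str, Any]:
    """Record who exported which clips, when, with their hashes and notes."""
    return {
        "case_id": case_id,
        "exported_by": operator,
        "exported_at": datetime.now(timezone.utc).isoformat(),
        "clips": [
            {"file": p, "sha256": sha256_file(p), "annotation": annotations.get(p, "")}
            for p in clip_paths
        ],
    }


def verify(manifest: Dict[str, Any]) -> bool:
    """True only if every exported file still matches its recorded hash."""
    return all(sha256_file(e["file"]) == e["sha256"] for e in manifest["clips"])
```

Storing the manifest separately from the clips, for example in a case management system, lets a reviewer re-run `verify` before the evidence is presented.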

FAQ

What is forensic video search and why does it matter?

Forensic video search is the process of locating and extracting relevant video evidence from surveillance archives. It matters because it saves investigators time, reduces workload, and improves the chances of resolving cases by quickly surfacing relevant footage.

How does AI improve forensic investigations?

AI automates detection, indexing, and ranking of events, which accelerates review and reduces manual steps. AI also links related clips across cameras and timelines so investigators can follow events and close cases faster.

Can metadata really speed up investigations?

Yes. Metadata such as timestamps, camera ID, and object tags can filter thousands of hours of video down to minutes. This targeted approach reduces retrieval and playback demands and streamlines evidence collection.

Is it possible to unify footage from different VMS and camera brands?

Yes. Using APIs and partner integrations, systems can unify third-party video and VMS events into a single searchable repository. Integrations with common platforms like Genetec Security Center help create a unified platform for cross-site investigation.

How accurate is license plate recognition in real-world conditions?

LPR accuracy depends on camera placement, lighting, and image resolution, but modern systems achieve high read rates when configured correctly. Linking plate reads across cameras provides valuable movement trails for investigations.

What role does deep learning play in video forensics?

Deep learning extracts robust features for faces, vehicles, and behaviors, which improves matching across camera angles and image quality variations. It also powers anomaly detection and predictive search for earlier detection of suspicious patterns.

How does on-prem processing help with compliance?

On-prem processing keeps video data and models inside the organisation, reducing cloud transfer risks and helping meet legal and regulatory requirements like the EU AI Act. It also supports auditable chains of custody for video evidence.

Can advanced forensic search work with cameras without built-in analytics?

Yes. Server-side indexing and machine vision can analyse recorded video from cameras without embedded analytics. The platform can generate metadata and thumbnails for searchable access.

How do AI agents assist control room operators?

AI agents verify alarms, provide contextual explanations, and recommend actions based on correlated data from video, access control, and procedures. This reduces false alarms and supports faster decision-making.

Where can I learn more about airport-focused forensic search features?

For airport-specific solutions, see resources on forensic search in airports and related detection features such as people detection and ANPR/LPR in airports. These pages explain how integrated analytics support operational and security needs: forensic search in airports, people detection in airports, and ANPR/LPR in airports.