Overview of Temporal Navigation in Video Understanding

Video understanding sits at the intersection of perception and context. It draws on computer vision and language to make sense of moving scenes. In dynamic contexts, systems must not only detect objects but also follow how those objects change and interact over time. Temporal navigation in this setting means tracking events, ordering them, and linking causes to effects as the sequence unfolds. It differs from static image analysis because a single frame cannot show a beginning or an outcome. Instead, systems must process sequences of frames and maintain state. This need places a premium on efficient pipelines and clear frameworks for continuous inference.

Temporal understanding requires a stack of capabilities. First, systems must extract frame-level features quickly. Then, they must map those features into a structured representation for higher-level semantic interpretation. Finally, they must use that structure to answer questions, make decisions, or trigger actions. For operational settings, we must build models that can operate on live feeds with bounded latency. For example, control rooms often need to verify alarms and provide operators with context in seconds. visionplatform.ai builds on this idea by converting camera feeds into human-readable descriptions, and then letting AI agents reason over that stream to propose actions when needed. This approach helps reduce time per alarm and supports on-prem privacy constraints.

To ground the discussion, consider the SOK-Bench dataset. It helps evaluate how well models handle situated knowledge and temporal links across clips, and it gives researchers a clear benchmark for comparing methods (SOK-Bench paper). Dense video captioning surveys also show how larger annotated collections enable richer training and better evaluation of event sequencing (dense captioning survey). Finally, streaming approaches demonstrate how large language models can reason as they read incoming data (StreamingThinker). Together, these works frame temporal navigation in video understanding. They highlight the need to unify short-term perception and longer-term inference across live video streams.

Task Taxonomy for Video Reasoning

Video reasoning organizes into a clear taxonomy of tasks. At the lowest level, frame-level detection identifies entities such as people, vehicles, or objects. These detectors form the input to activity recognition modules that identify actions in short clips. Next, event sequencing assembles those actions into higher-level instances, such as “approach, loiter, then depart.” At a higher tier, hierarchical reasoning tasks form scene-level inferences and causal chains. These tasks require relational reasoning and an understanding of temporal dependencies across shots and camera views.
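
As a rough illustration of event sequencing, the sketch below checks whether the recognised actions for one tracked entity contain the pattern "approach, loiter, depart" in order. The `Action` record, its field names, and `matches_sequence` are hypothetical names chosen for this example; they do not come from any system described in this article.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence


@dataclass(frozen=True)
class Action:
    """Hypothetical output of an activity recognition module."""
    track_id: int   # entity the action belongs to
    label: str      # e.g. "approach", "loiter", "depart"
    start_s: float  # start time in seconds


def matches_sequence(actions: Iterable[Action], track_id: int,
                     pattern: Sequence[str]) -> bool:
    """Return True if `pattern` occurs as an ordered subsequence of the
    actions for `track_id`. Unrelated actions may be interleaved."""
    ordered = sorted((a for a in actions if a.track_id == track_id),
                     key=lambda a: a.start_s)
    idx = 0
    for action in ordered:
        if idx < len(pattern) and action.label == pattern[idx]:
            idx += 1
    return idx == len(pattern)


actions = [
    Action(7, "approach", 1.0),
    Action(9, "walk", 2.0),
    Action(7, "loiter", 4.5),
    Action(7, "look_around", 6.0),
    Action(7, "depart", 30.0),
]
PATTERN = ("approach", "loiter", "depart")
print(matches_sequence(actions, 7, PATTERN))
print(matches_sequence(actions, 9, PATTERN))
```

Matching on an ordered subsequence rather than an exact run is a design choice here: it tolerates extra actions between the steps of interest, at the cost of possibly matching steps that are far apart in time.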

We can divide core tasks into categories. Perception tasks include people and vehicle detection, ANPR/LPR, and PPE checks. For surveillance and airport contexts, visionplatform.ai uses detectors that sit on-prem and stream structured events into an agent pipeline. For forensic workflows, the platform supports natural language search over recorded timelines, which complements classic forensic search tools (forensic search). Decision tasks then combine detections with context. For example, an intrusion detector flags an event. Then a reasoning module checks access logs, nearby camera views, and recent activity to verify the alert. This blends video-LLMs and agent logic to reduce false positives (intrusion detection).
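
The decision step in that example can be sketched as a small rule check. This is a minimal illustration under stated assumptions, not the platform's implementation: the `Alert`, `AccessEvent`, and `Detection` records, the 60-second window, and the three verdicts are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Alert:
    camera_id: str
    zone: str
    time_s: float


@dataclass(frozen=True)
class AccessEvent:
    zone: str
    time_s: float
    granted: bool


@dataclass(frozen=True)
class Detection:
    camera_id: str
    label: str
    time_s: float


def verify_intrusion(alert: Alert, access_log: List[AccessEvent],
                     nearby: List[Detection],
                     window_s: float = 60.0) -> Tuple[str, str]:
    """Return a (verdict, reason) pair for an intrusion alert.

    Assumed rules: a recent granted access in the same zone dismisses the
    alert; a person seen on another camera within the window escalates it;
    anything else is left for manual review.
    """
    authorised = any(
        e.zone == alert.zone and e.granted
        and 0.0 <= alert.time_s - e.time_s <= window_s
        for e in access_log
    )
    if authorised:
        return "dismiss", "access was granted in this zone shortly before"

    corroborated = any(
        d.label == "person" and d.camera_id != alert.camera_id
        and abs(d.time_s - alert.time_s) <= window_s
        for d in nearby
    )
    if corroborated:
        return "escalate", "person seen on a nearby camera within the window"

    return "review", "no access grant and no corroborating detection"


alert = Alert("cam-1", "gate-A", 100.0)
print(verify_intrusion(alert, [AccessEvent("gate-A", 80.0, True)], []))
```

Returning the reason alongside the verdict keeps the decision explainable to an operator, which matches the article's emphasis on context for alarm verification.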

Higher-level tasks include video question answering and multi-turn scenario evaluation. These require linking entities to trajectories over extended sequences. They also require spatiotemporal models that can represent graphs of entities and their interactions. For real deployments, we ask models to perform scene summarisation, generate human-readable incident reports, and recommend actions. For example, the VP Agent can pre-fill incident forms based on structured detections, which speeds operator response. The taxonomy therefore spans from frame extraction to causal chain assembly and to decision support. This structure helps researchers and practitioners pick the right dataset and evaluation method for each task.

Benchmark Evaluation for Video Understanding

Benchmarks shape progress by providing standard datasets, metrics, and baselines. SOK-Bench stands out because it aligns situated video clips with open-world knowledge and reasoning tasks. The dataset contains clips with annotations that require linking visual evidence to external knowledge. The SOK-Bench paper gives a clear description of its structure and evaluation goals (SOK-Bench). Researchers use it to evaluate whether models can ground answers in observed events and aligned facts.

Evaluation covers both accuracy and temporal fidelity. Recent results report accuracy improvements of roughly 15–20% on SOK-Bench compared to earlier baselines, which indicates stronger temporal reasoning and knowledge alignment (SOK-Bench results). Latency is also a key metric. StreamingThinker shows about a 30% reduction in processing latency when models reason incrementally rather than in batches (StreamingThinker). Order preservation matters too. A model that names the right events but reports them in the wrong temporal order will fail many operational checks.
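
Two of these metrics are easy to make concrete. The sketch below scores order preservation as the fraction of event pairs whose relative order matches a reference timeline (a pairwise-concordance measure, chosen here for illustration; the benchmarks above may define it differently) and computes relative latency reduction. The function names are hypothetical, event labels are assumed to be unique, and the timings in the usage lines are made-up numbers, not measured results.

```python
from itertools import combinations
from typing import Sequence


def order_preservation(predicted: Sequence[str],
                       reference: Sequence[str]) -> float:
    """Fraction of event pairs in `predicted` whose relative order agrees
    with `reference`. Events absent from the reference are ignored.
    Returns 1.0 when fewer than two shared events exist."""
    ref_rank = {event: i for i, event in enumerate(reference)}
    shared = [event for event in predicted if event in ref_rank]
    pairs = list(combinations(range(len(shared)), 2))
    if not pairs:
        return 1.0
    concordant = sum(
        1 for i, j in pairs if ref_rank[shared[i]] < ref_rank[shared[j]]
    )
    return concordant / len(pairs)


def latency_reduction(batch_s: float, streaming_s: float) -> float:
    """Relative reduction in latency: (batch - streaming) / batch."""
    if batch_s <= 0:
        raise ValueError("batch_s must be positive")
    return (batch_s - streaming_s) / batch_s


reference = ["enter", "loiter", "exit"]
predicted = ["enter", "exit", "loiter"]   # one pair is swapped
print(order_preservation(predicted, reference))

# Illustrative timings only: 10 s in batch mode versus 7 s when streaming.
print(latency_reduction(10.0, 7.0))
```

With one of three pairs out of order, the score is two thirds; a drop from 10 s to 7 s corresponds to a 30% reduction.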

Other benchmarks complement SOK-Bench. Dense video captioning datasets offer long annotations across many events. Larger datasets with 10,000+ annotated clips support richer training and can improve description quality for dense captioning and downstream evaluation (dense captioning survey). When selecting a benchmark, teams should match their target task and deployment scenario. For operational control rooms, datasets that reflect camera angles, occlusions, and domain-specific objects work best. visionplatform.ai addresses this by enabling teams to improve pre-trained detectors with site-specific data and then map events into explainable summaries for operator workflows.

Temporal Reason Units: Real-Time Chain-of-Thought

StreamingThinker introduces a streaming reasoning unit designed to support chain-of-thought generation while reading incoming data. The core idea lets a large language model component produce intermediate reasoning as new frames or events arrive. This contrasts with batch pipelines that wait for full clips before generating any inference. The streaming mechanism keeps a manageable working memory and enforces order-preserving updates to the internal chain-of-thought. As a result, systems can answer multi-turn queries faster and with coherent temporal links.

The streaming reasoning unit applies quality control to each intermediate step. It filters noisy inputs, checks consistency with previous updates, and discards low-confidence inferences. Those mechanisms reduce drift and help the system maintain a grounded narrative. In tests, StreamingThinker reduced end-to-end latency by roughly 30% compared to batch models, which makes it attractive for real-time operations (StreamingThinker). The approach also helps when models must incorporate external knowledge or align to a grounded dataset, because the incremental nature eases integration with external APIs and knowledge graphs.
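
To make the idea of quality-controlled, order-preserving updates concrete, here is a toy sketch. It is not StreamingThinker's implementation: the `Step` record, the `StreamingReasoner` class, the confidence threshold, and the memory size are all assumptions made for illustration, and a real system would generate the step text with a language model.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List


@dataclass(frozen=True)
class Step:
    """Hypothetical intermediate reasoning step."""
    time_s: float      # timestamp of the evidence the step refers to
    text: str          # the intermediate inference
    confidence: float  # score in [0, 1] from an assumed upstream model


class StreamingReasoner:
    """Keeps a bounded, time-ordered chain of accepted steps."""

    def __init__(self, min_confidence: float = 0.5, memory_size: int = 4):
        self.min_confidence = min_confidence
        # deque(maxlen=...) silently evicts the oldest step when full.
        self.memory: Deque[Step] = deque(maxlen=memory_size)

    def update(self, step: Step) -> bool:
        """Accept `step` if it passes quality control; return the decision."""
        if step.confidence < self.min_confidence:
            return False  # discard low-confidence inference
        if self.memory and step.time_s < self.memory[-1].time_s:
            return False  # reject updates that would break temporal order
        self.memory.append(step)
        return True

    def chain(self) -> List[str]:
        return [s.text for s in self.memory]


reasoner = StreamingReasoner(min_confidence=0.5, memory_size=2)
for step in [
    Step(1.0, "person approaches gate", 0.9),
    Step(2.0, "person is a delivery driver", 0.2),  # dropped: low confidence
    Step(0.5, "gate was open", 0.8),                # dropped: out of order
    Step(3.0, "person loiters at gate", 0.7),
    Step(9.0, "person departs", 0.8),               # evicts the oldest step
]:
    reasoner.update(step)
print(reasoner.chain())
```

Rejecting late-arriving steps outright is the simplest policy; a production system might instead buffer and re-sort them within a short window.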

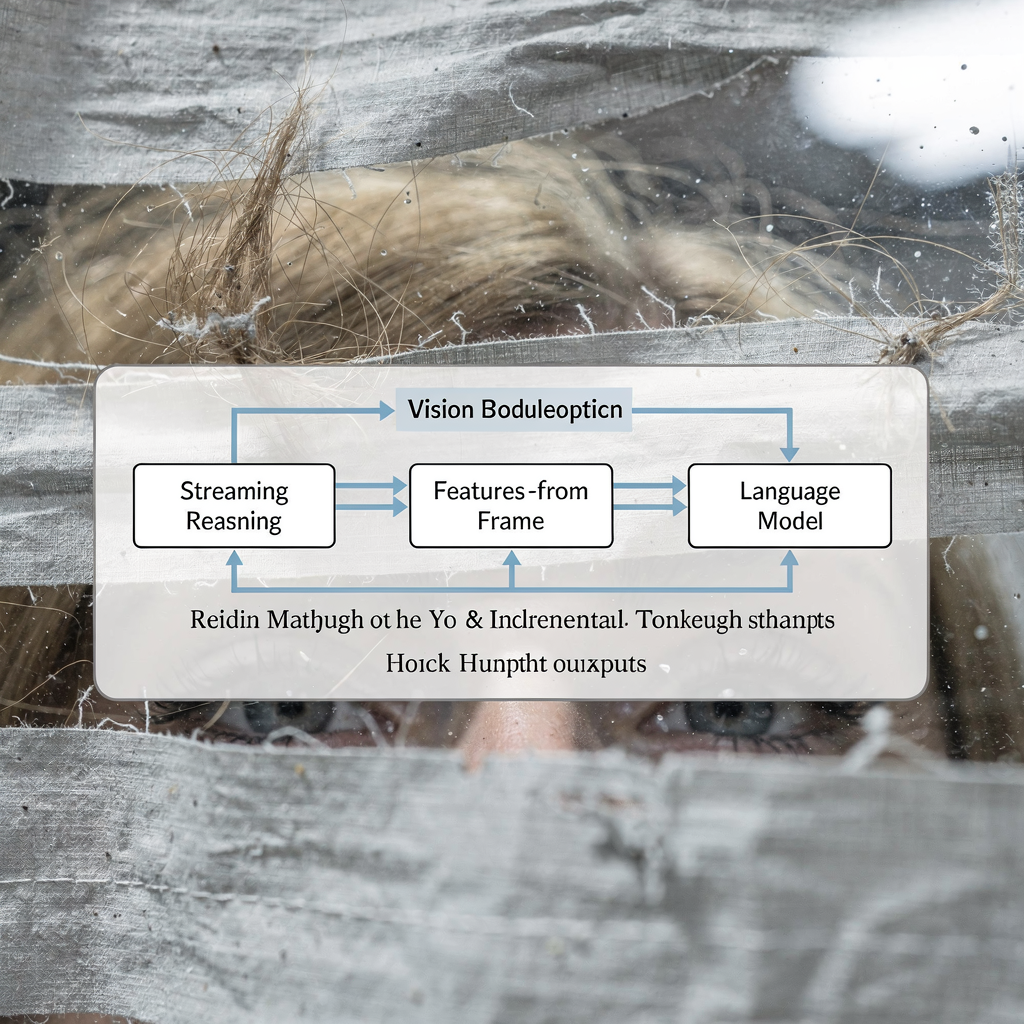

Practical systems combine streaming reasoning units with specialists. For example, a vision module runs frame-level detection. Then a small neural aggregator builds short-term trajectories. Next, an LLM consumes that structured summary and generates explanations. This pipeline supports a hybrid of neural perception and symbolic composition. visionplatform.ai follows a similar pattern: detectors stream events into a Vision Language Model, which in turn feeds VP Agent Reasoning. The agent verifies alarms, checks logs, and recommends actions, thus turning raw detections into operational outcomes. This hybrid design helps control rooms adapt to diverse scenarios and keep decision trails auditable.
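
The middle of such a pipeline, turning per-frame detections into a compact text summary that a language model could consume, might look like the sketch below. It uses plain grouping by track ID in place of a neural aggregator, and the tuple layout, function names, and pixel units are assumptions for this example only.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical detection layout: (time_s, track_id, label, x, y)
Detection = Tuple[float, int, str, float, float]
Point = Tuple[float, str, float, float]


def build_trajectories(detections: List[Detection]) -> Dict[int, List[Point]]:
    """Group detections by track ID, ordered by time."""
    tracks: Dict[int, List[Point]] = defaultdict(list)
    for time_s, track_id, label, x, y in sorted(detections):
        tracks[track_id].append((time_s, label, x, y))
    return dict(tracks)


def summarise(tracks: Dict[int, List[Point]]) -> List[str]:
    """Produce one human-readable line per track."""
    lines = []
    for track_id in sorted(tracks):
        points = tracks[track_id]
        t0, label, x0, y0 = points[0]
        t1, _, x1, y1 = points[-1]
        # Straight-line displacement between first and last observation.
        moved = ((x1 - x0) ** 2 + (y1 - y0) ** 2) ** 0.5
        lines.append(
            f"{label} #{track_id}: {t0:.1f}s to {t1:.1f}s, moved {moved:.1f} px"
        )
    return lines


detections = [
    (1.0, 1, "person", 3.0, 4.0),
    (0.0, 1, "person", 0.0, 0.0),
    (0.5, 2, "vehicle", 10.0, 10.0),
]
for line in summarise(build_trajectories(detections)):
    print(line)
```

The resulting lines would then be placed in the prompt of whatever language model the deployment uses; that step is omitted because it depends on the chosen model and API.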

Insights and Updates on StreamingThinker and SOK-Bench

Key insights have emerged from recent work and experimental evaluations. First, real-time inference matters. Systems that reason while they read can reduce latency and improve responsiveness in operational settings. Second, temporal integrity remains essential. Models must preserve order and avoid hallucination when assembling causal chains. Third, multimodal fusion enhances robustness. When audio, metadata, and access logs augment visual signals, models can verify events more reliably. SOK-Bench and StreamingThinker exemplify these points by focusing on aligned knowledge and incremental reasoning respectively (SOK-Bench, StreamingThinker).

Recent updates in the field include extended sequences and richer annotation schemas. Benchmarks now ask models to handle longer clips, to ground answers in external facts, and to produce fine-grained temporal labels. Dense captioning surveys note larger datasets and more varied event types, which help models generalise across settings (dense captioning survey). In addition, experimental systems test video-LLMs that combine small vision encoders with lightweight LLM reasoning for on-prem inference. These multimodal LLMs (MLLMs) aim to balance capability with privacy and compute constraints.

Experts stress explainability and deployment readiness. As Dr Jane Smith notes, “The ability to reason over video streams in real time opens up transformative possibilities for AI systems, enabling them to understand complex scenarios as they happen rather than retrospectively” (Dr Jane Smith). Similarly, developers of streaming approaches point out that “Streaming reasoning units with quality control not only improve the accuracy of chain-of-thought generation but also ensure that the reasoning process respects the temporal order of events” (StreamingThinker authors). For operators, these advances mean fewer false alarms and faster, more consistent recommendations. visionplatform.ai tightly couples detectors, a Vision Language Model, and agents to deliver those practical benefits on-prem.

Limitations in Video Reasoning and Future Directions

The field still faces significant limitations. First, noisy or incomplete streams remain a big problem. Missing frames, occlusions, and low-light conditions can break detections. Second, scaling to longer video durations stresses both memory and computational budgets. Models often lose temporal context when sequences extend beyond a few minutes. Third, multimodal integration poses alignment and latency challenges. Syncing audio, metadata, sensor logs, and video frames requires careful design of buffers and timestamps.
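
One small piece of that synchronisation problem, attaching an audio or log event to the nearest video frame by timestamp, can be sketched as follows. The function name and the tolerance value are assumptions for illustration, and the sketch assumes all sources already share one clock.

```python
from bisect import bisect_left
from typing import Optional, Sequence


def nearest_frame(frame_times: Sequence[float], event_time: float,
                  tolerance_s: float = 0.2) -> Optional[int]:
    """Return the index of the frame closest to `event_time`, or None if
    no frame lies within `tolerance_s`. `frame_times` must be sorted."""
    if not frame_times:
        return None
    pos = bisect_left(frame_times, event_time)
    # Only the neighbours on either side of the insertion point can be closest.
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(frame_times)]
    best = min(candidates, key=lambda i: abs(frame_times[i] - event_time))
    if abs(frame_times[best] - event_time) > tolerance_s:
        return None
    return best


frame_times = [0.0, 0.5, 1.0, 1.5]      # seconds, one entry per frame
print(nearest_frame(frame_times, 0.6))  # close to the frame at 0.5 s
print(nearest_frame(frame_times, 3.0))  # no frame within tolerance
```

Returning `None` for events outside the tolerance makes gaps from missing frames explicit instead of silently attaching the event to an unrelated frame.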

To address these limits, researchers propose hybrid frameworks and compression strategies. For example, temporal compression can reduce redundant frames while preserving key events. Graph-based representations can summarise entity interactions and enable efficient spatiotemporal queries. Other teams focus on improving explainable AI so models can justify their intermediate steps to operators. Standardised APIs for event streams and reasoning modules would also help practitioners integrate components across vendors.
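
A very simple form of temporal compression is to keep a frame only when it differs enough from the last frame that was kept. The sketch below does this with mean absolute pixel difference over flattened grayscale values; the threshold, the function names, and the use of plain Python lists instead of real image arrays are assumptions for illustration, and published methods are typically more sophisticated.

```python
from typing import List, Sequence

Frame = Sequence[float]  # flattened grayscale pixels, a stand-in for an image


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute per-pixel difference between two frames."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def compress(frames: Sequence[Frame], threshold: float = 10.0) -> List[int]:
    """Return indices of frames to keep. The first frame is always kept;
    later frames are kept only if they differ from the last kept frame
    by more than `threshold`."""
    kept: List[int] = []
    for i, frame in enumerate(frames):
        if not kept or mean_abs_diff(frame, frames[kept[-1]]) > threshold:
            kept.append(i)
    return kept


frames = [
    [0, 0, 0, 0],
    [1, 0, 1, 0],      # nearly identical to frame 0
    [50, 50, 50, 50],  # scene change
    [52, 50, 51, 50],  # nearly identical to frame 2
    [0, 0, 0, 0],      # scene change back
]
print(compress(frames))
```

Comparing against the last kept frame, rather than the immediately preceding one, prevents slow drift from going unnoticed when each individual step is below the threshold.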

Future work needs to enable cross-domain transfer and adapt to site-specific reality. Operational deployments require on-prem models that respect data governance and the EU AI Act. visionplatform.ai adopts an on-prem approach to give customers control over video, models, and deployment. This setup supports fine-grained model updates, custom classes, and audit trails. Researchers must also refine benchmarks to include long video scenarios, more diverse modalities, and metrics that evaluate temporal fidelity and explainability. Finally, the community should work toward standardising evaluation protocols so labs can fairly compare approaches and identify which frameworks outperform baselines under realistic conditions.

FAQ

What is temporal navigation in video understanding?

Temporal navigation refers to tracking events and their relationships across time in a video. It focuses on ordering, causal links, and how sequences of actions produce outcomes.

How does SOK-Bench help evaluate models?

SOK-Bench provides annotated clips that align visual evidence with open-world knowledge. Researchers use it to test whether models can ground answers in observed events and external facts (SOK-Bench).

What gains have benchmarks shown recently?

State-of-the-art systems reported accuracy improvements of about 15–20% on SOK-Bench over prior baselines, reflecting better temporal and contextual reasoning (SOK-Bench results).

What is StreamingThinker and why does it matter?

StreamingThinker is a streaming reasoning approach that lets models produce incremental chain-of-thought as frames arrive. It reduces latency and helps maintain order in the reasoning process (StreamingThinker).

Can these techniques work in control rooms?

Yes. Systems that convert video into human-readable descriptions and then let agents reason over those summaries support faster verification and decision making. visionplatform.ai combines detectors, a Vision Language Model, and agents to provide that workflow.

How do multimodal signals improve reasoning?

Adding audio, logs, and metadata helps disambiguate events and verify detections. Multimodal fusion reduces false positives and provides richer context for incident reports.

What are the main deployment challenges?

Key challenges include handling noisy streams, scaling to long video, and maintaining privacy and compliance. On-prem deployments and auditable pipelines help address these concerns.

Where can I learn more about dense captioning datasets?

Surveys on dense video captioning summarise techniques and dataset growth, showing how larger annotated collections support richer training and evaluation (dense captioning survey).

How do I evaluate latency and order preservation?

Measure end-to-end response time for live queries and check whether model outputs respect the chronological order of events. Streaming approaches often yield lower latency and better order preservation.

What practical tools exist for forensic search in airports?

Forensic search tools let operators query recorded video using natural language and event summaries. For airport use cases, see visionplatform.ai’s forensic search features for targeted queries across timelines (forensic search).