AI and video surveillance: transforming video search

AI-driven semantic video search brings meaning to CCTV feeds. It moves beyond tags and pixel matching to interpret scenes, actions, and intent. In practice, a security operator can type a natural phrase and retrieve the most relevant video, not just frames that match a color or shape. For example, instead of locating a “red car,” operators can search for “person entering a restricted area” and retrieve the matching video segments. This shift transforms how teams work, because it reduces time spent scrubbing timelines and increases situational awareness.

Market forces push this change. The global video surveillance market is projected to reach USD 144.4 billion by 2027 at a CAGR of 10.4% (source). At the same time, CCTV systems produce massive amounts of video data every day. Estimates point to more than a billion operational surveillance cameras worldwide, with petabytes of footage generated daily (source). Consequently, manual review cannot scale. Semantic systems scale better, because they index meaning and not only low-level features.

Semantic video search using AI fuses computer vision, natural language, and contextual metadata. It lets users pose complex natural-language queries and receive ranked results. Large language models and vision models interpret user intent and map it to video descriptors. As a result, search technology becomes conversational. visionplatform.ai applies these ideas to turn existing cameras and VMS into searchable operational sensors. Forensic use cases such as rapid incident reconstruction benefit directly. For a detailed forensic example, see our forensic search in airports resource, which shows how natural language descriptions speed investigations.

First, semantic systems tag objects and actions. Next, they store semantic indexes for fast retrieval. Then, operators query using plain language and get contextual clips. Finally, teams verify and act. This workflow reduces false positives and yields faster responses. For teams that monitor perimeters, see the practical detection examples in our intrusion detection in airports page to understand how contextual alerts cut noise. Overall, AI and semantic search lift CCTV from a passive recorder to an active, searchable knowledge source.

computer vision and metadata: extract meaningful insights

Computer vision models form the backbone of semantic processing. Convolutional Neural Networks (CNNs) detect objects in video frames. Recurrent networks and temporal models link detections across frames to recognize actions and interactions. In short, these models translate pixels into events. Then metadata layers add human-friendly context. Metadata annotates who did what, when, and where. That annotation turns raw footage into searchable content.
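Recurrent and temporal networks are one way to link detections over time. To make the idea of "linking detections across frames" concrete, here is a minimal sketch that swaps in a much simpler classical technique: greedy intersection-over-union (IoU) matching. It assumes a detector has already produced `(label, box)` pairs per frame; the `Track` structure and the `min_iou` threshold are illustrative choices, not part of any specific product.

```python
from dataclasses import dataclass, field

Box = tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    track_id: int
    label: str
    boxes: list[tuple[int, Box]] = field(default_factory=list)  # (frame index, box)


def link_detections(frames: list[list[tuple[str, Box]]], min_iou: float = 0.3) -> list[Track]:
    """Greedily extend tracks frame by frame; unmatched detections start new tracks.

    A track that finds no match in a frame is dropped from the active set
    (no gap tolerance), which keeps the sketch short.
    """
    tracks: list[Track] = []
    active: list[Track] = []
    for t, detections in enumerate(frames):
        next_active: list[Track] = []
        unmatched = list(active)
        for label, box in detections:
            best, best_iou = None, min_iou
            for tr in unmatched:
                score = iou(tr.boxes[-1][1], box)
                if tr.label == label and score >= best_iou:
                    best, best_iou = tr, score
            if best is None:
                best = Track(track_id=len(tracks), label=label)
                tracks.append(best)
            else:
                unmatched.remove(best)
            best.boxes.append((t, box))
            next_active.append(best)
        active = next_active
    return tracks
```

Each resulting track is a per-object timeline, which is the raw material an action model (or a rule such as "dwell time in zone") needs before metadata can say who did what, when, and where.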

Deep models label people, vehicles, and behaviors. They also assign attributes like clothing color, direction, and activity type. This metadata can include natural language descriptions for each video segment. visionplatform.ai uses an on-prem Vision Language Model to convert detections into textual descriptions and provide search-ready metadata. As a result, operators can search using plain phrases such as “person loitering near gate after hours.” For a related use case, check our loitering detection guidance at loitering detection in airports.
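The step from detections to search-ready text can be illustrated with a deliberately simple stand-in. visionplatform.ai uses a Vision Language Model for this; the sketch below instead fills a fixed sentence template from structured attributes, just to show what a per-segment description might contain. The `SegmentEvent` fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class SegmentEvent:
    camera_id: str
    start: datetime
    object_class: str       # e.g. "person", "vehicle"
    attributes: list[str]   # e.g. ["red jacket", "backpack"]
    activity: str           # e.g. "loitering"
    zone: str               # e.g. "gate 3"


def describe(event: SegmentEvent) -> str:
    """Render one detected event as a plain-language, search-ready sentence."""
    subject = event.object_class
    if event.attributes:
        subject += " with " + " and ".join(event.attributes)
    when = event.start.strftime("%Y-%m-%d %H:%M")
    return f"{subject} {event.activity} near {event.zone} on camera {event.camera_id} at {when}"


event = SegmentEvent("cam-07", datetime(2024, 5, 1, 23, 14), "person",
                     ["red jacket"], "loitering", "gate 3")
print(describe(event))
```

A template is rigid and only covers attributes someone anticipated; a vision language model can describe unplanned details, which is the reason to use one in production.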

Studies show big operational gains. Semantic search can cut manual review time by up to 70% (source). In many deployments, deep networks reach accuracy rates above 90% for complex activity recognition (source). These numbers matter because they lower the burden on human examiners and improve evidence quality. Moreover, metadata enables efficient indexing and search across vast video libraries, so teams find video clips quickly without exhaustive manual scrubbing.

In practice, systems must balance accuracy and latency. Low-latency tagging lets operators act in real time, while full annotation supports later forensic work. Semantic annotation supports video summarization, temporal indexing, and audit trails. Combined, computer vision and machine learning form a pipeline that extracts events, tags context, and stores metadata for fast retrieval. This pipeline improves situational awareness and operational decision-making in busy control rooms.

vector, embeddings and vector database: powering vector search

Embeddings map visual concepts into numbers. An embedding is a compact numeric vector. Embeddings generated from frames or segments capture semantics in a format suitable for similarity math. In a vector space, similar scenes lie near each other. Therefore, queries become vector lookups instead of keyword matches. This approach powers scalable and flexible retrieval across video datasets.
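"Near each other" is usually measured with cosine similarity. The sketch below uses tiny, made-up 4-dimensional vectors purely for illustration; real embedding models output hundreds of dimensions, and the values here do not come from any model.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical embeddings for three scenes.
person_at_gate = [0.9, 0.1, 0.0, 0.3]
person_near_door = [0.8, 0.2, 0.1, 0.4]
parked_car = [0.0, 0.9, 0.8, 0.1]

sim_close = cosine_similarity(person_at_gate, person_near_door)
sim_far = cosine_similarity(person_at_gate, parked_car)
print(f"gate vs door: {sim_close:.3f}, gate vs car: {sim_far:.3f}")
```

The two "person" scenes score close to 1, while the parked car scores near 0, which is exactly the property a similarity search exploits.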

To be precise, a high-dimensional vector captures appearance, action, and contextual cues. The system stores these vectors in a vector database for fast similarity search. A vector database supports nearest-neighbor search and optimized indexing. It also enables low-latency responses for real-time use. For example, when an operator submits a query, the system encodes the query as a vector and retrieves nearest vectors representing relevant video segments. This vector search supports retrieval of temporally linked video segments and entire video clips that match semantic intent.
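The encode-then-retrieve loop can be sketched with a brute-force, in-memory store. `InMemoryVectorStore` is a hypothetical name, and exhaustive scoring is only practical for small collections; production vector databases use approximate nearest-neighbor indexes (such as HNSW) to keep latency low at scale.

```python
import heapq
import math
from dataclasses import dataclass


@dataclass
class Record:
    segment_id: str
    vector: list[float]  # stored L2-normalized, so a dot product equals cosine similarity


def _normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v] if n else list(v)


class InMemoryVectorStore:
    """Exact nearest-neighbor search by scanning every stored vector."""

    def __init__(self) -> None:
        self._records: list[Record] = []

    def add(self, segment_id: str, vector: list[float]) -> None:
        self._records.append(Record(segment_id, _normalize(vector)))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        q = _normalize(query)
        scored = ((sum(a * b for a, b in zip(q, r.vector)), r.segment_id) for r in self._records)
        # Keep the k highest-scoring segments, best first.
        return [(sid, score) for score, sid in heapq.nlargest(k, scored)]


store = InMemoryVectorStore()
store.add("cam1/00:00-00:10", [1.0, 0.0, 0.0])
store.add("cam1/00:10-00:20", [0.9, 0.1, 0.0])
store.add("cam2/00:00-00:10", [0.0, 0.0, 1.0])
print(store.search([1.0, 0.0, 0.0], k=2))
```

Because the two adjacent `cam1` segments come back together, a result list like this can be merged into one longer clip for the operator.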

Workflows for vector search follow clear steps. First, ingest video files and split them into video segments. Next, run pretrained or task-specific models to produce embeddings. Then, persist embeddings in a vector database and link them to metadata and timestamps. Finally, accept queries that get encoded into vectors and return ranked results. This process yields accurate similarity search and rapid retrieval of relevant video content. It also supports similarity search across different video types and cameras.
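The first workflow step, splitting footage into segments that carry timestamps, might look like the sketch below. It assumes fixed-length, overlapping windows; the 10-second window and 5-second stride are illustrative defaults, not recommendations. With this layout, any event no longer than `window_s - stride_s` falls entirely inside at least one window, so it is not cut in half at a boundary.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    camera_id: str
    start_s: float  # offset from the start of the recording, in seconds
    end_s: float


def split_into_segments(camera_id: str, duration_s: float,
                        window_s: float = 10.0, stride_s: float = 5.0) -> list[Segment]:
    """Cover a recording with overlapping fixed-length windows."""
    if window_s <= 0 or not 0 < stride_s <= window_s:
        raise ValueError("need window_s > 0 and 0 < stride_s <= window_s")
    segments: list[Segment] = []
    start = 0.0
    while start < duration_s:
        end = min(start + window_s, duration_s)  # last window may be shorter
        segments.append(Segment(camera_id, start, end))
        if end >= duration_s:
            break
        start += stride_s
    return segments


for seg in split_into_segments("cam-01", 22.0):
    print(seg)
```

Each `Segment` would then be passed to an embedding model, and its vector stored alongside `camera_id`, `start_s`, and `end_s`, so a search hit maps straight back to a playable position in the VMS.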

Vector methods scale with data. They perform well on large video datasets and allow efficient indexing and search. Systems can combine vector matching with rule-based filters for policy and compliance. Also, vector search integrates with existing VMS and workflows. visionplatform.ai maps vision language descriptions to vectors so operators can use natural language queries and receive precise results. In addition, teams can run the vector database on-prem to satisfy data protection rules and reduce cloud dependency. Overall, vectors and embeddings are the engine that makes efficient semantic video search possible.
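Combining vector matching with rule-based filters can be as simple as post-filtering ranked hits. The sketch assumes two hypothetical policies: a per-operator camera allow-list and a 30-day retention window. Real deployments would take these rules from the VMS or an identity provider.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Hit:
    camera_id: str
    recorded_at: datetime
    score: float  # similarity score from the vector search


def apply_policy(hits: list[Hit], allowed_cameras: set[str], now: datetime,
                 retention: timedelta = timedelta(days=30)) -> list[Hit]:
    """Drop hits the operator may not view or that are past retention; keep ranking order."""
    cutoff = now - retention
    return [h for h in hits
            if h.camera_id in allowed_cameras and h.recorded_at >= cutoff]


now = datetime(2024, 6, 1)
ranked = [
    Hit("cam-9", datetime(2024, 5, 31), 0.95),  # camera not on the allow-list
    Hit("cam-1", datetime(2024, 5, 30), 0.90),
    Hit("cam-2", datetime(2024, 4, 1), 0.80),   # older than the retention window
]
print(apply_policy(ranked, {"cam-1", "cam-2"}, now))
```

Filtering after ranking is easy to audit, but it can leave fewer than k results; many vector databases also support filtering during the search itself to avoid that.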

machine learning and multimodal: enriching context

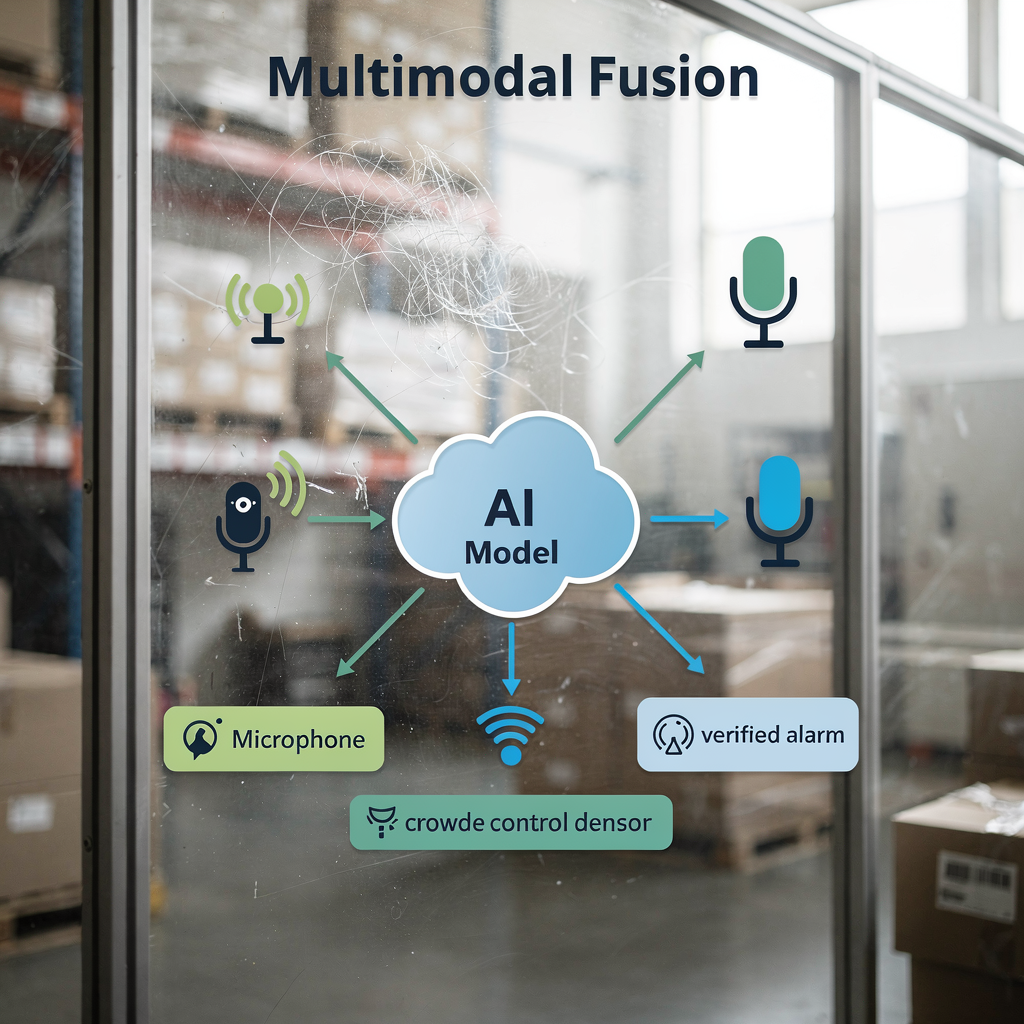

Multimodal fusion improves accuracy by combining signals. Video, audio, and sensor feeds each provide unique cues. For instance, combining visual cues with sound levels improves crowd behavior detection. When models see both motion patterns and rising sound, they detect agitation more reliably. Thus, multimodal approaches reduce false alarms and increase confidence in alerts.
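A minimal way to picture this is weighted late fusion: each modality produces its own score, and the alert fires on the combination. The weights and threshold below are hand-picked assumptions for illustration; in a trained system, a model would learn how much to trust each input.

```python
def fused_score(motion_score: float, audio_score: float,
                w_motion: float = 0.6, w_audio: float = 0.4) -> float:
    """Weighted late fusion of two per-modality scores in [0, 1]."""
    for s in (motion_score, audio_score):
        if not 0.0 <= s <= 1.0:
            raise ValueError("scores must be in [0, 1]")
    return w_motion * motion_score + w_audio * audio_score


def agitation_alert(motion_score: float, audio_score: float, threshold: float = 0.7) -> bool:
    return fused_score(motion_score, audio_score) >= threshold


# Busy but calm scene: lots of motion, normal sound level.
print(agitation_alert(0.9, 0.2))
# Elevated motion and rising sound together.
print(agitation_alert(0.8, 0.7))
```

A motion-only detector with the same 0.7 threshold would fire on the first case; requiring agreement between modalities is what suppresses that false alarm.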

Machine learning models learn to weight inputs. They integrate visual embeddings with textual logs, access control events, or IoT sensors. This integration helps confirm incidents and provide richer context. For example, an access control entry combined with a matched face detection yields stronger evidence than either source alone. visionplatform.ai’s VP Agent Reasoning correlates video analytics with VMS data and procedures to explain why an alarm matters. The platform then recommends actions or pre-fills incident reports to accelerate response.
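The access-control example can be sketched as a time-window correlation between two event streams. The event types, the shared `door_id` key, and the 10-second window are all assumptions made for this illustration; they do not describe how VP Agent Reasoning works internally.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class AccessEvent:
    door_id: str
    badge_holder: str
    at: datetime


@dataclass
class FaceMatch:
    door_id: str   # door covered by the camera that produced the match
    identity: str
    at: datetime


def corroborate(access: AccessEvent, faces: list[FaceMatch],
                window: timedelta = timedelta(seconds=10)) -> str:
    """Classify a badge event as 'confirmed', 'mismatch', or 'unverified'."""
    nearby = [f for f in faces
              if f.door_id == access.door_id and abs(f.at - access.at) <= window]
    if not nearby:
        return "unverified"   # no camera evidence either way
    if any(f.identity == access.badge_holder for f in nearby):
        return "confirmed"    # badge and face agree
    return "mismatch"         # someone else at the door: possible badge misuse


t0 = datetime(2024, 5, 1, 8, 0, 0)
badge = AccessEvent("door-4", "alice", t0)
print(corroborate(badge, [FaceMatch("door-4", "alice", t0 + timedelta(seconds=3))]))
print(corroborate(badge, [FaceMatch("door-4", "bob", t0 + timedelta(seconds=2))]))
```

The three-way outcome matters: "mismatch" is stronger evidence than either source alone, while "unverified" tells the operator that a camera check is still needed.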

Large-scale multimodal models also enable richer content discovery. They accept video plus textual reports or metadata and learn joint embeddings. As a result, queries expressed using natural language yield more precise retrieval. For systems monitoring airports, multimodal detection aids in people flow and crowd density analysis; see our crowd detection resource at crowd detection density in airports. Data fusion supports video summarization and automated reporting, which improve operator productivity and situational clarity.

Finally, multimodal pipelines enhance performance without sacrificing compliance. On-prem or edge deployments let organizations keep raw video and sensor data local. This design protects privacy and aligns with regional rules. The combination of machine learning and multimodal inputs drives richer analytics, better verification, and ultimately enhanced security for operations at scale.

artificial intelligence and large language models: refining user queries

Large language models translate human intent into actionable queries. Operators write natural language descriptions and the model maps them to semantic indexes. In practice, a user might ask, “suspicious behaviour near an ATM at night.” The system encodes that phrase, finds matching embeddings, and returns ranked video clips. This process hides complexity from the operator and speeds investigations.
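The encode-and-rank step can be shown end to end with a toy text encoder. The hashed bag-of-words `encode` function below is a stand-in assumption, not a language model: it only matches shared words, so "lingering" and "loitering" would not match, whereas a learned encoder places such synonyms close together. It uses `hashlib.md5` rather than Python's built-in `hash`, because the latter is randomized per process for strings.

```python
import hashlib
import math
import re

DIM = 64  # illustrative; learned encoders use hundreds of dimensions


def encode(text: str, dim: int = DIM) -> list[float]:
    """Toy hashed bag-of-words encoder, L2-normalized."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z]+", text.lower()):
        bucket = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def rank_segments(query: str, descriptions: dict[str, str], k: int = 3) -> list[str]:
    """Return segment IDs whose descriptions are most similar to the query."""
    q = encode(query)
    scored = [(sum(a * b for a, b in zip(q, encode(text))), seg_id)
              for seg_id, text in descriptions.items()]
    scored.sort(reverse=True)
    return [seg_id for _, seg_id in scored[:k]]


descriptions = {
    "seg-1": "person lingering near ATM at night",
    "seg-2": "delivery van parked at loading dock",
    "seg-3": "crowd at boarding gate",
}
print(rank_segments("person near the ATM at night", descriptions))
```

Swapping `encode` for a real text encoder that shares an embedding space with the video model is what turns this keyword-ish ranking into semantic search.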

NLP pipelines convert long user text into concise semantic vectors. Then the system combines those vectors with metadata filters such as time range or camera ID. This hybrid approach improves both precision and recall. In addition, fine-tuning AI models for domain-specific CCTV scenarios increases relevance. For example, a model trained on airport datasets will better recognize boarding gate congestion and access violations. For domain-specific searches, see our people detection in airports guide.
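The hybrid approach can be sketched as a metadata pre-filter followed by similarity ranking. The `IndexedSegment` structure and the filter parameters are hypothetical; in a vector database, the same idea is usually expressed as a filtered nearest-neighbor query.

```python
import math
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class IndexedSegment:
    segment_id: str
    camera_id: str
    start: datetime
    vector: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na, nb = math.sqrt(sum(x * x for x in a)), math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_search(query_vec: list[float], segments: list[IndexedSegment],
                  cameras: Optional[set[str]] = None,
                  start: Optional[datetime] = None, end: Optional[datetime] = None,
                  k: int = 5) -> list[str]:
    """Apply camera and time filters first, then rank survivors by similarity."""
    candidates = [
        s for s in segments
        if (cameras is None or s.camera_id in cameras)
        and (start is None or s.start >= start)
        and (end is None or s.start < end)
    ]
    candidates.sort(key=lambda s: cosine(query_vec, s.vector), reverse=True)
    return [s.segment_id for s in candidates[:k]]


index = [
    IndexedSegment("a", "cam-1", datetime(2024, 5, 1, 22, 0), [1.0, 0.0]),
    IndexedSegment("b", "cam-1", datetime(2024, 5, 2, 2, 0), [0.9, 0.1]),
    IndexedSegment("c", "cam-2", datetime(2024, 5, 1, 23, 0), [1.0, 0.0]),
]
night = (datetime(2024, 5, 1, 20, 0), datetime(2024, 5, 2, 6, 0))
print(hybrid_search([1.0, 0.0], index, cameras={"cam-1"}, start=night[0], end=night[1]))
```

The filters raise precision by removing clips outside the operator's scope, and ranking only within that scope keeps highly similar but irrelevant clips, like segment `c` here, from crowding out the results.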

AI video search adds conversational capabilities to control rooms. Operators can iterate with follow-up queries and refine results. Systems can also explain why clips match a query by surfacing natural language descriptions and visual highlights. This transparency helps operators trust the output and act faster. Furthermore, search relevance improves as pre-trained models are adapted with task-specific examples. Teams can start from a model trained with contrastive language-image pre-training and then fine-tune it on local video datasets to increase accuracy for their own scenes.
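Contrastive language-image pre-training (as popularized by CLIP) trains image and text encoders so that matching pairs score higher than every mismatched pair in the batch. The sketch below computes that symmetric cross-entropy (InfoNCE) loss in plain Python for intuition only. It assumes row `i` of the image batch pairs with row `i` of the text batch; real training runs on GPUs with large batches, and the temperature (0.07 here) is typically a learned parameter.

```python
import math


def _normalize(v: list[float]) -> list[float]:
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def _log_softmax_at(row: list[float], idx: int) -> float:
    """Numerically stable log-softmax of row, evaluated at position idx."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return row[idx] - log_sum


def clip_loss(image_embs: list[list[float]], text_embs: list[list[float]],
              temperature: float = 0.07) -> float:
    """Symmetric contrastive loss; image i is the positive pair of text i."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    image_to_text = -sum(_log_softmax_at(logits[i], i) for i in range(n)) / n
    text_to_image = -sum(_log_softmax_at([logits[j][i] for j in range(n)], i)
                         for i in range(n)) / n
    return (image_to_text + text_to_image) / 2


aligned = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

Fine-tuning on local footage reuses this objective (or a close variant) with site-specific clip and description pairs, which nudges the shared space toward the scenes a given control room actually sees.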

Finally, an integrated approach supports low-latency responses and auditability. By deploying models on-prem, organizations keep data private while enabling fast, secure searches. visionplatform.ai uses an on-prem Vision Language Model and AI agents to link search to reasoning and actions. This combination allows operators to retrieve relevant video content quickly and to run verified workflows with minimal manual steps. The result is a more precise, explainable, and useful search experience for video surveillance teams.

future of video: trends, ethics and safeguards

The future of video emphasizes real-time semantic alerts and edge processing. As compute moves to the edge, systems provide low-latency inference and local data protection. Edge deployments reduce bandwidth and protect sensitive video data. At the same time, architectures will support hybrid models that run lightweight inference on devices and heavier models on local servers.
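One common way to split work between device and server is a confidence cascade: the lightweight edge model answers when it is confident, and only uncertain frames are escalated. Both models are passed in as hypothetical callables, and the 0.85 acceptance threshold is an illustrative assumption.

```python
from dataclasses import dataclass
from typing import Callable

Model = Callable[[object], tuple[str, float]]  # frame -> (label, confidence)


@dataclass
class Decision:
    label: str
    confidence: float
    source: str  # "edge" or "server"


def cascade(frame: object, edge_model: Model, server_model: Model,
            accept: float = 0.85) -> Decision:
    """Answer on the edge when confident; otherwise escalate to the heavier model."""
    label, conf = edge_model(frame)
    if conf >= accept:
        return Decision(label, conf, "edge")    # frame never leaves the device
    label, conf = server_model(frame)           # only uncertain frames are sent
    return Decision(label, conf, "server")


def confident_edge(frame: object) -> tuple[str, float]:
    return ("person", 0.95)


def unsure_edge(frame: object) -> tuple[str, float]:
    return ("person", 0.50)


def server(frame: object) -> tuple[str, float]:
    return ("vehicle", 0.99)


print(cascade("frame-1", confident_edge, server))
print(cascade("frame-2", unsure_edge, server))
```

Raising `accept` sends more frames to the server (better accuracy, more bandwidth); lowering it keeps more processing and data on the device.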

Ethics and privacy concerns require strong safeguards. Surveillance tools can be misused, and risks include discriminatory targeting and unauthorized linkage of data. Researchers highlight security and privacy challenges in modern surveillance systems and recommend protections such as data minimization, access controls, and auditable logs (source). Regulation such as the EU AI Act also pushes for transparent and controllable deployments. Therefore, teams should adopt governance that combines technical controls with policy and oversight.
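Auditable logs are often made tamper-evident with hash chaining: each entry stores a hash over its own content plus the previous entry's hash, so editing any past entry breaks verification. The `AuditLog` class below is a minimal, hypothetical sketch. It detects edits but does not prevent someone from rewriting the entire chain, so the latest hash should also be stored somewhere the log writer cannot modify.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only log where each entry commits to the previous entry's hash."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, operator: str, action: str, detail: str) -> None:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        body = {"ts": datetime.now(timezone.utc).isoformat(), "operator": operator,
                "action": action, "detail": detail, "prev": prev}
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append("op-1", "search", "person near gate 3 after 22:00")
log.append("op-2", "export", "clip cam-07 23:14-23:16")
print(log.verify())
```

Logging every search and export, not just alarms, is what lets an oversight body check later who looked at which footage and why.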

Looking ahead, research will focus on responsible scaling. Models must handle ever-growing volumes of data while preserving individual rights. Techniques like federated learning and anonymized metadata can help. Also, industry best practices will include explainability, bias testing, and careful dataset curation. For practical detection features that can be implemented responsibly, review our perimeter breach and unauthorized access examples at perimeter breach detection in airports and unauthorized access detection in airports.
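A first step toward anonymized metadata is data minimization plus keyed pseudonymization. The sketch below is a hypothetical example: it replaces a badge ID with an HMAC-SHA256 pseudonym, coarsens the timestamp to the hour, and drops every field it does not explicitly keep (such as a face crop). The record field names are assumptions. Note that pseudonymized data can still be re-linked by whoever holds the key, so under the GDPR it generally remains personal data and the key must be protected separately.

```python
import hashlib
import hmac
from datetime import datetime


def pseudonymize(identifier: str, key: bytes) -> str:
    """Stable, keyed pseudonym: same ID and key always give the same token."""
    return hmac.new(key, identifier.encode(), hashlib.sha256).hexdigest()[:16]


def minimize(record: dict, key: bytes) -> dict:
    """Keep only the fields needed for search; everything else is dropped."""
    ts: datetime = record["timestamp"]
    return {
        "subject": pseudonymize(record["badge_id"], key),
        "zone": record["zone"],
        "hour": ts.replace(minute=0, second=0, microsecond=0).isoformat(),
        "activity": record["activity"],
    }


key = b"site-secret-kept-outside-the-index"  # hypothetical key, stored separately
raw = {"badge_id": "B-1042", "zone": "gate 3", "activity": "loitering",
       "timestamp": datetime(2024, 5, 1, 23, 14, 5), "face_crop": b"..."}
print(minimize(raw, key))
```

Because the pseudonym is stable, analysts can still see that the same subject appears repeatedly, which supports pattern searches without exposing who that subject is.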

Finally, innovation will bring more automated workflows. Autonomy will expand cautiously, with human-in-the-loop controls and audit trails. Planned capabilities such as VP Agent Auto aim to enable autonomous responses for low-risk scenarios while preserving oversight. Overall, the future of video balances richer capabilities with stronger protections to deliver efficient, trusted, and responsible surveillance solutions.
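A human-in-the-loop policy can be expressed as an explicit routing rule: automate only alarm types on a low-risk allow-list that also meet a confidence bar, send everything else to an operator, and record every decision. The alarm types and the 0.9 threshold below are hypothetical, and this is not how VP Agent Auto is implemented.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "auto"
    OPERATOR = "operator"


@dataclass
class Alarm:
    alarm_type: str
    confidence: float


# Hypothetical allow-list of repeatable, low-risk alarm types.
LOW_RISK_TYPES = {"camera_obstructed", "door_held_open"}


def route(alarm: Alarm, audit: list[tuple[str, float, str]],
          min_confidence: float = 0.9) -> Route:
    """Automate only confident, low-risk alarms; everything else goes to a human."""
    automatable = alarm.alarm_type in LOW_RISK_TYPES and alarm.confidence >= min_confidence
    decision = Route.AUTO if automatable else Route.OPERATOR
    audit.append((alarm.alarm_type, alarm.confidence, decision.value))  # every decision is logged
    return decision


audit_trail: list[tuple[str, float, str]] = []
print(route(Alarm("door_held_open", 0.95), audit_trail))
print(route(Alarm("intrusion", 0.99), audit_trail))
```

Keeping the allow-list short and explicit makes the scope of autonomy reviewable, and the audit trail shows afterwards which alarms were handled without a person.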

FAQ

What is semantic video search in CCTV?

Semantic video search interprets video content to return results based on meaning rather than raw pixels. It uses AI models and metadata to find events, behaviors, or scenarios described in natural language.

How does vector search help find relevant video?

Vector search converts visual and textual concepts into numeric vectors and stores them in a vector database. Then similarity search retrieves the closest matches, enabling rapid retrieval of relevant video content.

Can semantic systems work in real-time?

Yes. Edge compute and optimized pipelines enable real-time video analysis and low-latency alerts. Systems can run lightweight inference on cameras and richer models on local servers for rapid response.

Are there privacy risks with semantic search?

Yes. Semantic capabilities can increase the risk of misuse if not governed properly. Implementing access controls, on-prem deployments, and audit logs reduces privacy exposure.

What datasets do models need?

Models benefit from diverse, annotated video datasets that reflect the deployment environment. Task-specific fine-tuning on local video datasets improves accuracy and reduces false positives.

How do large language models improve queries?

Large language models translate natural language descriptions into semantic vectors that align with video embeddings. This mapping makes searches conversational and more precise.

Can multimodal fusion improve detection?

Yes. Combining video with audio, sensors, or textual logs gives richer context and higher confidence in alerts. Multimodal models reduce ambiguity and enhance verification.

What is a vector database and why use it?

A vector database stores high-dimensional vectors and supports nearest-neighbor search for similarity. It enables fast retrieval and scales to large video datasets efficiently.

How does metadata help investigations?

Metadata annotates who, what, when, and where across video segments, which speeds forensic review. It supports indexing and allows operators to search across cameras and timelines effectively.

Is autonomous operation possible for CCTV systems?

Autonomous operation is possible for low-risk, repeatable tasks with human oversight and audit trails. Systems can automate routine workflows while keeping escalation and review procedures in place.