vision language model summarises hours of footage into concise text with generative AI

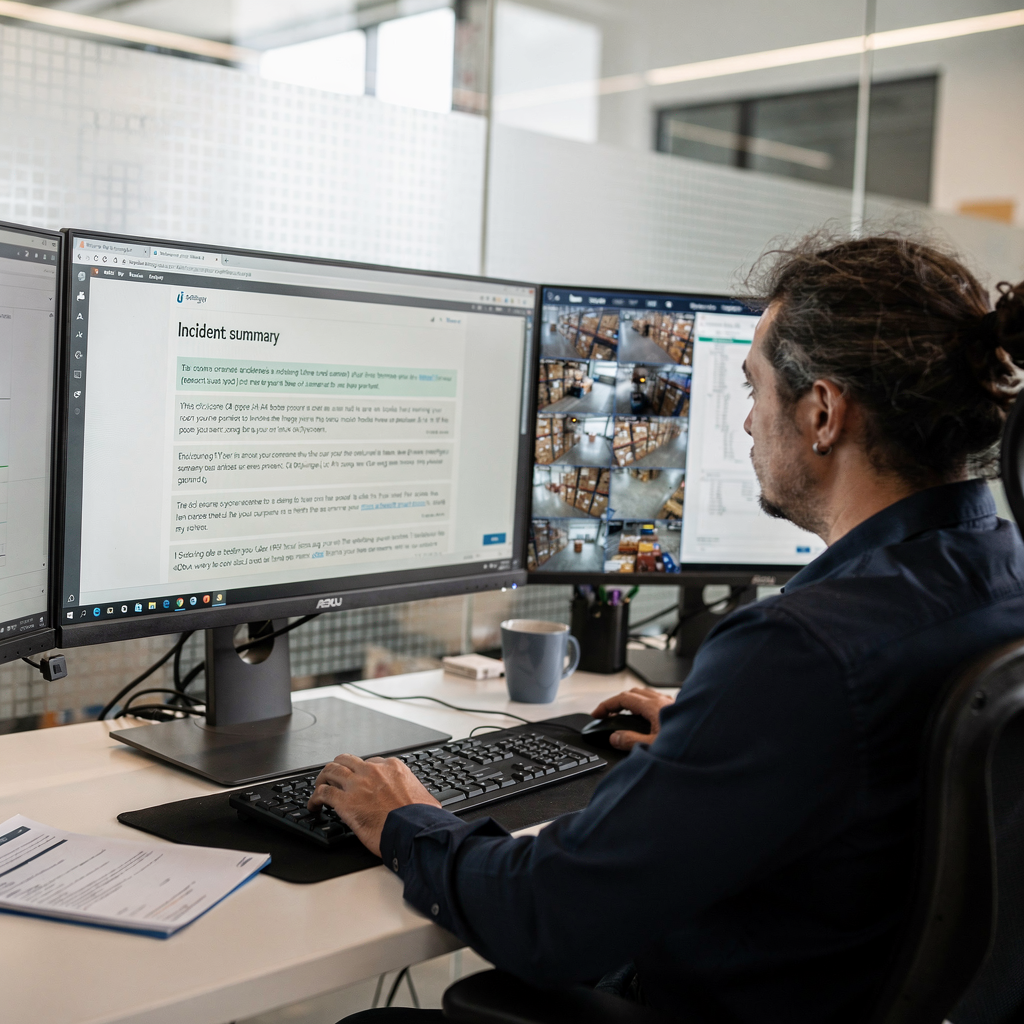

Vision language model technology turns long video timelines into readable incident narratives, and this shift matters for real teams. These systems combine image and language processing to produce human-like descriptions of what the camera captured. For example, advanced models can generate text that explains the actions, objects, and context in minutes or hours of footage. As a result, operators no longer need to scrub through endless video; instead, they can read short reports, search in plain language, and focus on response.

Today’s VLMs pair visual encoders with large language models, and they expand what surveillance platforms can do. Research shows that leading models excel at perception tasks while still improving on reasoning benchmarks (high accuracy results), and a comprehensive review highlights the multimodal strengths that enable image captioning, visual question answering, and summarization (survey of VLM approaches). Integrating a VLM into XProtect therefore targets a major bottleneck: manual review.

In practice, the new video summarization tool converts hours of camera footage into concise incident summaries. Operators can submit a short video clip and receive an executive-style paragraph that states what happened, who was involved, where, and when. This capability supports forensic workflows, and it speeds investigations by letting people search video like a report rather than a set of files.
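
Neither Milestone nor visionplatform.ai publishes the exact request format here, so the following is only a minimal sketch of how a clip-plus-prompt request might be shaped. `ClipRequest` and `build_summary_prompt` are hypothetical names; the prompt simply asks the model for the what/who/where/when structure described above.

```python
from dataclasses import dataclass


# Hypothetical request shape: these field names are illustrative,
# not a documented Milestone or visionplatform.ai schema.
@dataclass
class ClipRequest:
    camera_name: str
    start_iso: str  # clip start, ISO 8601
    end_iso: str    # clip end, ISO 8601
    focus: str      # operator's free-text focus, e.g. "vehicles at the gate"


def build_summary_prompt(req: ClipRequest) -> str:
    """Compose a prompt asking a VLM for an executive-style incident summary."""
    return (
        f"You are reviewing footage from camera '{req.camera_name}' "
        f"between {req.start_iso} and {req.end_iso}.\n"
        f"Focus: {req.focus}.\n"
        "Write one short paragraph that states WHAT happened, WHO was involved "
        "(describe people or vehicles, do not guess identities), WHERE in the scene, "
        "and WHEN (timestamps relative to the clip start). "
        "If nothing relevant happens, say so explicitly."
    )
```

Asking explicitly for "nothing relevant" as a valid answer is a design choice meant to discourage the model from inventing events in quiet clips.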

visionplatform.ai uses on-prem VLMs so customers keep control of video and models. In addition, our VP Agent Suite turns video detections into searchable descriptions, and it pairs VLM output with agent reasoning to suggest actions. This reduces time per alarm and helps teams scale monitoring without moving raw video to the cloud. Early reports show video summarization could reduce operator time spent on manual review by roughly 30%, and this aligns with industry evidence that AI speeds incident detection (Milestone case metrics).

milestone systems offers VLM as a service to extend AI capabilities to custom workflows

Milestone Systems provides modular services that let integrators add vision-language features to existing deployments. Milestone has introduced both cloud and on-prem options and presents them as ways to deliver scalable intelligence. For example, Milestone XProtect AWS Professional Services show how XProtect can run on cloud infrastructure with added AI capabilities (AWS listing). Milestone Systems describes itself as a world leader in data-driven video, and its platform roadmap includes new multimodal services.

Milestone offers both a language model as a service and VLM as a service, and both extend what developers can do with XProtect. Integration points include APIs and SDKs that expose VLM outputs to workflows, dashboards, and incident systems. In the XProtect Smart Client, users benefit from clickable summaries, and developers can build an AI-powered plug-in for XProtect that surfaces VLM text directly in the client. There, teams can read incident summaries, jump to the relevant snippets, and export reports.

Milestone’s new video summarization tool for XProtect® analyzes camera footage and describes what is important. The Milestone vision language model can be configured to receive a snippet of video together with a prompt describing the desired output; the model then generates a text summary and a short timeline of key frames. This workflow supports both investigations and daily monitoring, and organizations can enable it inside existing installations through a plug-in for the XProtect Smart Client. Milestone Systems’ new video summarization combines a high-accuracy vision language model with operational connectors, and it offers partners and service providers API access to production-ready video intelligence.
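
How the timeline of key frames is assembled is not documented here. One simple way to picture it is to collapse consecutive per-frame captions into time segments. The sketch assumes the VLM has already produced one caption per sampled frame; `build_timeline` and its input format are hypothetical.

```python
from typing import List, Tuple

Caption = Tuple[float, str]         # (seconds from clip start, caption text)
Segment = Tuple[float, float, str]  # (start s, end s, caption text)


def build_timeline(captions: List[Caption]) -> List[Segment]:
    """Collapse consecutive identical per-frame captions into time segments."""
    timeline: List[Segment] = []
    for t, text in sorted(captions):
        if timeline and timeline[-1][2] == text:
            start = timeline[-1][0]
            timeline[-1] = (start, t, text)  # extend the current segment
        else:
            timeline.append((t, t, text))    # start a new segment
    return timeline


def format_timeline(timeline: List[Segment]) -> str:
    """Render one line per segment, e.g. '    2.0s -     3.0s  car enters'."""
    return "\n".join(f"{s:7.1f}s - {e:7.1f}s  {text}" for s, e, text in timeline)
```

Exact string matching is the weakest part of this sketch: real captions vary in wording from frame to frame, so a production system would more likely group frames by semantic similarity.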

For customers who prefer on-prem operation, visionplatform.ai complements these services with on-prem VP Agent components that keep video inside the customer’s environment. visionplatform.ai also keeps model control and audit logs with the customer, which helps address compliance requirements such as the EU AI Act. Finally, Milestone Systems emphasizes AI in its communications: “Our award-winning XProtect software harnesses the power of AI and vision-language models to deliver unparalleled situational awareness and operational efficiency to our customers worldwide.” (Milestone statement).

AI vision within minutes?

With our no-code platform you can just focus on your data, we’ll do the rest

video management professionals face high manual workload, AI can cut review time by 30%

Control rooms report video overload and time-consuming manual review as daily realities. Operators juggle alarms, logs, and procedures, which slows decision-making under pressure. Industry data and deployment reports indicate that AI-driven video summarization can cut operator review time by roughly 30% (Milestone case studies). Adding concise summaries and natural language search therefore reshapes daily workloads.
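
To make the 30% figure concrete, here is a worked example that assumes a reviewer spends 40 hours per week on manual review. That workload is an illustrative number, not one taken from the case studies:

$$
T_{\text{after}} = T_{\text{before}}\,(1 - r) = 40\ \text{h} \times (1 - 0.30) = 28\ \text{h}
$$

Under that assumption the reduction frees about 12 hours per week per reviewer. Actual savings depend on how much of the workload is review rather than response.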

AI-driven summarization condenses long recordings and flags suspicious sequences for immediate review. Consequently, operators see fewer false positives and spend more time on verified incidents. For example, Milestone has reported up to a 40% reduction in false alarms when AI and contextual verification are in place (Milestone false alarm reduction). Academic benchmarks also show VLMs exceeding 85% accuracy on complex visual perception tasks, which supports reliable detection at scale (research results).

visionplatform.ai focuses on turning detections into decisions. For example, VP Agent Search lets teams run forensic queries like “person loitering near gate after hours” across recorded video and returns human-readable results. Additionally, VP Agent Reasoning correlates camera events with access control, procedures, and historical context to explain whether an alarm is valid. This approach lowers operator cognitive load and reduces steps per incident.
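
VP Agent Search’s internal method is not described here, so the following is only a stand-in to illustrate the idea of querying VLM text instead of raw video. It scores stored descriptions by plain word overlap with the query and applies an optional time-of-day window that can wrap past midnight (for "after hours"). A real system would more likely use text embeddings. `Description` and `search` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import List, Optional, Set, Tuple

PUNCT = ".,;:!?\"'"


@dataclass
class Description:
    camera: str
    timestamp: datetime
    text: str  # VLM-generated description of a short segment


def _tokens(s: str) -> Set[str]:
    """Lowercase words with surrounding punctuation removed."""
    return {w.strip(PUNCT).lower() for w in s.split() if w.strip(PUNCT)}


def search(descriptions: List[Description], query: str,
           window: Optional[Tuple[time, time]] = None,
           min_overlap: float = 0.5) -> List[Description]:
    """Return descriptions containing at least `min_overlap` of the query words,
    best matches first, optionally limited to a time-of-day window."""
    q = _tokens(query)
    hits = []
    for d in descriptions:
        if window is not None:
            start, end = window
            t = d.timestamp.time()
            # A window like 22:00-06:00 wraps past midnight.
            inside = (start <= t < end) if start < end else (t >= start or t < end)
            if not inside:
                continue
        overlap = len(q & _tokens(d.text)) / len(q) if q else 0.0
        if overlap >= min_overlap:
            hits.append((overlap, d))
    hits.sort(key=lambda h: h[0], reverse=True)
    return [d for _, d in hits]
```

For example, `search(descs, "person loitering near gate", window=(time(22, 0), time(6, 0)))` keeps only night-time descriptions that share most of the query’s words.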

Because video systems capture vast amounts of footage, teams need automated triage. Systems that combine real-time VLM descriptions with agent actions can close false alarms, create pre-filled reports, and notify responders. In short, adopting advanced video intelligence and on-prem VLMs gives control rooms the tools to scale monitoring with the same staff, and it creates a clear path from detection to action.

vision language model in XProtect Smart Client specialises in traffic analysis

One practical VLM deployment focuses on traffic. Specifically, a model specialized for real-world traffic video can identify collisions, wrong-way movements, and congestion patterns. The model can also be fine-tuned on local camera angles so it recognizes lane markings, vehicle types, and cyclists in different weather. The result is a summarization tool for XProtect video that lists key events, timecodes, and short textual context for each incident.

Traffic workflows benefit from structured summaries. For example, a summarization tool for XProtect allows users to submit a short video clip along with a prompt describing the desired focus, and the model returns an incident list with timestamps. This workflow supports law enforcement and city planners who need rapid evidence extraction and trend analysis. Within XProtect® video management, the tool helps analysts review peak-hour events and supports traffic management decisions.

The Milestone vision language model used in these flows is specialized for real-world traffic video and fine-tuned on responsibly curated datasets. In addition, video summarization for XProtect lets users extract snippets that show violations or near-misses, and teams can export them for follow-up. For example, city planners can use aggregated summaries to adjust signal timings, and police can use the same summaries to prioritize investigations. visionplatform.ai integrates with XProtect so incident summaries appear inside the XProtect Smart Client and link back to the full recorded segment.
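
Aggregating summaries for planning can be as simple as counting events per hour of day. The sketch below assumes a hypothetical line format for each summarized incident, `timestamp | event_type | description`, which is not a documented output format of either product.

```python
from collections import Counter
from datetime import datetime
from typing import Iterable, Tuple


def parse_line(line: str) -> Tuple[datetime, str]:
    """Parse an assumed 'ISO-timestamp | event_type | description' summary line."""
    ts, kind, _desc = (part.strip() for part in line.split("|", 2))
    return datetime.fromisoformat(ts), kind.lower()


def events_per_hour(events: Iterable[Tuple[datetime, str]]) -> Counter:
    """Count (hour_of_day, event_type) pairs across many days of summaries."""
    return Counter((ts.hour, kind) for ts, kind in events)


lines = [
    "2024-05-01T08:05:00 | congestion | queue back to junction",
    "2024-05-02T08:40:00 | Congestion | slow traffic on approach",
    "2024-05-01T17:15:00 | near-miss | cyclist and van at crossing",
]
counts = events_per_hour(parse_line(l) for l in lines)
peak, n = counts.most_common(1)[0]  # busiest (hour, event_type) pair
```

Here `peak` is `(8, "congestion")` with two events, which is the kind of pattern a planner might compare against signal timings.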

Furthermore, the system can enrich events with ANPR/LPR outputs and vehicle classifications. For context, see our vehicle detection and classification work for airports which demonstrates similar real-time outputs over moving vehicles (vehicle detection and classification in airports). Also, teams that need forensic search can extend these summaries with full-text queries across time using VP Agent Search (forensic search in airports).
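
One way to enrich a VLM event with ANPR/LPR output is a time-window join. This sketch attaches the nearest plate read from the same camera within a tolerance. The record types, camera names, plates, and the 2-second default tolerance are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PlateRead:
    camera: str
    t: float  # seconds since epoch
    plate: str


@dataclass
class VlmEvent:
    camera: str
    t: float
    text: str
    plate: Optional[str] = None


def attach_plates(events: List[VlmEvent], reads: List[PlateRead],
                  tol_s: float = 2.0) -> List[VlmEvent]:
    """Attach the nearest same-camera plate read within +/- tol_s seconds.

    Events are updated in place and also returned for convenience.
    """
    for ev in events:
        candidates = [r for r in reads
                      if r.camera == ev.camera and abs(r.t - ev.t) <= tol_s]
        if candidates:
            ev.plate = min(candidates, key=lambda r: abs(r.t - ev.t)).plate
    return events
```

The linear scan keeps the example readable. For high-volume sites, sorting reads by time and using `bisect` would avoid comparing every event with every read.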

milestone systems reports up to 40% reduction in false alarms and 30% faster incident detection

Milestone Systems reports significant operational improvements when AI is applied to XProtect. For instance, Milestone Systems’ new video summarization tool and integrated AI reportedly reduced false alarms by up to 40% and accelerated incident detection by around 30% in some deployments (Milestone metrics). These figures also align with field feedback that automation reduces time-to-action and increases situational awareness.

These gains come from combining VLM outputs with rule engines and contextual verification. For example, an existing XProtect event can be enriched with a VLM description, and an AI agent can then check whether a badge read or door sensor in a complementary system corroborates the event. As a result, the system avoids sending raw alerts that lack context, and operators receive explained situations with recommended actions.
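
The corroboration step can be pictured as a small rule over nearby access-control events. The event labels (`badge_granted`, `door_open`), the 10-second window, and the three verdicts are hypothetical. They are not the actual logic of VP Agent Reasoning or any Milestone rule engine.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AccessEvent:
    door_id: str
    t: float   # seconds since epoch
    kind: str  # hypothetical labels: "badge_granted", "door_open", ...


def verify_door_alarm(door_id: str, alarm_t: float,
                      access_log: List[AccessEvent],
                      window_s: float = 10.0) -> str:
    """Classify a camera alarm at a door using access events near the same time."""
    nearby = [e for e in access_log
              if e.door_id == door_id and abs(e.t - alarm_t) <= window_s]
    if any(e.kind == "badge_granted" for e in nearby):
        return "likely_authorized"  # a valid badge read explains the activity
    if any(e.kind == "door_open" for e in nearby):
        return "escalate"           # door opened without a badge read
    return "needs_review"           # no corroborating signal either way
```

Returning "needs_review" rather than silently closing the alarm keeps a human in the loop when the context is missing.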

Vision-language integrations also improve reporting and compliance. Specifically, production-ready video intelligence built into workflows reduces the manual burden of writing incident summaries. The platform can create structured incident records, pre-fill investigation fields, and export evidence packages. For customers working under regulatory constraints, keeping video and models on-prem or in controlled cloud tenancy matters. visionplatform.ai’s on-prem approach supports that need, and it complements Milestone’s cloud options for customers that prefer hosted services.
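
A pre-filled incident record might look like the following sketch: the VLM summary and evidence references are filled in automatically, while operator fields stay blank and the status starts as a draft for human review. The field names and JSON shape are hypothetical, not a documented export format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class IncidentRecord:
    incident_id: str
    camera: str
    start_iso: str
    end_iso: str
    summary: str                      # VLM text, to be checked by the operator
    evidence_clips: List[str] = field(default_factory=list)
    operator_notes: str = ""          # left blank for the human to complete
    status: str = "draft"             # becomes e.g. "closed" after review


def to_json(record: IncidentRecord) -> str:
    """Serialize the record for export to an incident or case system."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)
```

Starting every record as a draft reflects the point above: the model pre-fills fields, and a person signs off on them.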

Finally, adding advanced video intelligence into XProtect supports broader operational goals. For example, airport teams that use people-counting, ANPR, and intrusion detection find VLM summaries help correlate operational events with security incidents (people counting). Also, by combining visual descriptions with metadata, teams can reduce operator load and focus human attention where it matters most.

Future video management will rely on advanced vision language model architectures

Research in VLM architectures continues to evolve, and benchmarks like MaCBench push models toward stronger scientific reasoning and richer multimodal understanding (MaCBench benchmark). The ICLR 2026 review of vision-language-action research also highlights trends in diffusion models and reasoning that will benefit surveillance and operational AI (ICLR VLA analysis). Future XProtect integrations will therefore likely use advanced vision language model architectures that balance speed and accuracy.

Milestone has introduced initiatives that combine cloud and edge options, and the Hafnia vision language model concept shows how vendors plan to offer flexible deployments. Concepts like VLM as a service and language model as a service will let integrators pick hosted or on-prem models depending on compliance needs. For customers that need full on-site control, visionplatform.ai offers on-prem VP Agent capabilities that keep raw video local and still deliver production-ready video intelligence.

Looking ahead, advanced video AI platforms will support richer agent workflows. For example, agents will reason over timelines, access control logs, and SOPs to recommend actions, turning detections into decisions and video into actionable outcomes. Developers will also be able to add advanced video intelligence features to XProtect via APIs and plug-ins, and Milestone’s ecosystem will make that integration simpler.

Finally, as model accuracy improves, adoption accelerates. Early adopters already see measurable benefits, and as benchmarks and tooling mature, XProtect video management software will embed multimodal reasoning across operations. In short, combining VLMs with robust VMS architecture will define the next generation of video surveillance systems and operational AI.

FAQ

What is a vision language model and how does it work with XProtect?

A vision language model (VLM) processes visual inputs and generates natural language outputs that describe what appears in video. In XProtect, a VLM can produce summaries, captions, and searchable descriptions that appear in the XProtect Smart Client or via APIs.

Can VLM summaries really replace manual video review?

VLM summaries reduce the amount of video an analyst must watch by highlighting key moments and creating concise reports. Also, these summaries accelerate triage and allow operators to focus on verified incidents rather than raw footage.

Does Milestone Systems offer VLMs as part of XProtect?

Milestone Systems has introduced VLM capabilities and related services for XProtect, and the company reports measurable reductions in false alarms and faster detection in deployments (Milestone case metrics). Also, Milestone provides cloud and integration options for partners and integrators.

How does visionplatform.ai complement Milestone XProtect?

visionplatform.ai provides on-prem VLMs, agent reasoning, and natural-language forensic search that integrate tightly with XProtect. In addition, our VP Agent Suite turns detections into context and recommended actions while keeping video and models under customer control.

What performance improvements can organizations expect?

Field reports indicate up to 40% fewer false alarms and around 30% faster incident detection when AI and VLM summaries are applied. Also, academic studies show strong perception accuracy in modern VLMs (research).

Are VLMs suitable for traffic management?

Yes. Models specialized for real-world traffic video can detect collisions, congestion, and violations, and they generate context-aware summaries to support police and city planning. Also, these summaries help optimize signal timing and resource allocation.

Can VLMs run on-prem for compliance-sensitive sites?

They can. visionplatform.ai and some Milestone integrations support on-prem deployment to maintain data sovereignty, support compliance with the EU AI Act, and avoid sending raw video to external clouds. This preserves audit trails and control.

How do I integrate VLM summaries into existing XProtect workflows?

Integrations typically use Milestone APIs, SDKs, or an AI-powered plug-in for XProtect to surface summaries inside the XProtect Smart Client. Developers can also call VLM services via REST APIs to retrieve summaries and link them to incidents.
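
As a rough illustration of the REST route, the sketch below builds and sends a JSON request with Python’s standard `urllib`. The endpoint URL and every payload field are hypothetical placeholders. Use whatever your VLM service actually documents, and add its authentication scheme.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the URL your VLM service documents.
VLM_ENDPOINT = "http://vlm.example.internal/v1/summaries"


def build_summary_request(camera_id: str, start_iso: str, end_iso: str,
                          prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for a clip summary."""
    body = json.dumps({
        "camera_id": camera_id,  # hypothetical payload fields
        "start": start_iso,
        "end": end_iso,
        "prompt": prompt,
    }).encode("utf-8")
    return urllib.request.Request(
        VLM_ENDPOINT, data=body, method="POST",
        headers={"Content-Type": "application/json"},
    )


def fetch_summary(req: urllib.request.Request, timeout_s: float = 30.0) -> dict:
    """Send the request and decode a JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Keeping request construction separate from sending makes it easy to log or test the payload before it leaves the site. The returned summary could then be stored alongside an incident record.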

What about model training and dataset requirements?

High-quality VLMs need diverse, annotated video data and careful fine-tuning for site-specific camera views; models specialized for real-world traffic video and fine-tuned on responsibly curated datasets perform best. Also, vendors may offer pre-trained models and tools to refine them with local data.

Where can I learn more about forensic search and vehicle detection integration?

See our resources on forensic search in airports for natural-language video search and our vehicle detection and classification page to learn how VLM summaries combine with metadata for investigations (forensic search, vehicle detection). Also, our people-counting page shows how summaries can support operational analytics (people counting).