Understanding the Role of Video in Multimodal AI

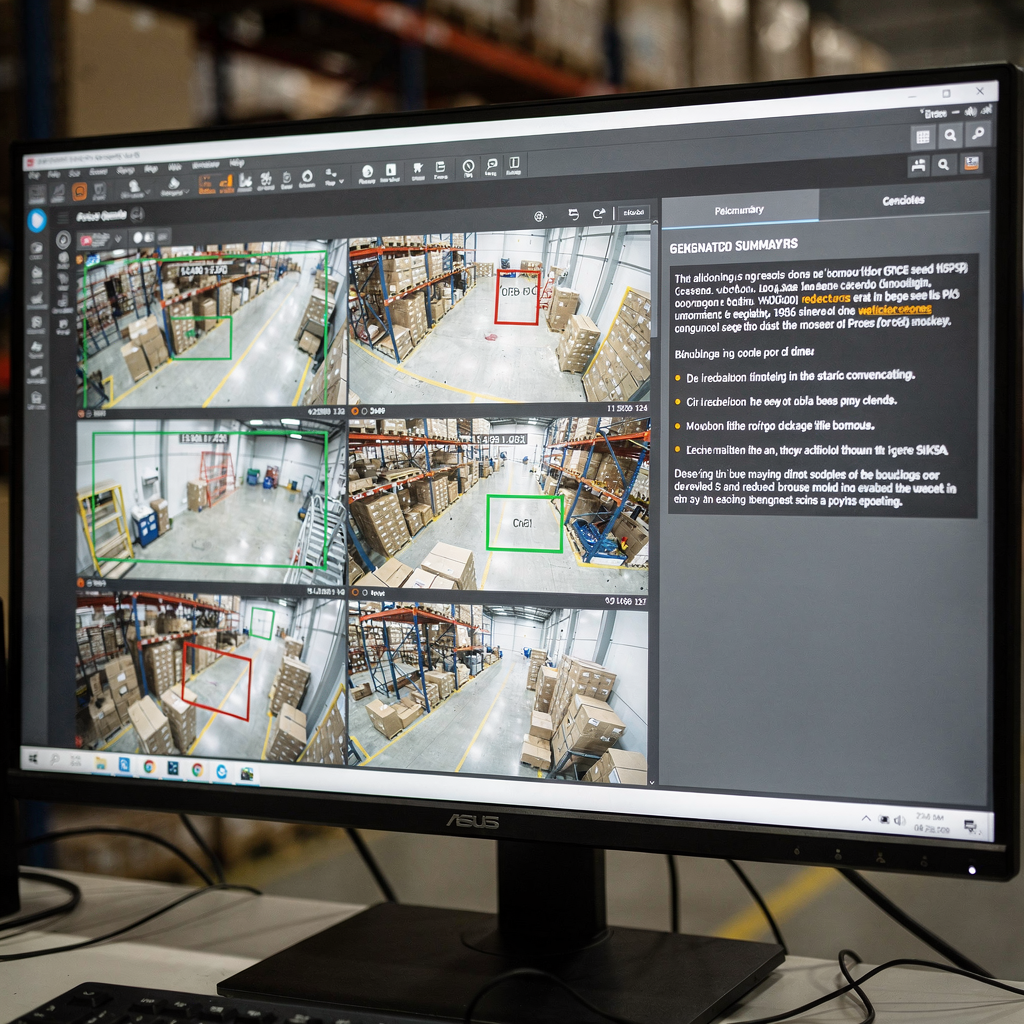

Video is the richest sensor for many real-world problems because it carries both spatial and temporal signals. Pixels, motion, and often audio arrive as long sequences of frames that require careful handling, so models must capture spatial detail and temporal dynamics while still processing those sequences efficiently. A control room operator, for example, needs a clear, concise summary of hours of footage. Modern systems meet that need by converting streams into searchable text and captions, and then into actions, which lets operators move from raw detections to decision support. On top of this, visionplatform.ai adds a reasoning layer that links live analytics with human language to speed decisions and reduce manual steps.

Capturing temporal dependencies, however, remains a key challenge. Scene segmentation, event detection, and narrative inference each require different techniques. Shot boundary detection usually runs before higher-level analysis, and event detectors must then spot specific events such as loitering or intrusion. For more context on how integrated search supports operators in real deployments, see our page on forensic search in airports. Finally, models must map detected events to natural-language answers; a VP Agent, for example, can explain why an alarm fired and what to do next.
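
To make the detect-then-explain step concrete, here is a minimal sketch of a rule-based loitering detector that turns timestamped track observations into a plain-language answer. It assumes an upstream tracker already reports whether each person is inside a monitored zone; the `Observation` type, the 60-second dwell threshold, and the answer template are hypothetical illustrations, not the VP Agent's actual logic.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    """One (hypothetical) tracker output: track ID, timestamp in seconds, and
    whether the tracked person is inside the monitored zone at that moment."""
    track_id: int
    t: float
    in_zone: bool

def _close_run(events, track_id, start, end, min_dwell_s):
    # Keep the run only if the continuous in-zone dwell time is long enough.
    if end - start >= min_dwell_s:
        events.append((track_id, start, end))

def loitering_events(observations, min_dwell_s=60.0):
    """Return (track_id, start_s, end_s) for every continuous in-zone run
    lasting at least min_dwell_s seconds (illustrative rule)."""
    events = []
    run_start, run_end = {}, {}  # per-track start and latest time of the open run
    for obs in sorted(observations, key=lambda o: (o.track_id, o.t)):
        if obs.in_zone:
            run_start.setdefault(obs.track_id, obs.t)
            run_end[obs.track_id] = obs.t
        elif obs.track_id in run_start:
            # Leaving the zone closes the current run.
            _close_run(events, obs.track_id, run_start.pop(obs.track_id),
                       run_end.pop(obs.track_id), min_dwell_s)
    # Close runs that are still open at the end of the observation window.
    for track_id in list(run_start):
        _close_run(events, track_id, run_start[track_id], run_end[track_id], min_dwell_s)
    return events

def explain(event):
    """Turn a detected event into a plain-language answer (template is illustrative)."""
    track_id, start, end = event
    return (f"Person {track_id} stayed in the restricted zone for {end - start:.0f} s "
            f"(t={start:.0f} s to t={end:.0f} s), exceeding the loitering threshold.")

obs = [Observation(7, t, True) for t in range(0, 91, 10)]
obs += [Observation(8, 0, True), Observation(8, 20, False)]
events = loitering_events(obs)
print(events)  # [(7, 0, 90)]
print(explain(events[0]))
```

Real systems would feed such structured events to a language model rather than a fixed template, but the separation of detection from explanation is the same.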

Generative approaches further enrich visual comprehension. Diffusion and transformer methods, for instance, let a model imagine plausible intermediate frames or infer causal links, and generative AI can produce multi-sentence captions and Trees of Captions for complex scenes. Recent surveys of large-scale evaluation, as summarized by CVPR workshops, show rapid improvements in image and video understanding. Combining visual encoders with large language decoders has therefore become an effective strategy, and it helps systems deliver concise summaries for operators and automated agents in the control room.

Exploring Model Architectures for Summarization

Visual encoders form the input stage. Convolutional neural networks still work well for many tasks, while Vision Transformers (ViT) and video-specific transformers process spatio-temporal tokens extracted from frames and short clips. Video transformers capture motion across a sequence and the temporal dynamics that matter, and ViT variants with sparse attention help scale to long video sequences. NVIDIA hardware often accelerates these pipelines on edge devices or GPU servers. You can learn about typical people detection patterns and deployments at real sites on our people detection in airports page. Many teams also fine-tune the model on site data to adapt it to domain specifics.
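
The token count of a video transformer grows quickly with clip length, which is why sparse attention matters. A minimal sketch of that arithmetic, assuming ViViT-style non-overlapping tubelets of 2 frames by 16 by 16 pixels (a common but not universal choice) and dense self-attention:

```python
def num_tubelet_tokens(frames, height, width, t=2, patch=16):
    """Tokens produced by cutting a clip into non-overlapping t x patch x patch
    tubelets (ViViT-style tubelet embedding)."""
    if frames % t or height % patch or width % patch:
        raise ValueError("clip dimensions must be divisible by the tubelet size")
    return (frames // t) * (height // patch) * (width // patch)

def full_attention_pairs(n_tokens):
    """Dense self-attention scores every token against every token."""
    return n_tokens * n_tokens

image_tokens = num_tubelet_tokens(1, 224, 224, t=1)  # a single 224x224 frame
clip_tokens = num_tubelet_tokens(32, 224, 224)       # a 32-frame clip
print(image_tokens, clip_tokens)                      # 196 3136
print(full_attention_pairs(clip_tokens))              # 9834496
```

Going from one frame to a 32-frame clip multiplies the token count by 16 and the dense attention cost by 256, so long-video models typically rely on sparse attention, token pruning, or frame sampling.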

Language decoders then turn visual features into text. Transformer language models such as GPT (decoder-only) and T5 or BART (encoder-decoder) produce fluent descriptions, and a large language model can convert structured visual cues into coherent paragraphs and captions. However, these models need grounding in the visual evidence to avoid hallucinations, so fusion strategies are critical. Cross-attention, co-training, and vision-language fusion layers let visual tokens influence text generation. Vision Language World Models, for example, build a structured world view that supports planning and narration, and they allow Trees of Captions to structure summaries, as shown in recent arXiv work.
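
Cross-attention is the most common of these fusion mechanisms: each text token forms a query and attends over the visual tokens, so the generated text is conditioned on what the encoder saw. The sketch below shows single-head scaled dot-product cross-attention in plain Python; it deliberately omits the learned query, key, and value projections and the multiple heads that real models use, and the toy vectors are made up.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(text_queries, visual_keys, visual_values):
    """Single-head scaled dot-product cross-attention: each text query attends
    over the visual tokens and returns a weighted mix of their values."""
    d = len(visual_keys[0])
    outputs, weights = [], []
    for q in text_queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in visual_keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append([sum(wj * v[i] for wj, v in zip(w, visual_values))
                        for i in range(len(visual_values[0]))])
    return outputs, weights

text_queries = [[5.0, 0.0]]               # one text token aligned with dimension 0
visual_keys = [[1.0, 0.0], [0.0, 1.0]]    # two visual tokens
visual_values = [[10.0, 0.0], [0.0, 10.0]]
out, attn = cross_attention(text_queries, visual_keys, visual_values)
print([round(w, 3) for w in attn[0]])     # most weight falls on the first visual token
print(out[0])
```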

Reasoning modules and generative techniques further improve output quality. Discrete diffusion approaches and sequence planning, for instance, help the model reason about causal chains and specific events. Integrating a vision language model with symbolic or retrieval modules can also improve factuality and traceability: a system can retrieve past clips to verify a current detection and then produce a human-readable answer. When architects unify encoders and decoders in this way, they create a framework that supports both summary generation and downstream tasks.
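
The retrieval step can be sketched as a nearest-neighbor lookup over clip embeddings. This minimal example assumes each archived clip already has an embedding vector from some visual encoder; the clip IDs and three-dimensional vectors are invented for illustration, and production systems would use an approximate nearest-neighbor index instead of a linear scan.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve_similar(query_emb, archive, k=2):
    """Return the k archived clips most similar to the query embedding,
    with their similarity scores rounded for display."""
    ranked = sorted(archive.items(),
                    key=lambda item: cosine(query_emb, item[1]), reverse=True)
    return [(clip_id, round(cosine(query_emb, emb), 3)) for clip_id, emb in ranked[:k]]

# Hypothetical archive: clip ID -> embedding.
archive = {
    "cam3_0912": [0.9, 0.1, 0.0],
    "cam3_1415": [0.1, 0.9, 0.2],
    "cam5_0801": [0.8, 0.2, 0.1],
}
current_detection = [1.0, 0.0, 0.0]
result = retrieve_similar(current_detection, archive)
print(result)
```

The retrieved clips (and their stored captions) can then be passed to the language model as evidence, which keeps the final answer traceable to specific footage.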

Techniques and Pipeline for Video Summarization

A summarization pipeline typically has three core stages: shot boundary detection, feature extraction that converts frames into visual tokens, and summary synthesis that produces condensed text or highlight reels. These stages may run online or offline, and each deployment balances latency against accuracy. Real-time systems prioritize efficient inference, while offline analyzers can use heavier transformers for better context, so designers must choose architectures and hardware carefully. In practice, visionplatform.ai supports on-prem processing to keep video and models within site boundaries, which improves compliance and control.
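
The first stage, shot boundary detection, is often illustrated with a classic histogram-difference method: a large change in the intensity distribution between consecutive frames suggests a cut. This is a minimal sketch, assuming 8-bit grayscale frames given as lists of rows, 8 histogram bins, and an arbitrary threshold of 0.5; learned detectors handle gradual transitions far better.

```python
def histogram(frame, bins=8, max_value=256):
    """Normalized intensity histogram of a grayscale frame (list of rows of 0..255)."""
    counts = [0] * bins
    n = 0
    for row in frame:
        for px in row:
            counts[px * bins // max_value] += 1
            n += 1
    return [c / n for c in counts]

def shot_boundaries(frames, threshold=0.5):
    """Indices i where frame i starts a new shot, using the L1 distance
    between consecutive histograms (the distance ranges from 0 to 2)."""
    cuts = []
    prev = histogram(frames[0])
    for i in range(1, len(frames)):
        cur = histogram(frames[i])
        if sum(abs(a - b) for a, b in zip(prev, cur)) > threshold:
            cuts.append(i)
        prev = cur
    return cuts

dark = [[20] * 4 for _ in range(4)]     # toy 4x4 dark frame
bright = [[220] * 4 for _ in range(4)]  # toy 4x4 bright frame
print(shot_boundaries([dark, dark, bright, bright, dark]))  # [2, 4]
```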

Prompt engineering guides context-aware summarization with generative decoders. A prompt that specifies the desired length, emphasis, or audience shapes the text the model outputs, and a prompt that describes the camera view or the task helps a large language model produce more useful captions and answers. Retrieval-augmented workflows go further by letting the system consult historical clips or procedure documents before answering; VP Agent Search, for example, uses natural-language queries to find past incidents and support rapid forensic work (see forensic search in airports). Zero-shot and few-shot prompts also enable rapid deployment to new tasks without heavy labeling.
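
A prompt that combines these ingredients might be assembled as below. This is a minimal sketch of one possible template: the function name, field wording, and example events are hypothetical, and the resulting string would be sent to whichever language model the deployment uses.

```python
def build_summary_prompt(camera_description, task, events, max_sentences=3,
                         audience="control room operator", context_docs=()):
    """Assemble a summarization prompt (hypothetical template) from a camera
    description, a task, optional retrieved documents, and detected events."""
    lines = [
        f"You are summarizing footage for a {audience}.",
        f"Camera: {camera_description}",
        f"Task: {task}",
        f"Write at most {max_sentences} sentences and cite event timestamps.",
    ]
    if context_docs:
        # Retrieved procedures or past incidents, as in a retrieval-augmented workflow.
        lines.append("Reference material:")
        lines.extend(f"- {doc}" for doc in context_docs)
    lines.append("Detected events:")
    lines.extend(f"- [{ts}] {desc}" for ts, desc in events)
    lines.append("Summary:")
    return "\n".join(lines)

prompt = build_summary_prompt(
    camera_description="fixed camera overlooking the loading dock",
    task="decide whether the after-hours access needs escalation",
    events=[("14:02:10", "person enters loading dock"),
            ("14:04:55", "person leaves with a box")],
    max_sentences=2,
    context_docs=["Procedure 12: after-hours dock access requires a badge scan."],
)
print(prompt)
```

Changing `max_sentences` or `audience` is the simplest form of the length and emphasis control described above.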

Real-time and offline processing present different trade-offs. Real-time systems require efficient encoders and sparse attention, and they may use approximate retrieval to speed decisions. Offline processing, by contrast, can afford more complex temporal reasoning and causal modeling, so pipelines often mix both modes. Integrating temporal modules and causal graphs helps the pipeline reason about sequences and about why events unfold, which improves its ability to generate concise summaries and clear recommendations for the operator or AI agent.
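
Sparse attention saves compute by scoring only a subset of token pairs. One simple variant is local (sliding-window) attention, where each token attends only to neighbors within a fixed distance. The sketch below counts scored pairs for dense versus local attention; the sequence length of 512 and window of 8 are arbitrary example values.

```python
def local_attention_mask(n_tokens, window):
    """mask[i][j] is True when token i may attend to token j, i.e. |i - j| <= window."""
    return [[abs(i - j) <= window for j in range(n_tokens)] for i in range(n_tokens)]

def attended_pairs(mask):
    """Number of query-key pairs the attention layer actually scores."""
    return sum(sum(row) for row in mask)

n, w = 512, 8
dense = n * n
local = attended_pairs(local_attention_mask(n, w))
print(dense, local)  # 262144 8632
```

The local count equals n(2w + 1) - w(w + 1), which grows linearly with sequence length instead of quadratically, at the cost of losing direct long-range interactions; offline pipelines can afford to recover those with heavier models.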

Dataset Resources and Annotation for Video Summarization

Public datasets remain essential for training and benchmarking. Standard choices include SumMe, TVSum, ActivityNet, and YouCook2, which provide different annotation formats such as highlight clips, temporal boundaries, and descriptive captions. The choice of annotation strongly affects downstream tasks: models trained on caption-rich data learn to produce multi-sentence descriptions, while highlight-based labels train models to pick salient clips. Synthetic data generation and augmentation can also mitigate data scarcity by expanding diversity.
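
Highlight-style annotations are often turned into a summary by selecting shots under a length budget, which is a 0/1 knapsack problem. The sketch below follows one common convention, a budget of 15% of the video length with each shot valued by its mean importance score, but the shot lengths and scores here are invented and specific benchmarks may define the protocol differently.

```python
def select_keyshots(shot_lengths, shot_scores, budget_percent=15):
    """0/1 knapsack: choose shots maximizing total importance while keeping the
    summary within budget_percent of the video's length (in frames)."""
    capacity = sum(shot_lengths) * budget_percent // 100  # integer math avoids float rounding
    n = len(shot_lengths)
    # best[i][c] = best total score using the first i shots within capacity c
    best = [[0.0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        length, score = shot_lengths[i - 1], shot_scores[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]
            if length <= c and best[i - 1][c - length] + score > best[i][c]:
                best[i][c] = best[i - 1][c - length] + score
    # Backtrack: a shot was taken wherever the table value changed.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= shot_lengths[i - 1]
    return sorted(chosen)

lengths = [30, 50, 20, 40, 60, 100, 100]        # frames per shot (400 total)
scores = [0.2, 0.9, 0.7, 0.4, 0.8, 0.3, 0.5]    # mean importance per shot
print(select_keyshots(lengths, scores))          # [2, 3]
```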

Dataset bias, however, is a major concern, and domain shifts between public datasets and operational sites reduce model performance. On-site adaptation, fine-tuning the model with local labels, is therefore common. visionplatform.ai, for instance, supports custom model workflows so teams can improve a pre-trained model with their own classes, which reduces false positives and makes the model more reliable in the field. Annotation formats also vary: captions provide dense semantic labels, while temporal boundaries mark start and end points for specific events.

Dataset diversity also matters for evaluation. Including aerial, indoor, and vehicle-mounted footage, for example, yields more robust visual features, and synthetic clips can help cover rare events. Academic surveys that overview the field provide broader context on dataset trends and benchmark practices. In practice, a mixed strategy that combines public datasets, synthetic augmentation, and site-specific labels often yields the best operational performance.

Benchmark Metrics and Evaluation for Video Summarization

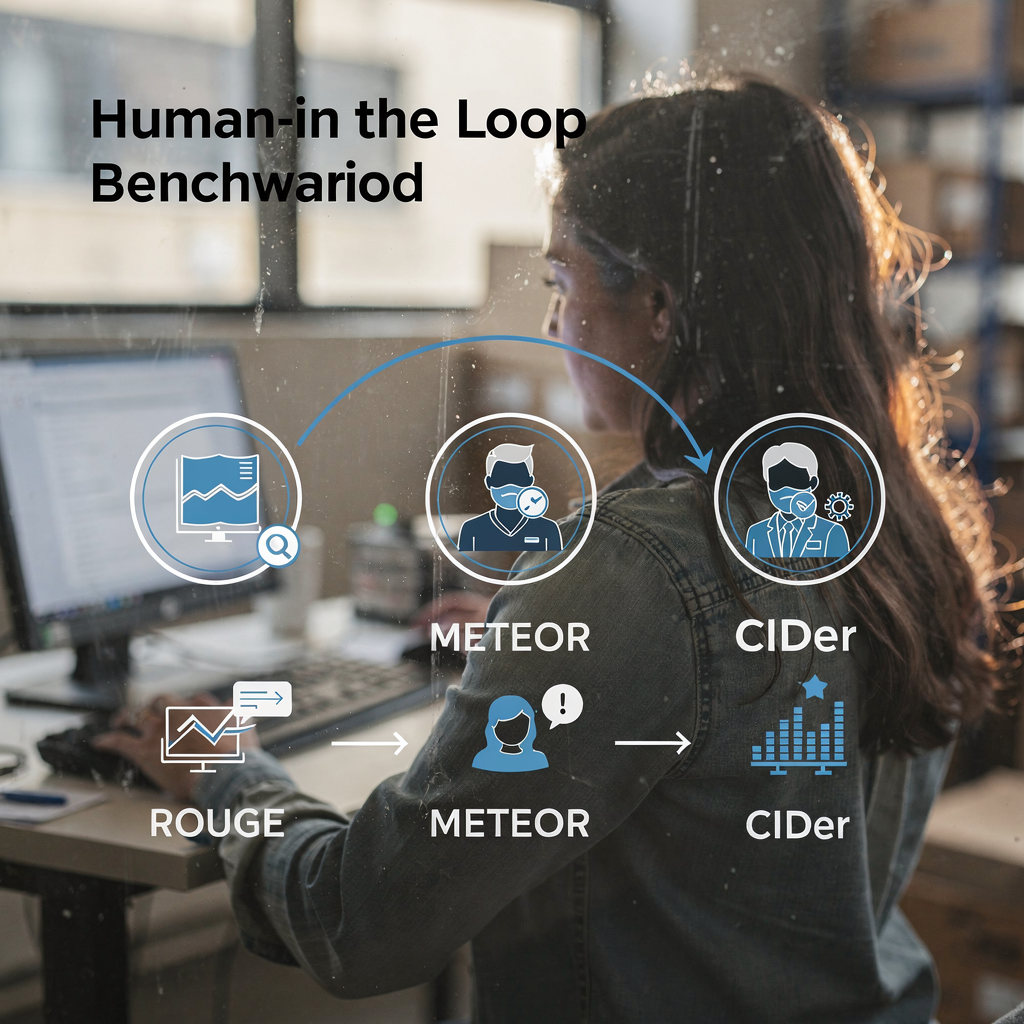

Evaluation uses both automated metrics and human judgments. Standard automated metrics for generated text include ROUGE, METEOR, and CIDEr, while F1 scores and recall measure clip selection quality. Benchmark suites such as VideoSumBench, VATEX, and YouSumm organize tasks and hold out test sets. Recent surveys list over forty vision-language benchmarks spanning image-text and video-text tasks and track progress across many axes, according to CVPR workshop analysis. ICLR summaries of Vision-Language-Action work also highlight major gains on temporal tasks, as discussed in community write-ups.
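
For clip selection, the F1 score compares the frames a model selected with the frames a human selected: precision is the overlap divided by the predicted summary length, and recall is the overlap divided by the reference length. This minimal sketch handles one prediction against one reference; benchmarks with several annotators also aggregate across them (for example, by taking the maximum or the mean), which this sketch omits.

```python
def keyshot_f1(predicted, ground_truth):
    """F1 between two binary per-frame selections (1 = frame is in the summary)."""
    if len(predicted) != len(ground_truth):
        raise ValueError("selections must cover the same frames")
    overlap = sum(1 for p, g in zip(predicted, ground_truth) if p and g)
    if overlap == 0:
        return 0.0
    precision = overlap / sum(predicted)
    recall = overlap / sum(ground_truth)
    return 2 * precision * recall / (precision + recall)

pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
gt   = [0, 1, 1, 1, 0, 0, 0, 0, 0, 0]
print(round(keyshot_f1(pred, gt), 3))  # 0.667
```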

Metrics do not capture everything, however. Automated scores can miss readability and operational usefulness, so human-aligned evaluation remains critical. Control room operators, for example, should test whether a summary actually helps them verify an alarm and decide on an action. In practice, VP Agent Reasoning evaluates explanations across multiple sources and then delivers a ranked answer. Systems that support retrieval and provenance also help evaluators judge factuality.

Performance trends show steady advances, and some VLMs now reach high accuracy on short event classification and captioning benchmarks. Large-scale surveys document that state-of-the-art models often exceed 80% on specific event identification tasks, as reported in community reviews. Still, the limitations of current benchmarks motivate new human-in-the-loop frameworks and more diverse testbeds; surveys and critiques hosted on IEEE Xplore and arXiv call for better alignment and evaluation practices. The community therefore continues to update metrics and datasets to reflect operational needs.

Task-Oriented Navigation in Video Summarization

Start by defining user tasks clearly, since retrieval, browsing, and interactive exploration each need different summarization styles. Retrieval queries demand concise answers and precise timestamps: video search and summarization must return the most relevant clip along with a short caption or answer. Natural-language search over annotated captions and event metadata can improve retrieval performance, as VP Agent Search demonstrates (see forensic search in airports). Browsing workflows, by contrast, emphasize short highlight reels and skimmable text.
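
A retrieval query over captions needs to return a timestamp, not just a match. The sketch below ranks timestamped captions by how many query terms they contain; it is a deliberately simple keyword-overlap stand-in (real systems would more likely use text embeddings or BM25), not VP Agent Search's implementation, and the captions are made up.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def search_captions(query, captions, top_k=1):
    """Rank (timestamp, caption) pairs by how many query terms each caption contains.
    Ties keep chronological order because Python's sort is stable."""
    terms = Counter(tokenize(query))
    scored = []
    for ts, caption in captions:
        words = set(tokenize(caption))
        score = sum(count for term, count in terms.items() if term in words)
        if score:
            scored.append((score, ts, caption))
    scored.sort(key=lambda item: -item[0])
    return [(ts, caption) for _, ts, caption in scored[:top_k]]

captions = [
    ("08:14:02", "Forklift enters loading dock"),
    ("09:30:45", "Person in red jacket waits near gate B"),
    ("11:02:17", "Red truck parks at gate B"),
]
print(search_captions("person in a red jacket at the gate", captions))
```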

Interactive summarization supports user-driven controls and feedback loops. An operator might request a longer or shorter summary, or ask follow-up questions; in that case, a vision language model combined with a large language model can answer contextual queries and refine the summary. Multi-task VLMs can also combine summarization with question answering, tagging, and retrieval, so that an AI agent can both tag suspicious activity and prepare incident reports. VP Agent Actions, for example, can pre-fill incident reports and recommend next steps.
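
A "shorter" or "longer" request can be served without regenerating everything by keeping the highest-scoring events for the requested detail level and presenting them in time order. In this minimal sketch the detail levels, the event counts per level, and the relevance scores are all hypothetical; in practice the scores might come from the detector or the language model.

```python
def summarize(events, detail="short"):
    """Return event lines for a requested detail level: keep the highest-scoring
    events, then present them chronologically (HH:MM:SS strings sort correctly)."""
    limits = {"short": 2, "medium": 4, "long": len(events)}  # hypothetical levels
    top = sorted(events, key=lambda e: e["score"], reverse=True)[:limits[detail]]
    return [f"[{e['time']}] {e['text']}" for e in sorted(top, key=lambda e: e["time"])]

events = [
    {"time": "14:01:05", "text": "Van stops at north gate", "score": 0.6},
    {"time": "14:02:40", "text": "Person climbs fence", "score": 0.95},
    {"time": "14:03:10", "text": "Door alarm triggers", "score": 0.9},
    {"time": "14:05:00", "text": "Guard arrives", "score": 0.4},
    {"time": "14:07:30", "text": "Van leaves", "score": 0.5},
]
for line in summarize(events, "short"):
    print(line)
```

Selecting by score but displaying by time keeps even the shortest summary readable as a sequence of what happened.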

Future directions point to adaptive, personalized summaries in which systems learn user preferences and adjust the level of detail. The UX must therefore support fast controls and clear provenance, and agents must act within permissioned workflows so that automation does not compromise auditability. The aim is to unify detection, explanation, and action so that cameras become operational sensors, improving operators' effectiveness and reducing cognitive load in real-time environments.

FAQ

What is a vision-language model and how does it apply to video summarization?

A vision language model fuses visual encoders with language decoders to produce text from images or video. It can generate captions, answers, and short summaries that help operators and agents understand video content quickly.

How do VLMs handle temporal reasoning in long videos?

VLMs use temporal transformers, sparse attention, and sequence models to capture dependencies across frames. In addition, planning layers and Trees of Captions can structure events to improve causal reasoning.

What datasets are standard for training video summarization systems?

Common datasets include SumMe, TVSum, ActivityNet, and YouCook2, which provide highlight clips, temporal boundaries, and captions. Also, teams often augment public datasets with synthetic and site-specific data to cover rare events.

Which metrics evaluate the quality of generated summaries?

Automated metrics include ROUGE, METEOR, CIDEr, and F1 for clip selection. However, human evaluation is essential to judge readability, usefulness, and operational fidelity.

Can systems perform real-time summarization at scale?

Yes, with efficient encoders and retrieval strategies, systems can summarize live streams. Also, edge processing and on-prem deployment help meet latency and compliance requirements.

What role do prompts play in guided summarization?

Prompts steer the decoder toward specific styles, lengths, or focuses, and they enable zero-shot or few-shot adaptation. Also, prompt engineering helps large language decoders produce more context-aware captions and answers.

How do agents interact with summaries to support operators?

Agents can verify alarms, pre-fill reports, and recommend actions based on summarized video and related metadata. In addition, agent workflows reduce time per alarm and improve consistency.

What are common limitations of current benchmarks?

Benchmarks often miss subjective measures like usefulness and clarity, and they rarely test long-range temporal reasoning. Therefore, human-aligned and diverse testbeds are needed to better evaluate operational performance.

Is on-prem processing important for enterprise video systems?

Yes, on-prem processing preserves data control and helps meet regulations like the EU AI Act. Also, it reduces cloud dependency and keeps sensitive video within the customer environment.

How can teams improve model accuracy for a specific site?

Teams should fine-tune the model with labeled site data and use custom model workflows to add classes and rules. Also, continuous feedback from operators and agents helps the system adapt and perform better over time.