language model and vision language model: introduction

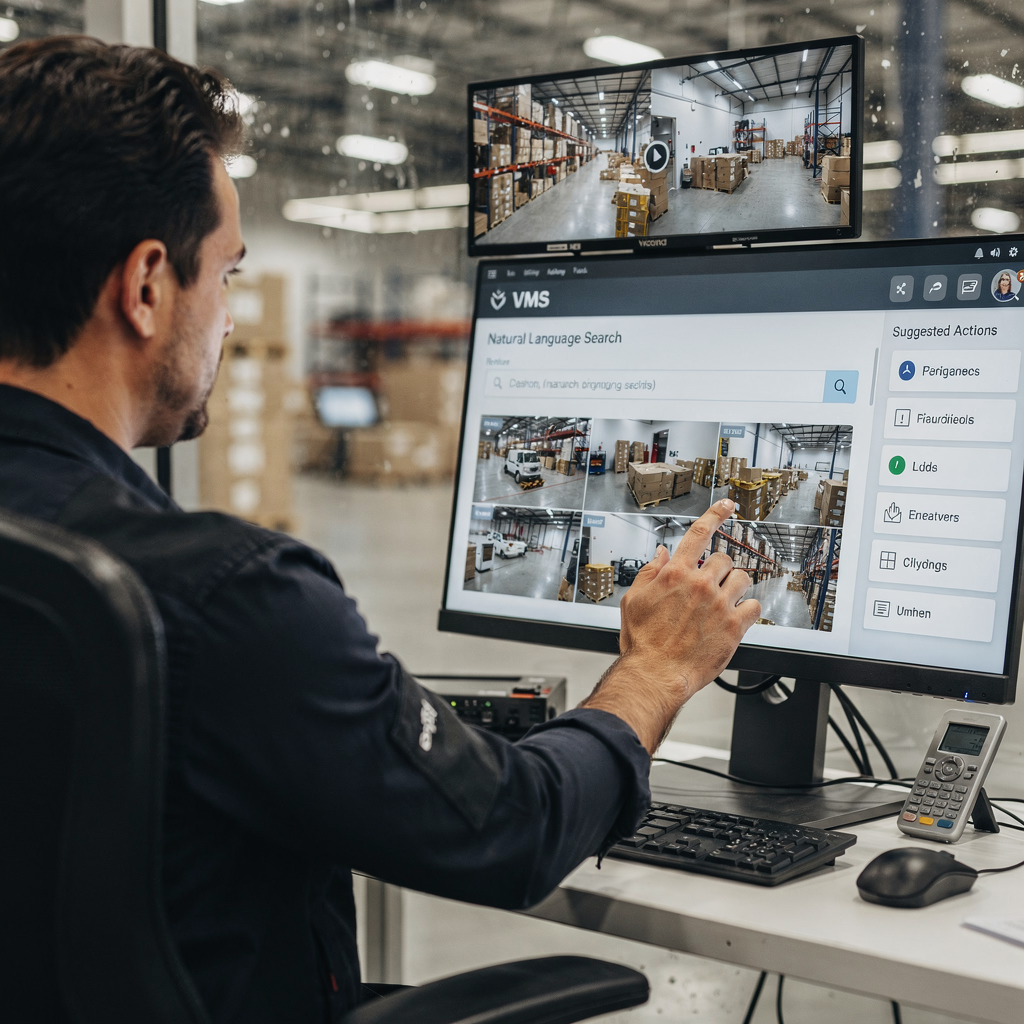

A language model predicts text. In a video management system (VMS), a language model maps words, phrases, and commands to probabilities and actions. A vision language model (VLM) adds vision to that capability: it combines visual input with textual reasoning so VMS operators can ask questions and receive human-readable descriptions. The contrast between text-only models and multimodal VLMs matters for practical deployments. Text-only systems index logs, tags, and transcripts, whereas VLMs index frames, events, and natural language together. For example, an operator can search “person loitering near gate after hours” and retrieve matching clips, which reduces time spent on manual review.

Studies show intelligent video processing using AI can cut manual review time by up to 60% [source]. The global video surveillance market is also growing quickly: analysts forecast roughly a 10.5% CAGR to 2030 and a market over $90 billion by then [source]. These figures underline the demand for smarter VMS.

visionplatform.ai addresses that need by adding an on-prem Vision Language Model and agent-driven workflows. Our platform turns detections into context, reasoning, and actionable insights. Operators no longer see only alerts; they read explained situations and get suggested actions, which reduces cognitive load and speeds decisions. The integration of vision and text changes how teams search video history, verify alarms, and automate reports. In airports, for example, semantic forensic search improves investigations; for a concrete example, see our Forensic search in airports solution. The next chapters explain the architecture and design choices behind these systems, and how VLMs learn to map video and language into searchable knowledge.

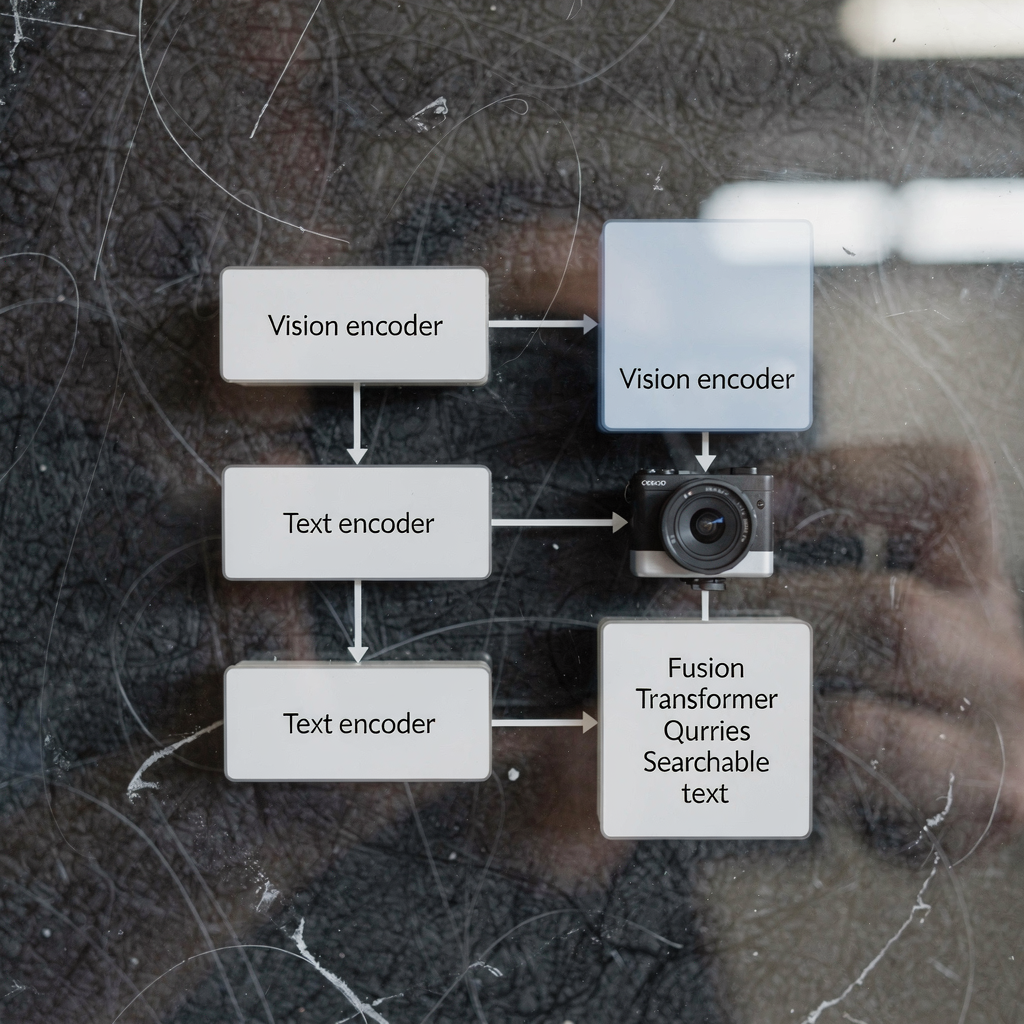

architecture of vision-language models and vision encoder: technical overview

The architecture of vision-language models typically has three core components: a vision encoder, a text encoder, and a multimodal fusion layer. The vision encoder processes raw frames, extracting features such as shapes, motion cues, and object embeddings. The text encoder turns captions, queries, and procedure text into vector representations. A fusion stage then aligns image and text tokens for joint reasoning. Many systems build this stack on a transformer backbone, and some designs use a vision transformer as the vision encoder. Dual encoder models separate visual and textual streams and use contrastive learning to align embeddings, while other designs use cross-attention to integrate signals at depth. The transformer architecture enables context-aware attention across tokens, which supports tasks such as visual question answering and semantic captioning.

In production VMS, latency and throughput matter. On-prem inference often uses optimized vision encoders and quantized pretrained models to reduce compute. Vision-language tasks such as event captioning or anomaly detection require both sequence modeling and spatial feature maps. A foundation model can provide general visual and textual priors, and site-specific fine-tuning then adapts the model to a specific task. For instance, models like CLIP offer strong image and text alignment that many systems use as a pretrained starting point. Developers often combine computer vision and natural language techniques to produce fast, reliable perception.

visionplatform.ai uses tailored encoders and fusion layers to expose video events as natural language descriptions. This design lets the VP Agent Suite offer VP Agent Search and VP Agent Reasoning with low latency. For readers interested in object-level analytics, our People detection in airports solution shows how vision models feed higher-level reasoning.
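To make the dual encoder idea more concrete, here is a minimal sketch of a symmetric contrastive (InfoNCE-style) loss of the kind used to align image and text embeddings. It is an illustration only, not the implementation of any particular product or library: the function names, the toy two-dimensional embeddings, and the `temperature` value of 0.07 are all assumptions chosen for the example.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over a batch where pair k (image k, text k) matches.

    The temperature value is an illustrative assumption.
    """
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = similarity between image i and text j, scaled by temperature
    logits = [[dot(i, t) / temperature for t in txts] for i in imgs]

    def cross_entropy(rows):
        # For row k the correct "class" is column k (the matching pair).
        total = 0.0
        for k, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_sum = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_sum - row[k]
        return total / n

    # Transpose to get the text-to-image direction.
    cols = [[logits[r][c] for r in range(n)] for c in range(n)]
    return 0.5 * (cross_entropy(logits) + cross_entropy(cols))

# Toy batch: matched pairs give a low loss, mismatched pairs a high one.
aligned = contrastive_loss([[1, 0], [0, 1]], [[1, 0], [0, 1]])
shuffled = contrastive_loss([[1, 0], [0, 1]], [[0, 1], [1, 0]])
```

Training pushes the loss toward the `aligned` case, so matching frames and captions end up close together in the shared embedding space while mismatched ones are pushed apart.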

vlm, vlms and ai system integration in VMS

First, clarify terms. A VLM refers to a single vision-language model instance. The plural, VLMs, refers to collections of such models deployed across a platform. An AI system integrates VLMs, telemetry, and business rules into a cohesive VMS. Integration typically follows one of three approaches: cloud-first, on-premise, or edge deployment. Cloud-first solutions centralize heavy compute and large pre-trained models for scale. On-premise deployments keep video and models inside customer environments for compliance. Edge deployments push inference close to cameras for minimal latency. Each approach has trade-offs: cloud offers elasticity and simpler updates, edge reduces bandwidth and lowers latency, and on-premise balances control and compliance. visionplatform.ai focuses on on-prem and edge by default, retaining video and models inside the environment. This design aligns with EU AI Act considerations and reduces cloud dependency for sensitive sites.

An AI system can orchestrate multiple VLMs for different tasks. For example, one VLM may handle person detection, another may generate captions, and a third may verify alarms. The system streams events, applies rules, and exposes structured outputs for AI agents, which then reason over VMS data to recommend actions. VLMs can also be chained, so that a VLM that describes an event feeds a reasoning VLM. That pattern enables more nuanced decision support. For transport monitoring, VLMs running in parallel detect vehicles, read plates, and describe behaviors. For intrusion cases, outputs from an intrusion detection model inform response and reporting; see our Intrusion detection in airports page. Integration also requires APIs and standards such as ONVIF and RTSP to connect cameras. Finally, operator workflows need human-in-the-loop controls to manage autonomy safely.
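The chaining pattern described above can be sketched as a list of stages that each enrich a shared event record. Everything here is hypothetical: the `Event` fields, the `describe` and `reason` stand-ins (which use string formatting and a simple rule in place of real model calls), and the approval flag are assumptions made for illustration, not an actual VMS or visionplatform.ai API.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Event:
    camera_id: str
    detections: List[str]
    description: str = ""
    recommendation: str = ""
    # Human-in-the-loop: nothing is executed until an operator approves.
    needs_operator_approval: bool = True

def describe(event: Event) -> Event:
    """Stand-in for a captioning model: turns detections into a description."""
    event.description = f"{', '.join(event.detections)} seen on {event.camera_id}"
    return event

def reason(event: Event) -> Event:
    """Stand-in for a reasoning model: a fixed rule replaces real inference."""
    if "person" in event.detections and "restricted_area" in event.detections:
        event.recommendation = "dispatch guard"
    else:
        event.recommendation = "log only"
    return event

def run_chain(event: Event, stages: List[Callable[[Event], Event]]) -> Event:
    """Pass the event through each stage in order; each stage feeds the next."""
    for stage in stages:
        event = stage(event)
    return event

result = run_chain(Event("gate-3", ["person", "restricted_area"]), [describe, reason])
print(result.description)
print(result.recommendation)
```

Keeping each stage behind the same function signature makes it straightforward to swap a model, add a verification stage, or change the order without touching the rest of the chain.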

model trained and language models trained: data and training pipelines

Preparing a model trained on video-text data requires curated datasets and multi-stage pipelines. The pipeline usually begins with data collection: raw video, captions, annotations, and transcripts form the base. Next, preprocessing extracts frames, aligns timestamps, and pairs image and text snippets. Training datasets for VLMs must include diverse scenes, labeled actions, and descriptive captions, and they often combine public datasets with proprietary footage. The model learns to correlate pixels with language through tasks such as contrastive learning, masked language modeling, and caption generation. Language models trained on captions and transcripts supply robust textual priors, and these pre-trained language components accelerate downstream fine-tuning.

Transfer learning is common: large pre-trained models provide general knowledge, then targeted fine-tuning adapts them to a specific task. For VMS tasks, fine-tuning on domain-specific annotations is crucial. For instance, a model trained on airport footage learns to recognize boarding gates, queues, and luggage behaviors. Data augmentation and synthetic data generation help where annotations are scarce. Pipeline stages include batch training, validation, and deployment testing on edge devices or servers. Monitoring during deployment catches drift and performance drops, and teams often use continuous learning loops to retrain models with newly annotated events.

visionplatform.ai supports custom model workflows: use a pre-trained model, improve it with your data, or build one from scratch. This flexibility helps sites develop reliable models for targeted alerts and forensic search. When training, practitioners must also manage privacy, retention, and compliance requirements. Using on-prem pipelines preserves control over sensitive video and metadata.
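As an illustration of the preprocessing step that aligns timestamps and pairs frames with text, the sketch below assigns each sampled frame time to the caption interval that contains it. The function name, the `(start, end, text)` tuple layout, and the assumption that captions are sorted and non-overlapping are all choices made for this example; real pipelines will differ.

```python
from bisect import bisect_right

def pair_frames_with_captions(frame_times, captions):
    """Pair each frame timestamp with the caption whose interval contains it.

    captions: list of (start_s, end_s, text), assumed sorted by start time
    and non-overlapping. Frames outside every interval are dropped.
    """
    starts = [c[0] for c in captions]
    pairs = []
    for t in frame_times:
        i = bisect_right(starts, t) - 1  # last caption starting at or before t
        if i >= 0 and t < captions[i][1]:
            pairs.append((t, captions[i][2]))
    return pairs

captions = [
    (0.0, 2.0, "person approaches gate"),
    (5.0, 8.0, "vehicle stops abruptly"),
]
pairs = pair_frames_with_captions([0.5, 1.5, 3.0, 5.0, 8.0], captions)
for t, text in pairs:
    print(f"{t:>4.1f}s  {text}")
```

Frames at 3.0 s and 8.0 s fall outside both caption intervals (the end time is treated as exclusive), so they are left unpaired rather than given a misleading label.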

vision language models work, use case and applications of vision-language models

Vision language models work by mapping visual signals and textual queries into a shared representation space. This mapping enables retrieval, classification, and explanation tasks. In VMS, operators type natural language queries such as “show footage of people wearing red jackets” and the system responds with ranked clips. That semantic video search reduces time spent scrubbing footage. For event detection, VLMs can generate language descriptions such as “a person entering a restricted area” or “a vehicle stopping abruptly.” These natural language descriptions support automated tagging and summarisation.

A concrete use case is security surveillance, where combining camera feeds, access logs, and AI agents enhances situational awareness. An operator receives an explained alert that correlates a detection with access control entries and camera context. visionplatform.ai demonstrates this with VP Agent Reasoning, which verifies, explains, and recommends actions. Transport monitoring is another use case, where VLMs detect near-miss events, monitor passenger flows, and support ANPR workflows; see our ANPR/LPR in airports page. In healthcare, VLMs assist in patient monitoring and fall detection by generating timely natural language descriptions of incidents; see our Fall detection in airports page for a similar approach.

Applications of vision language models include automated tagging, video summarisation, forensics, and visual question answering. Models can generate incident summaries, pre-fill reports, and trigger workflows. VLMs also help control false alarms by providing context and cross-checks from other sensors. For forensic tasks, semantic search enables queries that match how humans describe events, not just camera IDs. Overall, VLMs can transform VMS from passive archives into searchable, explainable systems that support rapid, consistent decision-making.
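Once clips and queries live in a shared representation space, semantic search reduces to ranking clips by similarity to the query vector. The sketch below shows that ranking step with cosine similarity. The clip IDs and three-dimensional vectors are made up for illustration; in a real system both would come from the vision and text encoders, which are not shown here.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query_vec, clip_index, top_k=2):
    """Return the IDs of the top_k clips most similar to the query embedding."""
    scored = [(cosine(query_vec, vec), clip_id) for clip_id, vec in clip_index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [clip_id for _, clip_id in scored[:top_k]]

# Hypothetical precomputed clip embeddings (toy values).
clip_index = {
    "cam1_0930": [0.9, 0.1, 0.0],
    "cam2_1015": [0.1, 0.9, 0.0],
    "cam3_2210": [0.7, 0.3, 0.1],
}
# Hypothetical embedding of the operator's text query.
query = [1.0, 0.0, 0.0]
results = search(query, clip_index)
print(results)
```

A linear scan like this is fine for a handful of clips; large video archives would typically use an approximate nearest-neighbour index instead, which is outside the scope of this sketch.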

evaluating vision language models and developing vision-language models: performance and future trends

Evaluating vision language models requires a mix of accuracy, latency, and robustness metrics. Accuracy covers detection precision, caption quality, and retrieval relevance. Latency measures end-to-end response times for live queries and real-time alarm verification. Robustness tests include performance under varied lighting, occlusion, and adversarial perturbations. Operational KPIs also include reductions in operator time per alarm and false positive rates. For example, AI-enhanced video analytics have improved incident detection speed and accuracy in surveys of VMS users [source].

Challenges persist. Computational load and data bias remain major issues. Privacy and compliance constraints limit data sharing and require on-prem or edge processing. Data annotation is costly, which is why transfer learning and pre-trained language or vision components often form the backbone of production models. Emerging trends focus on edge AI, 5G-enabled low-latency pipelines, and privacy-preserving techniques such as federated learning and on-device inference.

Developing vision-language models also means improving explainability and auditability so operators trust recommendations. Newer models emphasize audit trails, deterministic reasoning, and alignment with policies like the EU AI Act. In practice, test VLMs on representative datasets and use continuous monitoring to detect drift. Future systems will combine vision and natural language encoders with domain-specific agents to support decision workflows. In short, evaluating vision language models must balance technical metrics with human factors. Teams should measure operator workload, decision speed, and incident resolution accuracy alongside model F1 and inference time. As VLMs enhance VMS, they will unlock new operational value while requiring careful governance and tooling.
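Two of the metrics mentioned above, model F1 and inference latency, can be computed with a few lines of code. The sketch below derives precision, recall, and F1 from confusion counts and reports a nearest-rank 95th-percentile latency. The counts and latency samples are invented for the example and do not come from any measured system.

```python
import math

def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

# Illustrative numbers only.
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=20)
latencies_ms = [120, 95, 110, 400, 105, 98, 130, 101, 99, 115]
p95 = percentile(latencies_ms, 95)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f} p95_latency={p95}ms")
```

Reporting a high percentile alongside the average matters for live alarm verification, because a single slow response (400 ms in this toy sample) is hidden by the mean but is exactly what an operator notices.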

FAQ

What is the difference between a language model and a vision-language model?

A language model processes text and predicts words or phrases based on context. A vision-language model combines visual inputs with text so it can describe images, answer questions about video, and perform semantic search.

How do VLMs improve forensic search in VMS?

VLMs index visual events with natural language descriptions, which lets operators query footage using everyday terms. This reduces time to find incidents and supports more accurate investigations.

Can VLMs run on edge devices or do they need the cloud?

VLMs can run on the edge, on-premise, or in the cloud depending on performance and compliance needs. Edge deployment lowers latency and keeps video inside the customer environment for privacy reasons.

What data is required to train VLMs for a specific site?

Training requires annotated video, captions, and transcripts representative of the site. Additional labels and fine-tuning help the model adapt to site-specific classes and behaviors.

How do vision encoders and text encoders work together?

The vision encoder extracts visual features from frames while the text encoder converts words into vectors. Fusion layers or cross-attention then align those vectors for joint reasoning and retrieval.

Are there privacy risks when deploying vision-language models?

Yes. Video and metadata can be sensitive, so on-premise and edge solutions help reduce exposure. Privacy-preserving techniques and clear data governance are essential.

What performance metrics should I track for VLMs in VMS?

Track detection accuracy, retrieval relevance, inference latency, robustness under changing conditions, and operational metrics like operator time saved. These metrics capture both model quality and business impact.

How do AI agents use VLMs inside a control room?

AI agents consume VLM outputs, VMS events, and contextual data to verify alarms and recommend actions. Agents can pre-fill reports, suggest responses, or execute workflows within defined permissions.

Can VLMs reduce false alarms in surveillance?

Yes. By providing context, correlating multiple sources, and explaining detections, VLMs help operators distinguish true incidents from noise. This lowers false positives and improves response time.

Where can I see examples of VLM applications in airports?

visionplatform.ai documents several practical deployments such as people detection, ANPR/LPR, fall detection, and forensic search. For real examples, see People detection in airports (https://visionplatform.ai/people-detection-in-airports/), ANPR/LPR in airports (https://visionplatform.ai/anpr-lpr-in-airports/), and Forensic search in airports (https://visionplatform.ai/forensic-search-in-airports/).