Introduction to Vision Transformer

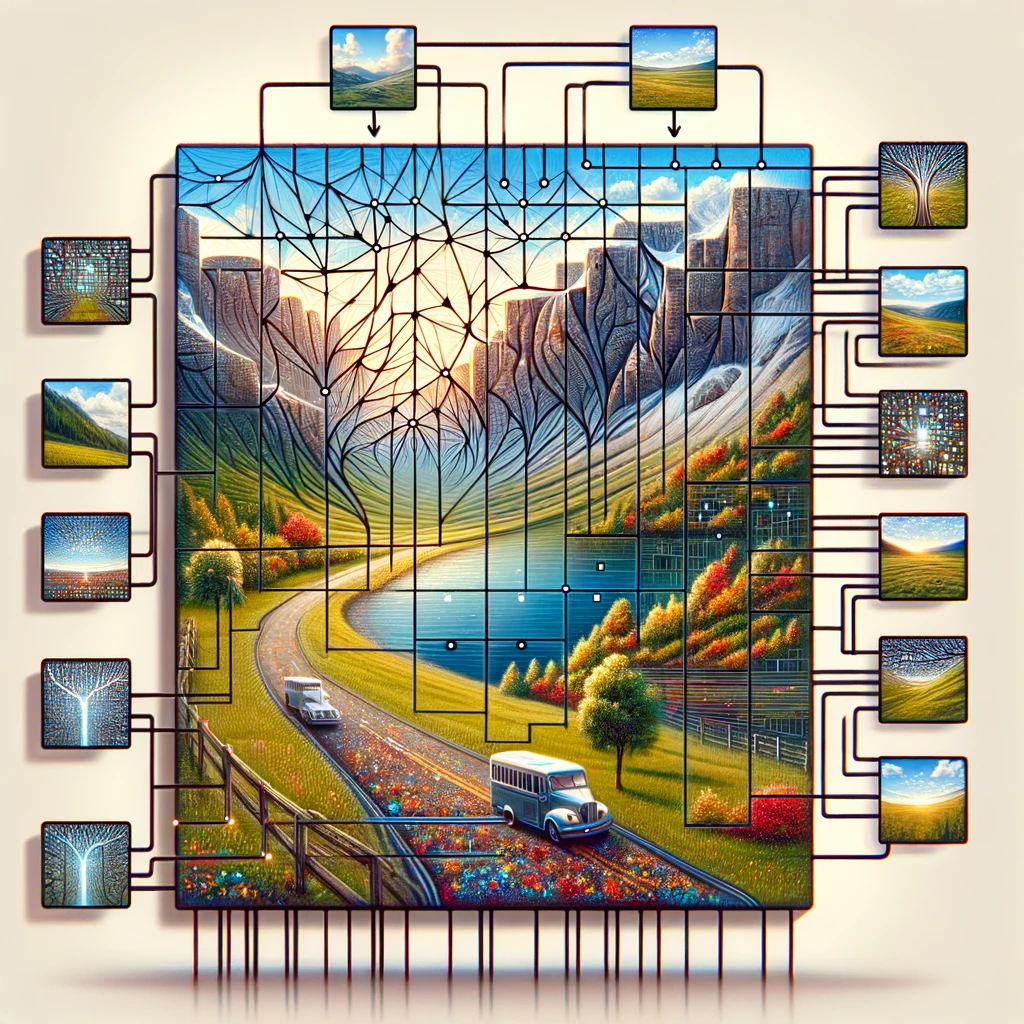

The introduction of the Vision Transformer (ViT) marked a pivotal moment in computer vision. The field had long been dominated by convolutional neural networks (CNNs), and the landscape began to shift when ViT applied the transformer architecture, originally designed for natural language processing, to image classification. Dosovitskiy et al. showed that a pure transformer applied directly to sequences of image patches can, when pre-trained on large datasets, match or outperform state-of-the-art CNNs on major benchmarks like ImageNet while requiring substantially fewer computational resources to train.

The Vision Transformer splits the input image into a grid of fixed-size patches and treats each patch as a token, much like a word in a sentence. Each patch is embedded, and position embeddings are added to retain spatial information, which is crucial for understanding the image as a whole. At the model’s core, a standard transformer encoder uses self-attention to relate every part of the image to every other part, so predictions can draw on global information rather than being built up from local features as in a CNN.
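To make the patch-splitting step concrete, here is a minimal sketch in plain Python, with nested lists instead of a tensor library so that it stays self-contained. The function name `image_to_patches` and the row-major flattening order are illustrative assumptions; real implementations perform the same reshaping with array operations.

```python
def image_to_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches.

    Patches are returned in raster order (left to right, top to bottom); each patch
    is flattened row by row, pixel by pixel, with all channels of a pixel kept together.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])  # append every channel of this pixel
            patches.append(flat)
    return patches


# A toy 4 x 4 RGB image whose pixel (r, c) holds the value 10*r + c in every channel.
toy = [[[10 * r + c] * 3 for c in range(4)] for r in range(4)]
tokens = image_to_patches(toy, patch_size=2)
print(len(tokens), len(tokens[0]))  # 4 12: four patch "tokens", each 2*2*3 values
```

Each inner list is one "word" of the image; the next step in ViT maps these raw vectors to embeddings.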

This shift toward transformers for image recognition challenges the conventional wisdom that convolutional layers are required to process visual data effectively. There is an important caveat, however: ViT lacks some of the inductive biases built into CNNs, such as locality and translation equivariance, so it performs best when pre-trained on large datasets. At that scale, ViT delivers strong image classification results while requiring less compute to pre-train than comparable CNNs, paving the way for more efficient and scalable image recognition.

The Significance of ViT in Computer Vision

The Vision Transformer (ViT) has brought about a renaissance in computer vision by demonstrating that the transformer architecture is not just viable but highly effective for image classification. Unlike traditional approaches that rely heavily on convolutional neural networks (CNNs), ViT treats an image as a sequence of patches and applies the transformer directly to that sequence. Inspired by the success of transformers in natural language processing, this method works exceptionally well when pre-trained at scale, showcasing the versatility of transformer models across different domains of AI.

The ViT paper by Dosovitskiy et al. rests on an insight captured in its title: “an image is worth 16×16 words”, meaning that each 16×16-pixel patch of an image plays the role of a word in text processing. The analogy is not only poetic but also technically useful, because it allows pre-training techniques similar to those used in NLP. Such pre-trained models, when fine-tuned on specific image classification tasks, achieve remarkable accuracy, often matching or surpassing CNNs. Embeddings convert the pixel values of each patch into a vector that a transformer encoder can process, and from these vectors the model learns complex patterns and relationships within the visual data.
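The “16×16 words” arithmetic can be written out directly. For an image of height H, width W, and C channels cut into P×P patches (symbols introduced here for illustration), the number of patch tokens N and the length of each flattened patch vector are shown below, worked through for a 224×224 RGB image with P = 16:

```latex
\begin{aligned}
N &= \frac{H W}{P^{2}}, & \mathbf{x}_p^{i} &\in \mathbb{R}^{P^{2} C}, \quad i = 1, \dots, N \\
N &= \frac{224 \cdot 224}{16^{2}} = \frac{50\,176}{256} = 196, & P^{2} C &= 16^{2} \cdot 3 = 768
\end{aligned}
```

So a 224×224 image becomes a “sentence” of 196 patch tokens, each a vector of 768 raw pixel values before embedding.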

Moreover, the self-attention mechanism within the transformer allows ViT to focus on relevant parts of the image irrespective of their spatial position, facilitating a more nuanced understanding of the visual content. This flexibility comes at a cost: because ViT builds in fewer assumptions about images than a CNN does, it typically needs more training data, and it shines mainly when pre-trained on large datasets and then fine-tuned on the target task.

Embeddings and Transformer Architecture: The Core of ViT

Embeddings and the transformer architecture form the cornerstone of the Vision Transformer (ViT), setting a new standard for how images are analyzed and understood in computer vision. By adopting the transformer, a technology that revolutionized natural language processing, ViT introduces a paradigm shift in dealing with visual data. The process begins by breaking the input image into a grid of patches, like cutting a picture into a mosaic of smaller, manageable pieces. Each patch, the counterpart of a token in NLP, is flattened and linearly projected into a high-dimensional vector, putting its pixel information into a form the transformer can process.

The genius of ViT lies in applying the transformer architecture directly to the sequence of embedded patches. Instead of convolutions, it relies on self-attention, a mechanism that lets the model weigh the importance of different parts of the image relative to one another. As a result, every layer can combine local and global context, giving ViT a more flexible view of the image than the fixed, local receptive fields of a convolutional neural network (CNN).
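A minimal sketch of single-head scaled dot-product self-attention, the operation described above, in plain Python. The matrices `w_q`, `w_k`, and `w_v` stand in for learned query, key, and value projections; the helper names are illustrative and not taken from any library.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence of token vectors.

    Each output row is a weighted average of all value vectors, so every patch can
    draw on every other patch within a single layer.
    """
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    scale = math.sqrt(len(k[0]))
    weights = [softmax([sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]) for qi in q]
    return matmul(weights, v), weights


# Three toy "patch embeddings" of dimension 2, with identity projections for readability.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
output, attention_weights = self_attention(tokens, identity, identity, identity)
print(attention_weights[0])  # how strongly token 0 attends to tokens 0, 1, and 2
```

Token 0 gives equal weight to itself and to token 2, because both produce the same query-key score; in a trained model, the learned projections decide which patches attend to which.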

Position embeddings are also crucial. Self-attention by itself ignores the order of its inputs, so without them the transformer could not tell where each patch came from. Adding a learned position embedding to each patch embedding restores this spatial information, letting the model understand the arrangement of and relationships between different parts of the image. With this design, Dosovitskiy et al. showed that a pure transformer applied directly to sequences of image patches can excel at image classification, with later work extending the approach to tasks such as object detection and image segmentation, and that, when pre-trained on large datasets, it does so with fewer computational resources than comparable CNNs. The ViT architecture therefore stands as a testament to the scalability and efficiency of transformers and heralds a new era of innovation in how machines perceive and interpret the visual world.
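The embedding steps can be sketched as the encoder input from the ViT paper, z_0 = [x_class; x_p^1 E; …; x_p^N E] + E_pos: every flattened patch is multiplied by the same projection matrix E, a learnable class token (which the classification head later reads) is prepended, and a learned position embedding is added at each position. In this sketch, the parameters are random stand-ins for learned values, and `embed_patches` is a hypothetical helper name.

```python
import random


def embed_patches(patches, projection, class_token, position_embeddings):
    """Build z_0 = [x_class; x_p^1 E; ...; x_p^N E] + E_pos.

    patches:             N flattened patches, each of length P*P*C
    projection:          (P*P*C) x D matrix E, shared by all patches
    class_token:         learnable D-dim vector prepended to the sequence
    position_embeddings: (N + 1) x D matrix, one learned vector per position
    """
    projected = [
        [sum(p * e for p, e in zip(patch, column)) for column in zip(*projection)]
        for patch in patches
    ]
    sequence = [list(class_token)] + projected
    if len(sequence) != len(position_embeddings):
        raise ValueError("need one position embedding per token, including the class token")
    return [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(sequence, position_embeddings)]


# Random stand-ins for learned parameters; a trained model learns E, the class token, and E_pos.
rng = random.Random(0)
num_patches, patch_dim, model_dim = 4, 12, 8
patches = [[rng.random() for _ in range(patch_dim)] for _ in range(num_patches)]
E = [[rng.gauss(0, 0.02) for _ in range(model_dim)] for _ in range(patch_dim)]
cls = [0.0] * model_dim
E_pos = [[rng.gauss(0, 0.02) for _ in range(model_dim)] for _ in range(num_patches + 1)]
z0 = embed_patches(patches, E, cls, E_pos)
print(len(z0), len(z0[0]))  # 5 8: class token plus 4 patches, each an 8-dim vector
```

Because E is shared, every patch is embedded the same way; only the added position embedding tells the transformer where a patch sits in the image.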

The Role of Self-Attention in Vision Transformer

The Vision Transformer tackles computer vision tasks through self-attention, the core mechanism of the original transformer architecture. Self-attention lets every patch attend to every other patch across the entire image, so the model learns which regions matter for the task at hand, such as image classification. Pre-trained on large amounts of data and then fine-tuned, the Vision Transformer performs very well on image recognition, including on multiple mid-sized or small image recognition benchmarks. To produce a prediction, ViT prepends a learnable class token to the patch sequence; the classification head reads that token’s final representation, which has gathered information from all patches, and maps it to class labels for the image.
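A sketch of the classification head: take the encoder’s final representation of the class token (position 0), apply layer normalization, and map it to class scores with a single linear layer, as ViT does at fine-tuning time. The learned scale and shift of layer normalization are omitted here, and the weights, labels, and `classify` helper are made up for illustration.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (learned scale/shift omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def classify(encoder_output, head_weights, head_bias, labels):
    """Predict a label from the class token, which sits at position 0 of the encoder output."""
    y = layer_norm(encoder_output[0])
    logits = [sum(w * v for w, v in zip(row, y)) + b for row, b in zip(head_weights, head_bias)]
    peak = max(logits)
    exps = [math.exp(s - peak) for s in logits]  # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best], probs


# Toy encoder output: the class token followed by one patch token, with D = 3.
encoder_output = [[2.0, 0.0, -2.0], [0.5, 0.5, 0.5]]
head_weights = [[1.0, 0.0, -1.0], [-1.0, 0.0, 1.0]]  # one row of D weights per class
label, probs = classify(encoder_output, head_weights, [0.0, 0.0], ["cat", "dog"])
print(label)  # cat
```

Only the class token feeds the head; the patch tokens influence the prediction indirectly, through the attention layers that wrote information into it.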

This approach contrasts starkly with earlier uses of attention in vision, which either combined attention with convolutional networks or replaced certain components of them while keeping their overall structure in place. Dosovitskiy et al. report that ViT, pre-trained on large amounts of data and transferred to multiple mid-sized or small image recognition benchmarks (such as ImageNet, CIFAR-100, and VTAB), attains excellent results compared to state-of-the-art convolutional networks while requiring substantially fewer computational resources to train. In other words, reliance on CNNs is not necessary for many computer vision tasks. Each transformer block in ViT uses multi-head attention, which processes all patches in parallel and offers insight into the image as a whole rather than into isolated parts. Because transformers’ applications to computer vision had previously remained limited, this result opened up a large and promising space for new work.

Vision Transformer vs. Convolutional Neural Networks

The Vision Transformer presents a groundbreaking shift in how computer vision tasks are approached, challenging the long-standing dominance of CNNs. The paper titled “An Image is Worth 16×16 Words” captures the essence of the method: the image is treated as a sequence of patches, which a stack of transformer blocks then processes. Adapting the original transformer design to vision in this way requires little more than cutting the image into patches, and the resulting model performs very well across different image recognition tasks.

CNNs, by contrast, analyze images through convolutional filters that focus on local features within small receptive fields, widening their view only gradually as layers are stacked. Vision Transformers lack this constraint: self-attention assesses the relationships between all patches, irrespective of their location in the original image. This fundamental difference lets Vision Transformers capture more contextual information, and for many computer vision tasks the pure transformer architecture, given enough pre-training data, not only competes with but often outperforms convolutional models.
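The difference in reach can be quantified with a deliberately simplified calculation: each stacked stride-1 k×k convolution widens the receptive field by k − 1 pixels, whereas one self-attention layer already connects every patch to every other patch. The sketch ignores strides, pooling, and dilation, which real CNNs use to grow their receptive fields much faster, so treat it as an illustration of the trend rather than a model of any specific network. The helper names are hypothetical.

```python
def conv_receptive_field(num_layers, kernel_size=3):
    """Receptive field, in pixels along one axis, of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def layers_to_cover(width, kernel_size=3):
    """Fewest stacked stride-1 convolutions whose receptive field spans `width` pixels."""
    layers = 0
    while conv_receptive_field(layers, kernel_size) < width:
        layers += 1
    return layers


print(conv_receptive_field(5))  # 11: five 3x3 layers see an 11x11 window
print(layers_to_cover(224))     # 112 layers before one output sees a full 224-pixel width
# By contrast, a single ViT self-attention layer links every patch to every other patch.
```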

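Moreover, the research paper demonstrates that Vision Transformer models, especially when pre-trained on large datasets, transfer effectively across a range of computer vision tasks. This adaptability showcases their versatility and potential to redefine vision processing, providing a compelling alternative to the convolutional paradigm. Scale matters, however. When the original ViT models are pre-trained on ImageNet alone, as in the first rows of the table below, they trail ResNets of comparable size by a few percentage points according to the paper, and ViT-Large does not improve on ViT-Base; pre-training on much larger datasets such as JFT-300M is what lets ViT match or exceed the best CNNs. Training recipes such as DeiT’s narrow this gap even when training on ImageNet alone.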

| Model | Parameters (millions) | Resolution | ImageNet Top-1 Accuracy | Pre-training Data | Notes |
|---|---|---|---|---|---|
| ViT-Base | 86 | 384×384 | 77.9% | ImageNet | Original ViT model |
| ViT-Large | 307 | 384×384 | 76.5% | ImageNet | Larger version of ViT-Base |
| ViT-Huge | 632 | 384×384 | 77.0% | ImageNet | Largest ViT model |
| DeiT-Small | 22 | 224×224 | 79.8% | ImageNet | Data-efficient training recipe; a distilled variant adds a distillation token |
| DeiT-Base | 86 | 224×224 | 81.8% | ImageNet | Larger version of DeiT-Small |
| Swin-B | 88 | 224×224 | 83.5% | ImageNet | Hierarchical architecture with shifted-window attention |

The Innovator’s Perspective: Dosovitskiy and the Neural Network Revolution

The transformative impact of the Vision Transformer (ViT) on computer vision owes much to the pioneering work detailed in “An Image is Worth 16×16 Words” by Dosovitskiy et al., published at ICLR 2021. This seminal paper introduced a novel model architecture and shifted image processing away from convolutional networks toward a framework in which transformers work directly on sequences of image patches. The model breaks the entire image into patches and processes them with a stack of transformer blocks, and this surprisingly simple recipe performs very well on a range of image recognition benchmarks.

Dosovitskiy’s work challenges the conventional reliance on CNNs for vision tasks, demonstrating that a model pre-trained on vast amounts of data and transferred to different image recognition tasks attains excellent results compared to state-of-the-art convolutional networks, and in some cases surpasses them. Until then, transformers’ applications to computer vision had remained limited, usually in combination with convolutional networks; ViT suggested that the opportunities are far broader. Its success lies in considering the image in its entirety, using position embeddings and self-attention to understand the relationships between patches regardless of their location in the original image.

Moreover, the paper’s efficiency results underscore a crucial advantage: pre-training a Vision Transformer required substantially fewer computational resources than pre-training CNNs of comparable accuracy. This efficiency, combined with the architecture’s scalability across many computer vision tasks, from image classification to segmentation in later work, heralds a new direction in neural network design and application. As ViT and subsequent variants like the Swin Transformer show, the transformer architecture offers a versatile and powerful path for computer vision, a path first illuminated by Dosovitskiy and his team.

Bridging Vision and Language: From Natural Language Processing to Vision Transformer

The arrival of the Vision Transformer (ViT) marks a significant leap forward for computer vision, bridging methodologies from natural language processing (NLP) to visual understanding. The research paper titled “An Image is Worth 16×16 Words” describes how ViT employs a series of transformer blocks taken from the original transformer architecture used in NLP. The crux of this adaptation is treating the image as a sequence of patches, just as a sentence is treated as a sequence of words.

This methodology showcases the transformer’s unique capability to process and analyze visual data. Unlike traditional convolutional networks, which rely on local filtering and pooling operations, ViT models use self-attention to consider the entire image, facilitating a comprehensive understanding of both local and global context. Multi-head attention further enriches this process by letting the model focus on various parts of the image simultaneously, enhancing its ability to discern intricate patterns and relationships within the visual data.
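Multi-head attention can be sketched by splitting the model dimension into `num_heads` equal slices, running scaled dot-product attention independently in each slice, concatenating the results, and mixing them with an output projection `w_o`. Each head therefore produces its own attention map. Plain Python lists stand in for tensors, the function names are illustrative, and the identity weights in the usage example are chosen only to make the output easy to inspect.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_attention(tokens, w_q, w_k, w_v, w_o, num_heads):
    """Split the model dimension into heads, attend within each head independently,
    concatenate the head outputs, then mix them with the output projection w_o."""
    q, k, v = matmul(tokens, w_q), matmul(tokens, w_k), matmul(tokens, w_v)
    dim = len(q[0])
    if dim % num_heads:
        raise ValueError("model dimension must be divisible by num_heads")
    head_dim = dim // num_heads
    heads, patterns = [], []
    for h in range(num_heads):
        cols = slice(h * head_dim, (h + 1) * head_dim)
        qh, kh, vh = [r[cols] for r in q], [r[cols] for r in k], [r[cols] for r in v]
        weights = [
            softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(head_dim) for kj in kh])
            for qi in qh
        ]
        patterns.append(weights)           # each head has its own attention map
        heads.append(matmul(weights, vh))  # (num_tokens x head_dim)
    concatenated = [[x for head in heads for x in head[i]] for i in range(len(tokens))]
    return matmul(concatenated, w_o), patterns


I4 = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
tokens = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
out, patterns = multi_head_attention(tokens, I4, I4, I4, I4, num_heads=2)
print(patterns[0][0])  # head 0: where token 0 looks
print(patterns[1][0])  # head 1: a different pattern for the same token
```

Because each head sees a different slice of the representation, the two heads attend differently even with identical projection matrices.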

Furthermore, ViT’s classification head, which assigns class labels by reading the final state of a learnable class token, marks a departure from the convolutional paradigm and illustrates that reliance on CNNs is not necessary for excellent results in computer vision. Through the effective use of transformers, ViT attains excellent results compared to state-of-the-art convolutional networks across a spectrum of image recognition benchmarks, reinforcing the argument that transformers’ applications to computer vision, previously limited, extend far beyond the bounds set by earlier models.

Deep Dive into ViT Architecture

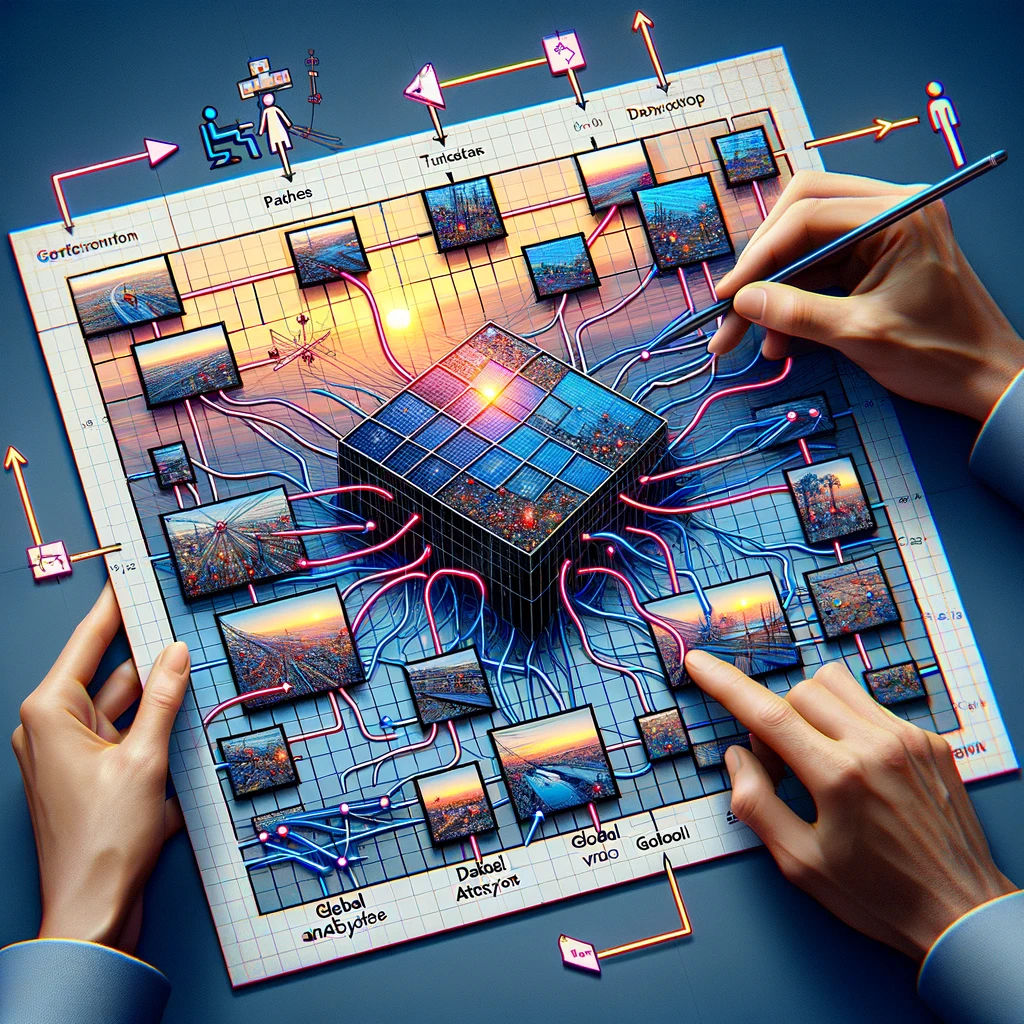

The architecture of the Vision Transformer represents a paradigm shift in how images are interpreted for computer vision tasks. Central to it is the use of image patches, analogous to tokens in natural language processing, which are processed by a stack of transformer blocks. This approach lets the entire image be analyzed holistically rather than through the localized lens of convolutional operations.

Each image is split into fixed-size patches. These patches are linearly embedded, combined with position embeddings that record their location in the original image, and fed into the transformer encoder. There, self-attention dynamically weighs the importance of each patch in relation to the others, building a nuanced understanding of the visual content. Each encoder block pairs this attention layer with a small MLP, applying layer normalization before each of the two and adding a residual connection around each.
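The block structure can be sketched in the pre-norm layout used by the ViT paper: z′ = MSA(LN(z)) + z, then z_out = MLP(LN(z′)) + z′, with a GELU nonlinearity inside the MLP. To keep the example short, the attention layer is passed in as a function, biases and the learned layer-norm parameters are omitted, and the usage example substitutes a hypothetical stand-in attention that gives every token equal weight. All names here are illustrative.

```python
import math


def layer_norm(x, eps=1e-6):
    """Normalize a vector to zero mean and unit variance (learned scale/shift omitted)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def gelu(x):
    """Exact GELU, the nonlinearity used in ViT's MLP."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def mlp(x, w1, w2):
    """Two-layer MLP applied to one token: D -> hidden -> D, with GELU in between (no biases)."""
    hidden = [gelu(sum(a * w for a, w in zip(x, col))) for col in zip(*w1)]
    return [sum(h * w for h, w in zip(hidden, col)) for col in zip(*w2)]


def encoder_block(z, attention, w1, w2):
    """One pre-norm block: z' = MSA(LN(z)) + z, then z_out = MLP(LN(z')) + z'.
    `attention` maps a list of token vectors to a list of the same shape."""
    attended = attention([layer_norm(t) for t in z])
    z_mid = [[a + b for a, b in zip(t, u)] for t, u in zip(attended, z)]
    return [[a + b for a, b in zip(mlp(layer_norm(t), w1, w2), t)] for t in z_mid]


def uniform_attention(tokens):
    """Stand-in attention: every token attends equally to all tokens (average pooling)."""
    mean = [sum(column) / len(tokens) for column in zip(*tokens)]
    return [list(mean) for _ in tokens]


z = [[1.0, 2.0], [3.0, 0.0]]                               # two tokens, D = 2
w1 = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]          # D x hidden (hidden = 4)
w2 = [[0.1, -0.1], [0.2, -0.2], [0.3, -0.3], [0.4, -0.4]]  # hidden x D
out = encoder_block(z, uniform_attention, w1, w2)
print(len(out), len(out[0]))  # 2 2: the block preserves the sequence shape
```

The residual connections mean each block only adds a correction to its input, which is part of why deep stacks of these blocks remain trainable.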

This architecture, introduced in the landmark paper titled “An Image is Worth 16×16 Words”, underscores the model’s efficiency. By applying transformers, originally designed for text, to images, ViT models match or outperform strong convolutional networks on standard benchmarks when pre-trained at scale. Finally, ViT employs a classification head that translates its learned representation of the image into concrete class labels, which lets it perform image classification with remarkable accuracy.

Exploring ViT Variants and Their Impact

Since the introduction of the original Vision Transformer model, the exploration of ViT variants has been a vibrant area of research, pushing the boundaries of what is possible in computer vision tasks. These variants, designed to tackle a wide array of vision processing tasks, underscore the versatility and adaptability of the transformer architecture in addressing the complexities of visual data.

One of the most notable advancements in this domain is the Swin Transformer, which uses a hierarchical transformer architecture optimized for efficiency and scalability. Whereas the original ViT keeps a single resolution throughout and lets every patch attend to every other patch, the Swin Transformer computes self-attention within local, non-overlapping windows and shifts the window partition between consecutive blocks so that information can flow across window boundaries. Between stages, it merges neighboring patches, building a pyramid of feature maps at progressively lower resolutions. This design adapts to the varying scales of visual features present within an image, from minute details to the overarching structure.
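The window mechanics can be sketched on a small grid of token IDs. `window_partition` groups tokens into non-overlapping windows (attention would then run only inside each window), and `cyclic_shift` rolls the grid so that the same regular partition yields displaced windows, the trick the Swin paper uses to implement shifted windows efficiently. This sketch omits the attention mask Swin applies so that tokens wrapped around from the opposite edge do not attend to each other; the shift of 1 for a 2×2 window follows Swin’s choice of half the window size. Function names are illustrative.

```python
def window_partition(grid, window):
    """Group an H x W grid of tokens into non-overlapping window x window blocks.
    In Swin, self-attention is computed only among tokens in the same block."""
    height, width = len(grid), len(grid[0])
    if height % window or width % window:
        raise ValueError("grid height and width must be divisible by the window size")
    windows = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            windows.append([grid[r][c] for r in range(top, top + window)
                            for c in range(left, left + window)])
    return windows


def cyclic_shift(grid, shift):
    """Roll the grid up and left by `shift` rows and columns, wrapping around, so that a
    regular partition of the shifted grid corresponds to windows displaced by `shift`."""
    height, width = len(grid), len(grid[0])
    return [[grid[(r + shift) % height][(c + shift) % width] for c in range(width)]
            for r in range(height)]


tokens = [[4 * r + c for c in range(4)] for r in range(4)]  # 4 x 4 grid of token IDs
print(window_partition(tokens, 2)[0])                       # [0, 1, 4, 5]
print(window_partition(cyclic_shift(tokens, 1), 2)[0])      # [5, 6, 9, 10]
```

After the shift, the first window contains tokens 5, 6, 9, and 10, which belonged to four different windows before; alternating the two partitions is how Swin lets information cross window boundaries without global attention.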

The impact of these ViT variants is profound, demonstrating that transformers, despite being originally conceived for NLP, possess immense potential in revolutionizing computer vision. By employing different architectural tweaks and optimizations, such as those seen in ViT variants, researchers have shown that these models can perform very well on image recognition at scale, including on multiple mid-sized or small image benchmarks. The success of these variants not only attests to the inherent flexibility of the transformer model in adapting to different tasks but also showcases the potential for future innovations in the field, promising further enhancements in accuracy, efficiency, and applicability across a broader spectrum of computer vision challenges.

How Transformers Work in Vision: A Closer Look at ViT

The Vision Transformer (ViT), introduced in the research paper titled “An Image is Worth 16×16 Words”, shows that using transformers for computer vision tasks is not just feasible but highly effective. Its architecture demonstrates that a pure transformer, with no convolutional network, can analyze the entire image while keeping computational costs in check. By dividing an image into patches and processing them as a sequence, ViT performs exceptionally well across various image recognition tasks.

ViT processes the visual data with a series of transformer blocks. This lets the model consider the entire image holistically, a significant departure from the localized analysis typical of convolutional neural networks (CNNs). The paper fundamentally shifts the paradigm by showing that reliance on CNNs is not necessary for achieving high performance in many computer vision tasks. By treating each patch as a token, much as words are treated in natural language processing, ViT applies the original transformer’s self-attention mechanism, dynamically focusing on different parts of the image and on how they relate to one another.

Furthermore, the model’s ability to be pre-trained on large amounts of data and then transferred to multiple mid-sized or small image recognition benchmarks attests to its versatility and effectiveness. This adaptability underscores the transformer’s potential in vision tasks, where it attains excellent results compared to state-of-the-art convolutional networks, thereby redefining the boundaries of what’s possible in computer vision.

ViT Variants Across Tasks

The evolution of Vision Transformer (ViT) models has produced a range of variants, each tailored to particular computer vision tasks or data regimes, from large-scale pre-training to mid-sized and small image recognition benchmarks. These variants illustrate the power of adapting the model architecture to different requirements and show that the applications of transformers to computer vision are vast. Larger ViT models, as well as variants designed to train well on smaller datasets, have significantly pushed the envelope, demonstrating that patch-based models can perform very well across a diverse range of visual tasks.

One notable variant, the Swin Transformer, modifies the standard ViT by processing the image hierarchically. Rather than attending over all patches at a single resolution, it computes attention within local windows and progressively merges patches into coarser feature maps, which lets it handle the entire image efficiently and represent content at multiple scales. Such innovations highlight how transformers can be tailored to vision tasks that demand both broad and fine-grained analysis.

Moreover, the success of these variants, particularly when they are pre-trained on large amounts of data and transferred to different image recognition challenges, underscores the flexibility and potential of transformer-based models in computer vision. The ability of ViT variants to match or outperform traditional convolutional networks on many benchmarks signals a shift toward a more versatile and powerful approach to computer vision tasks, paving the way for future advancements in the field.

Using Transformers Beyond Text: Vision Transformer in Action

The Vision Transformer (ViT), first released in 2020 and published at ICLR 2021, has showcased the versatility of transformers beyond their original application in natural language processing. Its expansion into computer vision has been met with considerable success: when pre-trained at scale, ViT models match or outperform state-of-the-art convolutional networks across a variety of benchmarks. By breaking the image into patches, the architecture shows that a transformer with multi-head attention can process visual data effectively, challenging the long-held belief that CNNs are necessary for these tasks.

Applications of ViT have demonstrated its efficacy not only in traditional areas like image recognition but also in more nuanced tasks that require understanding the complex interplay between different parts of an image. This capability stems from the model’s design, which, as outlined in the paper titled “An Image is Worth 16×16 Words,” analyzes the image using both the individual and the collective information of its patches.

The success of ViT across computer vision tasks demonstrates the potential of transformer models to redefine visual processing. ViT sidesteps the locality constraints built into CNNs and pairs a global view of the image with the scalability of transformer models. This heralds a new era in computer vision, in which applications and deployments of such models, including on edge devices such as the NVIDIA Jetson, are only beginning to be explored.

Swin Transformer: A Novel ViT Variant

The Swin Transformer represents a significant advancement among Vision Transformer (ViT) variants, adapting the transformer for strong performance across a spectrum of computer vision tasks. As a hierarchical transformer, it starts from small patches and merges neighboring patches between stages, so the feature maps become progressively coarser while their channel dimension grows, allowing a more granular and efficient analysis of the image.

This architecture is particularly adept at handling visual data at various scales, making it well suited to tasks ranging from detailed image recognition to comprehensive scene understanding. Its design emphasizes flexibility and scalability, so it can manage the computational demands of large images efficiently. Instead of global attention, each transformer block computes self-attention only within local windows, alternating between regular and shifted window partitions; this keeps the cost of attention linear in the number of patches rather than quadratic, so computational resources are spent where they matter.
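The savings can be estimated with the cost formulas given in the Swin Transformer paper for an h×w feature map with C channels and M×M windows: global multi-head self-attention costs 4hwC² + 2(hw)²C, and window attention costs 4hwC² + 2M²hwC. The sketch below evaluates both for sizes assumed to match the first stage of the smallest Swin model (a 56×56 map, C = 96, M = 7). It counts only these dominant multiply-add terms, and the function names are illustrative.

```python
def global_msa_cost(h, w, c):
    """Multiply-adds of global multi-head self-attention on an h x w map with c channels."""
    return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c


def window_msa_cost(h, w, c, m):
    """Multiply-adds of self-attention restricted to non-overlapping m x m windows."""
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c


full = global_msa_cost(56, 56, 96)
windowed = window_msa_cost(56, 56, 96, 7)
print(full, windowed, round(full / windowed, 1))  # 2003828736 145108992 13.8
```

The first term (the linear projections) is the same in both; the difference lies in the second. Doubling the side length of the map multiplies the window cost by exactly 4, in step with the number of patches, while the global term grows roughly 16-fold.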

The impact of the Swin Transformer extends beyond just technical improvements; it signifies a broader shift in how transformers are applied to computer vision, highlighting the potential for these models to evolve and adapt in response to the diverse and growing demands of the field. As a novel ViT variant, the Swin Transformer sets a new standard for what is possible with transformer-based models in computer vision, promising further innovations and applications that could redefine the boundaries of image analysis and interpretation.

Conclusion: The Future of Computer Vision with Vision Transformer

The introduction and evolution of the Vision Transformer (ViT) have ushered in a new era for computer vision, challenging conventional methodologies and establishing a promising direction for future research and applications. The impact of ViT models, underscored by their ability to match or outperform state-of-the-art convolutional networks on numerous benchmarks when pre-trained at scale, highlights the immense potential of transformers for computer vision tasks.

The success of ViT and its variants, such as the Swin Transformer, demonstrates the adaptability and efficacy of transformer models across a wide array of visual processing challenges. By leveraging self-attention and global context, ViT models have shown that a comprehensive and nuanced analysis of visual data is achievable, rivaling and often surpassing traditional convolutional approaches.

Looking forward, the continued exploration and development of ViT models promise to unlock even greater capabilities in computer vision. The potential for these models to expand the scope of applications in the field is vast, ranging from advanced image recognition systems to sophisticated scene analysis tools. As researchers and practitioners build upon the foundational work of ViT, computer vision appears poised for a wave of innovations that will further harness the power of transformers, advancing the ability of machines to understand and interpret the visual world with unprecedented depth and accuracy.

Frequently Asked Questions about Vision Transformers

Vision Transformers (ViTs) have emerged as a groundbreaking technology in the field of computer vision, challenging conventional approaches and offering new possibilities. As interest in ViTs grows, so do questions about their functionality, advantages, and applications. Below, we’ve compiled a list of frequently asked questions to provide insights into the transformative impact of Vision Transformers on image processing and analysis.

What Are Vision Transformers?

Vision Transformers (ViTs) are a class of deep learning models that adapt the transformer architecture from natural language processing (NLP) to computer vision tasks. Unlike traditional methods that rely on convolutional neural networks (CNNs), ViTs divide images into patches and apply self-attention to capture global dependencies within the image. This approach allows ViTs, especially when pre-trained on large datasets, to achieve competitive or superior performance on various image processing tasks, including image classification, object detection, and semantic segmentation.

How Do Vision Transformers Work?

Vision Transformers work by first splitting an image into a grid of fixed-size patches. Each patch is then flattened and transformed into a vector through a process called embedding. These vectors, along with positional encodings, are fed into a series of transformer blocks that utilize self-attention mechanisms to understand the relationships between different patches of the image. This process enables the model to consider the entire context of the image, improving its ability to classify or interpret visual data accurately.

Why Are Vision Transformers Important for Computer Vision Tasks?

Vision Transformers are important for computer vision because they introduce an approach to image analysis that departs from the local perspective of CNNs. Using self-attention, ViTs consider the entire image holistically, leading to strong performance on tasks such as image classification: pre-trained on the large JFT-300M dataset, the largest model in the original paper, ViT-H/14, reached about 88.5% top-1 accuracy on ImageNet, on par with or ahead of the best CNNs of the time. Their ability to efficiently process global image features makes them a powerful tool for advancing computer vision.

Can Vision Transformers Outperform CNNs in Image Recognition?

Yes, given enough pre-training data. Studies have shown that ViTs pre-trained on large datasets achieve state-of-the-art performance on major benchmarks, including ImageNet, with accuracy that matches or surpasses advanced CNN models. Trained on ImageNet alone without strong regularization, however, the original ViT models trail ResNets of comparable size by a few percentage points. The key to their success at scale lies in the transformer’s ability to capture long-range dependencies across the image, allowing for a more comprehensive understanding and classification of visual data.

What Makes Vision Transformers Efficient in Image Classification?

Vision Transformers are efficient in image classification due to their ability to learn global image features through self-attention mechanisms. Unlike CNNs, which primarily focus on local features, ViTs analyze relationships between all parts of an image, enabling them to capture complex patterns more effectively. Additionally, the scalability of transformer models allows for efficient training on large datasets, further enhancing their performance on image classification tasks with increasingly accurate results as the dataset size grows.

How Are Vision Transformers Trained?

Vision Transformers are typically trained with transfer learning. A ViT model is first pre-trained on a large dataset, such as ImageNet-21k (about 14 million images across roughly 21,000 categories) or the even larger JFT-300M, so that it learns a wide variety of visual features. The model is then fine-tuned on a smaller, task-specific dataset, often at a higher resolution, to achieve high performance on a specific computer vision task, which significantly reduces the training time and computational resources that task requires.
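The fine-tuning step can be sketched as a head swap. Following the ViT paper, the pre-trained prediction head is removed and replaced with a zero-initialized layer that has one row of D weights per new class, while the encoder parameters are reused unchanged. Representing a model as a Python dict with `encoder`, `head_weights`, and `head_bias` entries is a hypothetical convention for this example only, as are the helper names.

```python
def replace_head(model, num_classes):
    """Return a copy of `model` whose prediction head is a zero-initialized linear layer
    for `num_classes` classes; the pre-trained encoder is shared, not copied."""
    hidden_dim = len(model["head_weights"][0])
    tuned = dict(model)  # shallow copy: "encoder" still points at the pre-trained weights
    tuned["head_weights"] = [[0.0] * hidden_dim for _ in range(num_classes)]
    tuned["head_bias"] = [0.0] * num_classes
    return tuned


def head_logits(model, features):
    """Class scores for one D-dim class-token representation."""
    return [sum(w * f for w, f in zip(row, features)) + b
            for row, b in zip(model["head_weights"], model["head_bias"])]


# Hypothetical pre-trained model: D = 4 features and a 21-class head from pre-training.
pretrained = {
    "encoder": {"blocks": "pre-trained encoder weights would live here"},
    "head_weights": [[0.1 * (i + j) for j in range(4)] for i in range(21)],
    "head_bias": [0.0] * 21,
}
tuned = replace_head(pretrained, num_classes=10)
print(head_logits(tuned, [0.3, -1.2, 0.8, 2.0]))  # ten zeros: every class starts equally likely
```

Starting the new head at zero means fine-tuning begins from uniform predictions rather than from scores tied to the old label set.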

What Are the Applications of Vision Transformers?

Vision Transformers have been successfully applied to a range of computer vision tasks, from image classification to more complex challenges like object detection, semantic segmentation, and image generation. In image classification, for instance, ViTs pre-trained on JFT-300M have exceeded 88% top-1 accuracy on the ImageNet benchmark. Their ability to capture the global context of images also makes them well suited to medical image analysis, autonomous vehicle navigation, and content-based image retrieval systems, showcasing their versatility across domains.

What Are the Challenges of Implementing Vision Transformers?

Implementing Vision Transformers comes with challenges, primarily their computational cost (standard self-attention grows quadratically with the number of patches) and their need for large amounts of training data. ViTs require significant GPU resources for training, which can be a barrier for those without access to high-performance computing facilities. Additionally, while they excel with large datasets, their performance on smaller datasets without extensive pre-training can lag behind that of more traditional models like CNNs. Optimizing ViTs for specific tasks and managing resource requirements are key challenges for wider adoption.

How Do Vision Transformers Handle Large Images?

Vision Transformers handle larger images by keeping the patch size fixed, so a higher-resolution image simply produces a longer sequence of patches. Because every patch attends to every other patch, the cost of standard self-attention grows quadratically with that sequence length, so resolution cannot be increased for free. When a model pre-trained at one resolution is fine-tuned at a higher one, the pre-trained position embeddings are interpolated in 2D to match the new grid of patches. For very large images, hierarchical designs such as the Swin Transformer restrict attention to local windows and process the image at multiple resolutions, keeping the cost roughly linear in image size.
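Resizing the position-embedding grid can be sketched with bilinear interpolation. A model pre-trained on 224×224 images with 16×16 patches has a 14×14 grid; fine-tuned at 384×384, the grid becomes 24×24 and the sequence grows from 196 to 576 patch tokens. The corner-aligned bilinear scheme and the `bilinear_resize` name are assumptions for illustration, since implementations differ in the exact interpolation used; the class token’s embedding, which has no grid position, would be kept unchanged.

```python
def bilinear_resize(grid, new_size):
    """Resize a square size x size grid of D-dim embeddings to new_size x new_size
    using corner-aligned bilinear interpolation."""
    old_size, dim = len(grid), len(grid[0][0])
    scale = (old_size - 1) / (new_size - 1) if new_size > 1 else 0.0

    def neighbors(i):
        """Lower/upper source indices and the interpolation fraction for target index i."""
        pos = i * scale
        lo = min(int(pos), old_size - 1)
        hi = min(lo + 1, old_size - 1)
        return lo, hi, pos - lo

    resized = []
    for i in range(new_size):
        y0, y1, fy = neighbors(i)
        row = []
        for j in range(new_size):
            x0, x1, fx = neighbors(j)
            row.append([
                (1 - fy) * ((1 - fx) * grid[y0][x0][d] + fx * grid[y0][x1][d])
                + fy * ((1 - fx) * grid[y1][x0][d] + fx * grid[y1][x1][d])
                for d in range(dim)
            ])
        resized.append(row)
    return resized


# Sequence length and attention-matrix size (including the class token) at each resolution.
for side in (224, 384):
    patches = (side // 16) ** 2
    print(side, patches, (patches + 1) ** 2)  # 224 196 38809, then 384 576 332929

# Toy 14 x 14 grid whose "embedding" at (r, c) is simply [r, c].
old_grid = [[[float(r), float(c)] for c in range(14)] for r in range(14)]
new_grid = bilinear_resize(old_grid, 24)
print(len(new_grid), len(new_grid[0]))  # 24 24
```

The attention matrix grows more than eightfold between the two resolutions, which is the quadratic cost mentioned above in concrete terms.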

What Is the Future of Vision Transformers in Computer Vision?

The future of Vision Transformers in computer vision looks promising, with ongoing research aimed at enhancing their efficiency, accuracy, and applicability to a broader range of tasks. Efforts to reduce their computational requirements, improve training methods, and develop more robust models for small datasets are key areas of focus. Additionally, as ViTs continue to match or outperform traditional models on various benchmarks, their integration into real-world applications, from healthcare diagnostics to automated systems in vehicles and smartphones, is expected to grow, further solidifying their role in advancing computer vision technology.